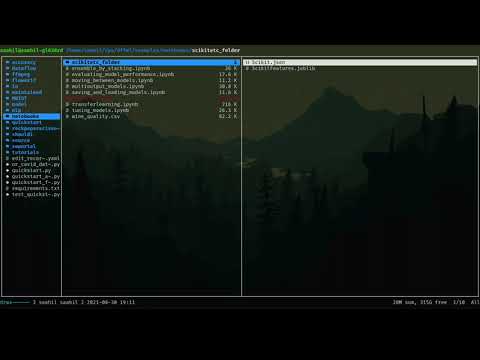

Saving and loading models

In this notebook, we’ll be using the red wine quality data set. The purpose of this notebook is to show how to save a trained model and load it again in a fresh session.

Import Packages

Let us import dffml and other packages that we might need.

[1]:

from dffml import *

import asyncio

import nest_asyncio

A notebook already runs its own event loop, so to call asyncio.run() inside it we first need to call nest_asyncio.apply().

[2]:

nest_asyncio.apply()

Build our Dataset

DFFML has a very convenient function, cached_download(), that downloads a dataset and skips the download if the file is already on disk.

cached_download() takes the following parameters:

`url` (str) – The URL to download

`target_path` (str, pathlib.Path) – Path on disk to store download

`expected_hash` (str) – SHA384 hash of the contents

`protocol_allowlist` (list, optional) – List of strings, one of which the URL must start with. We won't be using this in our case.

[3]:

data_path = await cached_download(
    "https://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv",
    "wine_quality.csv",
    "789e98688f9ff18d4bae35afb71b006116ec9c529c1b21563fdaf5e785aea8b3937a55a4919c91ca2b0acb671300072c",
)
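The caching and hash check described above can be sketched with the standard library alone. This is a minimal illustration of the idea, not DFFML's implementation: `sha384_of`, `cached_fetch`, and `fake_fetch` are hypothetical names, and in-memory bytes stand in for a real download.

```python
import hashlib
import pathlib
import tempfile


def sha384_of(path):
    """Return the hex SHA-384 digest of a file, read in chunks."""
    digest = hashlib.sha384()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cached_fetch(fetch, target_path, expected_hash):
    """Hypothetical helper: call fetch() only if target_path is missing,
    then verify the SHA-384 digest of the file on disk."""
    target_path = pathlib.Path(target_path)
    if not target_path.exists():
        target_path.write_bytes(fetch())
    actual = sha384_of(target_path)
    if actual != expected_hash:
        raise ValueError(f"hash mismatch: expected {expected_hash}, got {actual}")
    return target_path


# In-memory bytes stand in for the downloaded file.
payload = b"fixed acidity;quality\n7.4;5\n"
calls = []


def fake_fetch():
    calls.append(1)  # record each simulated download
    return payload


with tempfile.TemporaryDirectory() as tmp:
    target = pathlib.Path(tmp) / "wine_quality.csv"
    expected = hashlib.sha384(payload).hexdigest()
    cached_fetch(fake_fetch, target, expected)
    cached_fetch(fake_fetch, target, expected)  # file exists, so no second fetch
    fetch_count = len(calls)
```

A SHA-384 digest written in hexadecimal is 96 characters long, which matches the length of the hash string passed to cached_download() above.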

In DFFML, we use asynchronous code where we can to get that extra bit of performance. Let’s use the async version of load() to load the dataset that we just downloaded into a source. We do this by declaring a CSVSource with the data_path and a delimiter of ";", since the file we downloaded is separated by semicolons rather than commas.

After that, we can build a list of records by collecting each one that load() yields.

Feel free to also try out the non-async version of load().

[4]:

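async def load_dataset(data_path):
    # The wine quality CSV is semicolon-separated
    data_source = CSVSource(filename=data_path, delimiter=";")
    # load() is an async generator, so collect its records with "async for"
    data = [record async for record in load(data_source)]
    return data

data = asyncio.run(load_dataset(data_path))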
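The `[record async for record in ...]` pattern in the cell above is an asynchronous list comprehension over an async generator. The sketch below shows the same shape using only the standard library; `iter_rows` and `collect_rows` are hypothetical stand-ins and do not reflect how DFFML's load() is implemented.

```python
import asyncio
import csv
import io

# Small semicolon-separated sample in the same style as the wine data.
SAMPLE = "fixed acidity;pH;quality\n7.4;3.51;5\n7.8;3.2;5\n"


async def iter_rows(text, delimiter=";"):
    """Hypothetical async generator yielding one dict per CSV row."""
    for row in csv.DictReader(io.StringIO(text), delimiter=delimiter):
        await asyncio.sleep(0)  # hand control back to the event loop
        yield row


async def collect_rows(text):
    # Same shape as the list comprehension in load_dataset()
    return [row async for row in iter_rows(text)]


rows = asyncio.run(collect_rows(SAMPLE))
print(len(rows), rows[0]["pH"])
```

Run as a plain script, this needs nothing beyond asyncio.run(). Inside a notebook, the nest_asyncio.apply() call from earlier is what makes asyncio.run() usable.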

DFFML prints a record as a neatly formatted table. Let’s have a look at the first record and the total number of records.

[5]:

print(data[0], "\n")

print(len(data))

Key: 0
Record Features
+----------------------------------------------------------------------+
| fixed acidity | 7.4 |
+----------------------------------------------------------------------+
| volatile acidity| 0.7 |
+----------------------------------------------------------------------+
| citric acid | 0 |
+----------------------------------------------------------------------+
| residual sugar | 1.9 |
+----------------------------------------------------------------------+
| chlorides | 0.076 |
+----------------------------------------------------------------------+
|free sulfur dioxi| 11 |
+----------------------------------------------------------------------+
|total sulfur diox| 34 |
+----------------------------------------------------------------------+
| density | 0.9978 |
+----------------------------------------------------------------------+
| pH | 3.51 |
+----------------------------------------------------------------------+
| sulphates | 0.56 |
+----------------------------------------------------------------------+
| alcohol | 9.4 |
+----------------------------------------------------------------------+
| quality | 5 |
+----------------------------------------------------------------------+
Prediction: Undetermined

1599

Let’s split our dataset into training and test sets. The first 320 records are held out for testing and the remaining 1279 are used for training.

[6]:

train_data = data[320:]

test_data = data[:320]

print(len(data), len(train_data), len(test_data))

1599 1279 320
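The slicing above can be wrapped in a small helper, sketched here with a list of integers standing in for the loaded records. `holdout_split` is a hypothetical name, not a DFFML function. Like the cell above, it takes records in file order without shuffling.

```python
def holdout_split(records, test_size):
    """Hold out the first test_size records for testing; train on the rest."""
    if not 0 <= test_size <= len(records):
        raise ValueError("test_size must be between 0 and len(records)")
    return records[test_size:], records[:test_size]


records = list(range(1599))  # stand-in for the 1599 loaded records
train_part, test_part = holdout_split(records, 320)
print(len(records), len(train_part), len(test_part))
```

Because the two slices meet at the same index, every record lands in exactly one of the two sets.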

Instantiate our Models with parameters

DFFML makes it quite easy to load models dynamically by name using the Model.load() function. After that, you just have to parameterize the loaded models and they are ready to train interchangeably!

For this example, we’ll demonstrate a single model, scikitetc, but feel free to try other models in the same way.

[7]:

ScikitETCModel = Model.load("scikitetc")

features = Features(
    Feature("fixed acidity", int, 1),
    Feature("volatile acidity", int, 1),
    Feature("citric acid", int, 1),
    Feature("residual sugar", int, 1),
    Feature("chlorides", int, 1),
    Feature("free sulfur dioxide", int, 1),
    Feature("total sulfur dioxide", int, 1),
    Feature("density", int, 1),
    Feature("pH", int, 1),
    Feature("sulphates", int, 1),
    Feature("alcohol", int, 1),
)

predict_feature = Feature("quality", int, 1)

model = ScikitETCModel(
    features=features,
    predict=predict_feature,
    location="scikitetc",
    n_estimators=150,
)
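Looking up a model class by a short name and then instantiating it with parameters, as in the cell above, is a common plugin pattern. The sketch below illustrates the general idea with a plain dictionary registry. It is purely illustrative: `register`, `load_by_name`, and `MajorityModel` are hypothetical, and this notebook does not show how Model.load() works internally.

```python
_REGISTRY = {}


def register(name):
    """Class decorator that files a class under a short name."""
    def decorator(cls):
        _REGISTRY[name] = cls
        return cls
    return decorator


def load_by_name(name):
    """Return the class registered under name."""
    try:
        return _REGISTRY[name]
    except KeyError:
        raise KeyError(f"no model registered as {name!r}") from None


@register("majority")
class MajorityModel:
    """Toy model: always predicts the most common training label."""

    def __init__(self, location):
        self.location = location
        self.label = None

    def fit(self, labels):
        self.label = max(set(labels), key=labels.count)

    def predict(self):
        return self.label


# Step 1: look the class up by name. Step 2: parameterize an instance.
ModelClass = load_by_name("majority")
toy = ModelClass(location="majority-model")
toy.fit([5, 5, 6, 7, 5])
```

Separating the lookup from the instantiation is what lets the rest of the code stay the same when a different name is loaded.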

Train our Models

Finally, our model is ready to be trained using the high-level API. We pass each record as a separate argument by using the unpacking operator (*).

[8]:

await train(model, *train_data)

Model Saved!

When we train a model in DFFML, it is automatically saved to the location provided when the model was instantiated.
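The unpacking operator used in the train() call above spreads a list into separate positional arguments. A short sketch of the difference, using a hypothetical function `count_records` with the same call shape (one fixed argument followed by any number of records):

```python
def count_records(label, *records):
    """Hypothetical function: one fixed argument, then any number of records."""
    return label, len(records)


batch = [{"quality": 5}, {"quality": 6}, {"quality": 5}]

# With *, this is the same call as
# count_records("demo", batch[0], batch[1], batch[2]).
unpacked = count_records("demo", *batch)

# Without *, the whole list arrives as a single argument.
not_unpacked = count_records("demo", batch)

print(unpacked, not_unpacked)
```

Forgetting the `*` would hand the function one list instead of many records, which is why the training and scoring cells both unpack their data.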

Test our Models

To test our model, we’ll use the score() function in the high-level API.

We ask for the score to be computed as the mean squared error by passing “mse” to AccuracyScorer.load(). Although the result is printed as “Accuracy”, it is an error measure, so lower values are better.

[9]:

MeanSquaredErrorAccuracy = AccuracyScorer.load("mse")

scorer = MeanSquaredErrorAccuracy()

Accuracy = await score(model, scorer, predict_feature, *test_data)

print("Accuracy:", Accuracy)

Accuracy: 0.46875
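For reference, the mean squared error is the average of the squared differences between actual and predicted values. The sketch below computes it by hand on made-up numbers; it is not the scorer's own code, and `mean_squared_error` is defined here for illustration only.

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences between paired values."""
    if len(actual) != len(predicted):
        raise ValueError("inputs must have the same length")
    if not actual:
        raise ValueError("inputs must not be empty")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Made-up quality scores, not taken from the wine data.
actual_quality = [5, 5, 6, 7]
predicted_quality = [5, 6, 6, 5]

# Squared differences are 0, 1, 0 and 4, so the mean is 5 / 4.
error = mean_squared_error(actual_quality, predicted_quality)
print(error)
```

If the score above is an average over the 320 test records, 0.46875 corresponds to a summed squared error of 150.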

Restart kernel and load the model

Restart the kernel and execute the cells below to demonstrate loading a saved model.

[ ]:

from IPython.core.display import HTML

HTML(

"""

<script>

Jupyter.notebook.kernel.restart();

</script>

"""

)

[1]:

from IPython.core.display import HTML

HTML("<script>Jupyter.notebook.execute_cells_below()</script>")

Import Packages

[2]:

from dffml import *

import asyncio

import nest_asyncio

To use asyncio.run() in a notebook, we need to call nest_asyncio.apply().

[3]:

nest_asyncio.apply()

Build our Dataset

[4]:

data_path = await cached_download(
    "https://archive.ics.uci.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv",
    "wine_quality.csv",
    "789e98688f9ff18d4bae35afb71b006116ec9c529c1b21563fdaf5e785aea8b3937a55a4919c91ca2b0acb671300072c",
)

Load and split data

[5]:

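async def load_dataset(data_path):
    # The wine quality CSV is semicolon-separated
    data_source = CSVSource(filename=data_path, delimiter=";")
    # load() is an async generator, so collect its records with "async for"
    data = [record async for record in load(data_source)]
    return data

data = asyncio.run(load_dataset(data_path))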

[6]:

train_data = data[320:]

test_data = data[:320]

print(len(data), len(train_data), len(test_data))

1599 1279 320

Load our saved model

In DFFML, we can load our previously trained model by instantiating it with the same location.

[7]:

ScikitETCModel = Model.load("scikitetc")

features = Features(
    Feature("fixed acidity", int, 1),
    Feature("volatile acidity", int, 1),
    Feature("citric acid", int, 1),
    Feature("residual sugar", int, 1),
    Feature("chlorides", int, 1),
    Feature("free sulfur dioxide", int, 1),
    Feature("total sulfur dioxide", int, 1),
    Feature("density", int, 1),
    Feature("pH", int, 1),
    Feature("sulphates", int, 1),
    Feature("alcohol", int, 1),
)

predict_feature = Feature("quality", int, 1)

model = ScikitETCModel(
    features=features,
    predict=predict_feature,
    location="scikitetc",
    n_estimators=150,
)

Let’s check that the model is already trained by scoring it on the test data without calling train() again.

[8]:

MeanSquaredErrorAccuracy = AccuracyScorer.load("mse")

scorer = MeanSquaredErrorAccuracy()

Accuracy = await score(model, scorer, predict_feature, *test_data)

print("Accuracy:", Accuracy)

Accuracy: 0.46875

We can see that DFFML loaded the previously trained model, which produces exactly the same score (0.46875) as before the restart.
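The save-then-reload round trip can be sketched independently of any ML library. The example below is a toy illustration under stated assumptions: `MeanModel`, `save_model`, `load_model`, and the `model.json` file layout are all hypothetical, and this is not how DFFML stores models on disk.

```python
import json
import pathlib
import tempfile


class MeanModel:
    """Toy model: predicts the mean of the training targets."""

    def __init__(self):
        self.mean = None

    def fit(self, targets):
        self.mean = sum(targets) / len(targets)

    def predict(self):
        return self.mean


def save_model(model, location):
    """Write the model's state to <location>/model.json (hypothetical layout)."""
    directory = pathlib.Path(location)
    directory.mkdir(parents=True, exist_ok=True)
    (directory / "model.json").write_text(json.dumps({"mean": model.mean}))


def load_model(location):
    """Rebuild a model from whatever save_model() stored at location."""
    state = json.loads((pathlib.Path(location) / "model.json").read_text())
    model = MeanModel()
    model.mean = state["mean"]
    return model


with tempfile.TemporaryDirectory() as tmp:
    trained = MeanModel()
    trained.fit([5, 5, 6, 7])
    save_model(trained, tmp)
    restored = load_model(tmp)  # stands in for the fresh session
    same_prediction = restored.predict() == trained.predict()

print(same_prediction)
```

As in the notebook, the check that matters is that the restored model gives the same result as the one that was trained, without any retraining.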