Video Processing Procedures

The following pseudo code shows the video processing procedure:

```c
/* session and init_param are already set up; the pool helpers,
   process_output_frame(), and end_of_stream() stand for application code.
   Error handling for other status codes is omitted. */
mfxFrameAllocRequest response[2];   /* [0]: input surfaces, [1]: output surfaces */
mfxSyncPoint syncp;
mfxStatus sts;

MFXVideoVPP_QueryIOSurf(session, &init_param, response);
allocate_pool_of_surfaces(in_pool, response[0].NumFrameSuggested);
allocate_pool_of_surfaces(out_pool, response[1].NumFrameSuggested);
MFXVideoVPP_Init(session, &init_param);
mfxFrameSurface1 *in=find_unlocked_surface_and_fill_content(in_pool);
mfxFrameSurface1 *out=find_unlocked_surface_from_the_pool(out_pool);
for (;;) {
    sts=MFXVideoVPP_RunFrameVPPAsync(session,in,out,NULL,&syncp);
    if (sts==MFX_ERR_MORE_SURFACE || sts==MFX_ERR_NONE) {
        /* wait for the asynchronous operation, then consume the output */
        MFXVideoCORE_SyncOperation(session,syncp,INFINITE);
        process_output_frame(out);
        out=find_unlocked_surface_from_the_pool(out_pool);
    }
    if (sts==MFX_ERR_MORE_DATA && in==NULL)
        break;                       /* all buffered frames have been drained */
    if (sts==MFX_ERR_NONE || sts==MFX_ERR_MORE_DATA) {
        /* VPP is ready for the next input frame */
        in=find_unlocked_surface_from_the_pool(in_pool);
        fill_content_for_video_processing(in);
        if (end_of_stream())
            in=NULL;                 /* a NULL input drains the remaining frames */
    }
}
MFXVideoVPP_Close(session);
free_pool_of_surfaces(in_pool);
free_pool_of_surfaces(out_pool);
```

Note the following key points about the example:

- The application uses the MFXVideoVPP_QueryIOSurf() function to obtain the number of frame surfaces needed for input and output. The application must allocate two frame surface pools: one for the input and one for the output.
- The video processing function MFXVideoVPP_RunFrameVPPAsync() is asynchronous. The application must call the MFXVideoCORE_SyncOperation() function to wait until the output result is ready.
- The body of the video processing procedure covers the following three scenarios:
  - If the number of frames consumed at input is equal to the number of frames generated at output, VPP returns mfxStatus::MFX_ERR_NONE when an output is ready. The application must process the output frame after synchronization, because the MFXVideoVPP_RunFrameVPPAsync() function is asynchronous. The application must provide a NULL input at the end of the sequence to drain any remaining frames.
  - If the number of frames consumed at input is greater than the number of frames generated at output, VPP returns mfxStatus::MFX_ERR_MORE_DATA to request additional input until an output is ready. When the output is ready, VPP returns mfxStatus::MFX_ERR_NONE. The application must process the output frame after synchronization and provide a NULL input at the end of the sequence to drain any remaining frames.
  - If the number of frames consumed at input is less than the number of frames generated at output, VPP returns either mfxStatus::MFX_ERR_MORE_SURFACE (when more than one output is ready) or mfxStatus::MFX_ERR_NONE (when one output is ready and VPP expects new input). In both cases, the application must process the output frame after synchronization and provide a NULL input at the end of the sequence to drain any remaining frames.
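To make the three scenarios concrete, the following self-contained C sketch replaces the library with a hypothetical mock. The names mock_vpp, mock_run(), run_pipeline(), and the ST_* codes are illustrative only and are not Intel® VPL API; the ST_* values stand in for mfxStatus::MFX_ERR_NONE, mfxStatus::MFX_ERR_MORE_DATA, and mfxStatus::MFX_ERR_MORE_SURFACE. The mock turns every num_in input frames into num_out output frames, and run_pipeline() applies the same status checks as the procedure loop, returning the number of output frames processed.

```c
/* Hypothetical stand-ins for the mfxStatus codes used by the loop. */
typedef enum { ST_NONE, ST_MORE_DATA, ST_MORE_SURFACE } mock_status;

/* Hypothetical VPP mock: emits num_out frames for every num_in inputs. */
typedef struct {
    int num_in, num_out;   /* conversion ratio */
    int buffered;          /* inputs consumed but not yet converted */
    int pending;           /* converted frames not yet written to an output */
} mock_vpp;

static mock_status mock_run(mock_vpp *v, int have_input)
{
    if (v->pending == 0) {               /* nothing queued: consume the input */
        if (!have_input)
            return ST_MORE_DATA;         /* drained (an incomplete group is dropped) */
        if (++v->buffered < v->num_in)
            return ST_MORE_DATA;         /* more input needed before any output */
        v->buffered = 0;
        v->pending = v->num_out;         /* a full group yields num_out frames */
    }
    /* After ST_MORE_SURFACE the loop passes the same input again,
       so the input is not consumed a second time here. */
    v->pending--;                        /* write one frame to the output surface */
    return v->pending > 0 ? ST_MORE_SURFACE : ST_NONE;
}

/* Mirrors the procedure loop and counts processed output frames. */
static int run_pipeline(int num_in, int num_out, int total_inputs)
{
    mock_vpp v = { num_in, num_out, 0, 0 };
    int have_input = total_inputs > 0;   /* first input is filled before the loop */
    int fed = have_input;
    int outputs = 0;

    for (;;) {
        mock_status sts = mock_run(&v, have_input);
        if (sts == ST_MORE_SURFACE || sts == ST_NONE)
            outputs++;                   /* sync, process, take a new output surface */
        if (sts == ST_MORE_DATA && !have_input)
            break;                       /* NULL input and nothing left: done */
        if (sts == ST_NONE || sts == ST_MORE_DATA) {
            have_input = fed < total_inputs;   /* NULL input at end of stream */
            if (have_input)
                fed++;
        }
    }
    return outputs;
}
```

For example, run_pipeline(1, 2, 3) returns 6 through the MORE_SURFACE path (as in frame rate doubling), and run_pipeline(2, 1, 4) returns 2 through the MORE_DATA path. For simplicity, the mock drops an incomplete group of inputs at the end of the stream instead of flushing it.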

Configuration

Intel® VPL configures the video processing pipeline operation based on the difference between the input and output formats specified in the mfxVideoParam structure. The following list shows several examples:

- When the input color format is YUY2 and the output color format is NV12, Intel® VPL enables color conversion from YUY2 to NV12.
- When the input is interlaced and the output is progressive, Intel® VPL enables deinterlacing.
- When the input is single field and the output is interlaced or progressive, Intel® VPL enables field weaving, optionally with deinterlacing.
- When the input is interlaced and the output is single field, Intel® VPL enables field splitting.

In addition to specifying the input and output formats, the application can provide hints to fine-tune the video processing pipeline operation. The application can disable filters in the pipeline by using the mfxExtVPPDoNotUse structure, enable filters by using the mfxExtVPPDoUse structure, and configure filters by using dedicated configuration structures. See the Configurable VPP Filters table for a complete list of configurable video processing filters, their IDs, and configuration structures. See the ExtendedBufferID enumerator for more details.

Intel® VPL ensures that all filters necessary to convert the input format to the output format are included in the pipeline. Intel® VPL may skip some optional filters even if they are explicitly requested by the application, for example due to limitations of the underlying hardware. To notify the application about skipped optional filters, Intel® VPL returns the mfxStatus::MFX_WRN_FILTER_SKIPPED warning. The application can retrieve the list of active filters by attaching the mfxExtVPPDoUse structure to the mfxVideoParam structure and calling the MFXVideoVPP_GetVideoParam() function. The application must allocate enough memory for the filter list.

See the Configurable VPP Filters table for a full list of configurable filters.

| Filter ID | Configuration Structure |
|---|---|

The following example shows video processing configuration:

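```c
/* enable image stabilization filter with default settings */
mfxExtVPPDoUse du;
mfxU32 al=MFX_EXTBUFF_VPP_IMAGE_STABILIZATION;

memset(&du,0,sizeof(du));
du.Header.BufferId=MFX_EXTBUFF_VPP_DOUSE;
du.Header.BufferSz=sizeof(mfxExtVPPDoUse);
du.NumAlg=1;
du.AlgList=&al;

/* configure the mfxVideoParam structure */
mfxVideoParam conf;
mfxExtBuffer *eb=(mfxExtBuffer *)&du;

memset(&conf,0,sizeof(conf));
conf.IOPattern=MFX_IOPATTERN_IN_SYSTEM_MEMORY | MFX_IOPATTERN_OUT_SYSTEM_MEMORY;
conf.NumExtParam=1;
conf.ExtParam=&eb;

/* the input/output formats select the conversion (YV12 -> NV12) */
conf.vpp.In.FourCC=MFX_FOURCC_YV12;
conf.vpp.Out.FourCC=MFX_FOURCC_NV12;
conf.vpp.In.ChromaFormat=conf.vpp.Out.ChromaFormat=MFX_CHROMAFORMAT_YUV420;
conf.vpp.In.PicStruct=conf.vpp.Out.PicStruct=MFX_PICSTRUCT_PROGRESSIVE;
conf.vpp.In.Width=conf.vpp.Out.Width=1920;
conf.vpp.In.Height=conf.vpp.Out.Height=1088;
/* illustrative crop and frame rate values */
conf.vpp.In.CropW=conf.vpp.Out.CropW=1920;
conf.vpp.In.CropH=conf.vpp.Out.CropH=1088;
conf.vpp.In.FrameRateExtN=conf.vpp.Out.FrameRateExtN=30;
conf.vpp.In.FrameRateExtD=conf.vpp.Out.FrameRateExtD=1;

/* video processing initialization (session is an initialized mfxSession) */
MFXVideoVPP_Init(session, &conf);
```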
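The example uses a height of 1088 rather than 1080. A likely reason is surface alignment: assuming the mfxFrameInfo rule that Height must be a multiple of 16 for progressive content and a multiple of 32 otherwise (confirm this in the mfxFrameInfo reference for your API version), a 1080-line picture needs a 1088-line surface. The C sketch below computes that padding; align_up() and surface_height() are illustrative helpers, not library functions.

```c
#include <stdint.h>

/* Round v up to the next multiple of a (a must be a power of two). */
static uint32_t align_up(uint32_t v, uint32_t a)
{
    return (v + a - 1) & ~(a - 1);
}

/* Surface height for a picture height, assuming 16-line alignment for
   progressive content and 32-line alignment for interlaced content. */
static uint32_t surface_height(uint32_t pic_height, int interlaced)
{
    return align_up(pic_height, interlaced ? 32u : 16u);
}
```

With these assumptions, surface_height(1080, 0) and surface_height(1080, 1) both return 1088, and surface_height(720, 1) returns 736; the visible picture size can then be described through the Crop fields.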

Region of Interest

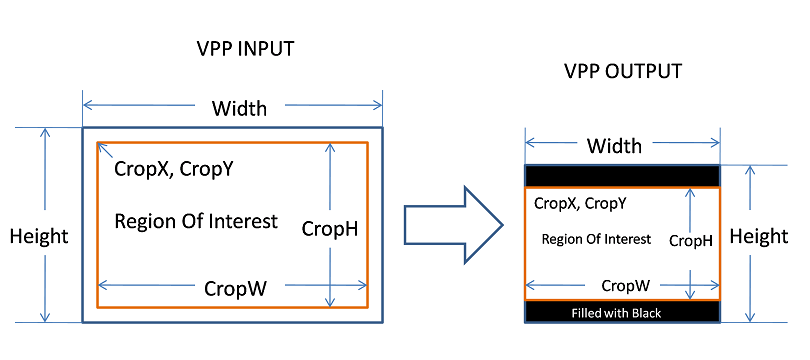

During video processing operations, the application can specify a region of interest for each frame as shown in the following figure:

VPP region of interest operation#

Specifying a region of interest guides the resizing function to achieve special effects, such as resizing from 16:9 to 4:3 while keeping the aspect ratio intact.

Use the CropX, CropY, CropW, and CropH parameters in the mfxVideoParam structure to specify a region of interest. For per-frame dynamic changes, the application should set the CropX, CropY, CropW, and CropH parameters for each frame when calling MFXVideoVPP_RunFrameVPPAsync().

The VPP Region of Interest Operations table shows examples of VPP operations applied to a region of interest.

| Operation | VPP Input Width x Height | VPP Input CropX, CropY, CropW, CropH | VPP Output Width x Height | VPP Output CropX, CropY, CropW, CropH |
|---|---|---|---|---|
| Cropping | 720 x 480 | 16, 16, 688, 448 | 720 x 480 | 16, 16, 688, 448 |
| Resizing | 720 x 480 | 0, 0, 720, 480 | 1440 x 960 | 0, 0, 1440, 960 |
| Horizontal stretching | 720 x 480 | 0, 0, 720, 480 | 640 x 480 | 0, 0, 640, 480 |
| 16:9 to 4:3 with letterboxing at the top and bottom | 1920 x 1088 | 0, 0, 1920, 1088 | 720 x 480 | 0, 36, 720, 408 |
| 4:3 to 16:9 with pillarboxing at the left and right | 720 x 480 | 0, 0, 720, 480 | 1920 x 1088 | 144, 0, 1632, 1088 |
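The letterbox and pillarbox rows follow from preserving the source aspect ratio inside the output frame. The following C sketch computes the output CropX, CropY, CropW, CropH that way; roi_rect and fit_keep_aspect() are illustrative names, not Intel® VPL API, and rounding sizes down to even values is an assumption made for 4:2:0 chroma (a real application may need stricter alignment). The horizontal stretching row intentionally ignores the aspect ratio, so it is not produced by this helper.

```c
#include <stdint.h>

/* Crop rectangle in table order: CropX, CropY, CropW, CropH. */
typedef struct { uint32_t x, y, w, h; } roi_rect;

/* Largest rectangle centered in a dst_w x dst_h output that keeps the
   src_w x src_h aspect ratio: letterbox when the source is wider,
   pillarbox when it is narrower. Sizes are rounded down to even values. */
static roi_rect fit_keep_aspect(uint32_t src_w, uint32_t src_h,
                                uint32_t dst_w, uint32_t dst_h)
{
    roi_rect r = { 0, 0, dst_w, dst_h };
    uint64_t src_cross = (uint64_t)src_w * dst_h;   /* compares src_w/src_h */
    uint64_t dst_cross = (uint64_t)dst_w * src_h;   /* with dst_w/dst_h     */

    if (src_cross > dst_cross) {                    /* wider source: letterbox */
        r.h = (uint32_t)((uint64_t)dst_w * src_h / src_w) & ~1u;
        r.y = (dst_h - r.h) / 2;
    } else if (src_cross < dst_cross) {             /* narrower source: pillarbox */
        r.w = (uint32_t)((uint64_t)dst_h * src_w / src_h) & ~1u;
        r.x = (dst_w - r.w) / 2;
    }
    return r;                                       /* equal ratios: full frame */
}
```

For 1920 x 1088 into 720 x 480 the sketch yields 0, 36, 720, 408, and for 720 x 480 into 1920 x 1088 it yields 144, 0, 1632, 1088, matching the last two rows of the table.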

Multi-view Video Processing

Intel® VPL video processing supports processing multiple views. For video processing initialization, the application needs to attach the mfxExtMVCSeqDesc structure to the mfxVideoParam structure and call the MFXVideoVPP_Init() function. The function saves the view identifiers.

During video processing, Intel® VPL processes each view individually. Intel® VPL refers to the FrameID field of the mfxFrameInfo structure to configure each view according to its processing pipeline. If the video processing source frame is not the output from the Intel® VPL MVC decoder, then the application needs to fill the FrameID field before calling the MFXVideoVPP_RunFrameVPPAsync() function. This is shown in the following pseudo code:

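```c
mfxExtBuffer *eb;
mfxExtMVCSeqDesc seq_desc;
mfxVideoParam init_param;

/* describe the views; fill the view list in seq_desc as needed */
memset(&seq_desc, 0, sizeof(seq_desc));
seq_desc.Header.BufferId = MFX_EXTBUFF_MVC_SEQ_DESC;
seq_desc.Header.BufferSz = sizeof(mfxExtMVCSeqDesc);

init_param.ExtParam = &eb;
init_param.NumExtParam=1;
eb=(mfxExtBuffer *)&seq_desc;

/* init VPP */
MFXVideoVPP_Init(session, &init_param);

/* perform processing (status checks and loop exit as in the procedure above) */
for (;;) {
    /* if in was not produced by the MVC decoder, fill its view ID
       in in->Info.FrameId before the call */
    MFXVideoVPP_RunFrameVPPAsync(session,in,out,NULL,&syncp);
    MFXVideoCORE_SyncOperation(session,syncp,INFINITE);
}

/* close VPP */
MFXVideoVPP_Close(session);
```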

Video Processing 3DLUT

Intel® VPL video processing supports 3DLUT with an Intel HW-specific memory layout. The following pseudo code shows how to create a 3DLUT surface with the MFX_3DLUT_MEMORY_LAYOUT_INTEL_65LUT layout.

```c
// va_dpy must be a valid display, for example one returned by vaGetDisplayDRM()
VADisplay va_dpy = 0;
VASurfaceID surface_id = 0;
int va_major = 0, va_minor = 0;

vaInitialize(va_dpy, &va_major, &va_minor);

// MFX_3DLUT_MEMORY_LAYOUT_INTEL_65LUT indicates 65*65*128*8 bytes.
mfxU32 seg_size = 65, mul_size = 128;

// create 3DLUT surface (MFX_3DLUT_MEMORY_LAYOUT_INTEL_65LUT)
VASurfaceAttrib surface_attrib = {};
surface_attrib.type = VASurfaceAttribPixelFormat;
surface_attrib.flags = VA_SURFACE_ATTRIB_SETTABLE;
surface_attrib.value.type = VAGenericValueTypeInteger;
surface_attrib.value.value.i = VA_FOURCC_RGBA;

vaCreateSurfaces(va_dpy,
                 VA_RT_FORMAT_RGB32,   // 4 bytes per pixel
                 seg_size * mul_size,  // width: 65*128
                 seg_size * 2,         // height: 65*2
                 &surface_id,
                 1,
                 &surface_attrib,
                 1);

// memId points to the storage that holds the VA surface ID
mfxMemId memId = &surface_id;

// configure 3DLUT parameters
mfxExtVPP3DLut lut3DConfig;
memset(&lut3DConfig, 0, sizeof(lut3DConfig));
lut3DConfig.Header.BufferId = MFX_EXTBUFF_VPP_3DLUT;
lut3DConfig.Header.BufferSz = sizeof(mfxExtVPP3DLut);
lut3DConfig.ChannelMapping = MFX_3DLUT_CHANNEL_MAPPING_RGB_RGB;
lut3DConfig.BufferType = MFX_RESOURCE_VA_SURFACE;
lut3DConfig.VideoBuffer.DataType = MFX_DATA_TYPE_U16;
lut3DConfig.VideoBuffer.MemLayout = MFX_3DLUT_MEMORY_LAYOUT_INTEL_65LUT;
lut3DConfig.VideoBuffer.MemId = memId;

// release the 3DLUT surface once video processing no longer uses it
vaDestroySurfaces(va_dpy, &surface_id, 1);
```
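The surface dimensions in the example are chosen so that the surface holds exactly the documented 65LUT size. The small C check below works through that arithmetic (the helper names are illustrative): an 8320 x 130 surface at 4 bytes per pixel is 4,326,400 bytes, the same as 65*65*128*8.

```c
#include <stdint.h>

/* Byte size documented for MFX_3DLUT_MEMORY_LAYOUT_INTEL_65LUT: 65*65*128*8. */
static uint64_t intel_65lut_bytes(void)
{
    return 65ull * 65 * 128 * 8;
}

/* Byte size of the RGBA surface created above:
   width = seg_size * mul_size pixels, height = seg_size * 2 rows, 4 bytes each. */
static uint64_t rgba_surface_bytes(uint32_t seg_size, uint32_t mul_size)
{
    uint64_t width  = (uint64_t)seg_size * mul_size;
    uint64_t height = (uint64_t)seg_size * 2;
    return width * height * 4;
}
```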

The following pseudo code shows how to create a 3DLUT in system memory by using mfx3DLutSystemBuffer.

```c
// 64-point 3DLUT (three-dimensional lookup table)
// The buffer size (in bytes) for every channel is 64*64*64*sizeof(DataType).
// static: three 512 KiB arrays are too large for many default thread stacks
static mfxU16 dataR[64*64*64], dataG[64*64*64], dataB[64*64*64];
// fill dataR, dataG, and dataB with the LUT values
mfxChannel channelR, channelG, channelB;
channelR.DataType = MFX_DATA_TYPE_U16;
channelR.Size = 64;
channelR.Data16 = dataR;
channelG.DataType = MFX_DATA_TYPE_U16;
channelG.Size = 64;
channelG.Data16 = dataG;
channelB.DataType = MFX_DATA_TYPE_U16;
channelB.Size = 64;
channelB.Data16 = dataB;

// configure 3DLUT parameters
mfxExtVPP3DLut lut3DConfig;
memset(&lut3DConfig, 0, sizeof(lut3DConfig));
lut3DConfig.Header.BufferId = MFX_EXTBUFF_VPP_3DLUT;
lut3DConfig.Header.BufferSz = sizeof(mfxExtVPP3DLut);
lut3DConfig.ChannelMapping = MFX_3DLUT_CHANNEL_MAPPING_RGB_RGB;
lut3DConfig.BufferType = MFX_RESOURCE_SYSTEM_SURFACE;
lut3DConfig.SystemBuffer.Channel[0] = channelR;
lut3DConfig.SystemBuffer.Channel[1] = channelG;
lut3DConfig.SystemBuffer.Channel[2] = channelB;
```
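The system-memory example leaves the contents of dataR, dataG, and dataB open. The following C sketch computes the per-channel size from the comment in that example (64*64*64*2 = 524,288 bytes for 16-bit data), allocates the channels on the heap, and fills an identity LUT that maps every color to itself. The helper names are illustrative, and the index order (R slowest, B fastest) is an assumption: check the mfx3DLutSystemBuffer reference for the layout Intel® VPL expects before relying on it.

```c
#include <stdint.h>
#include <stdlib.h>

/* Per-channel buffer size in bytes: size^3 entries of elem_bytes each. */
static size_t lut_channel_bytes(uint32_t size, size_t elem_bytes)
{
    return (size_t)size * size * size * elem_bytes;
}

/* Heap-allocate one zeroed 16-bit channel (caller frees it). */
static uint16_t *alloc_channel(uint32_t size)
{
    return (uint16_t *)calloc(lut_channel_bytes(size, sizeof(uint16_t)), 1);
}

/* Fill three 16-bit channels with an identity mapping (size must be >= 2).
   ASSUMPTION: idx = (r*size + g)*size + b; confirm the real layout. */
static void fill_identity_lut(uint16_t *r_ch, uint16_t *g_ch, uint16_t *b_ch,
                              uint32_t size)
{
    for (uint32_t r = 0; r < size; r++)
        for (uint32_t g = 0; g < size; g++)
            for (uint32_t b = 0; b < size; b++) {
                size_t idx = ((size_t)r * size + g) * size + b;
                /* scale grid position 0..size-1 to 0..65535 */
                r_ch[idx] = (uint16_t)(r * 65535u / (size - 1));
                g_ch[idx] = (uint16_t)(g * 65535u / (size - 1));
                b_ch[idx] = (uint16_t)(b * 65535u / (size - 1));
            }
}
```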

The following pseudo code shows how to specify the 3DLUT interpolation method (mfx3DLutInterpolationMethod).

```c
#ifdef ONEVPL_EXPERIMENTAL
// configure 3DLUT parameters
mfxExtVPP3DLut lut3DConfig;
memset(&lut3DConfig, 0, sizeof(lut3DConfig));
lut3DConfig.Header.BufferId = MFX_EXTBUFF_VPP_3DLUT;
lut3DConfig.Header.BufferSz = sizeof(mfxExtVPP3DLut);
lut3DConfig.ChannelMapping = MFX_3DLUT_CHANNEL_MAPPING_RGB_RGB;
lut3DConfig.BufferType = MFX_RESOURCE_SYSTEM_SURFACE;
lut3DConfig.InterpolationMethod = MFX_3DLUT_INTERPOLATION_TETRAHEDRAL;
#endif
```

HDR Tone Mapping

Intel® VPL video processing supports HDR tone mapping with Intel HW, covering HDR to SDR, SDR to HDR, and HDR to HDR conversion.

The following pseudo code shows HDR to SDR.

```c
// HDR to SDR (e.g. P010 HDR signal -> NV12 SDR signal) in a transcoding pipeline.
// Attach the input external buffers below for the HDR input. SDR is the default,
// so no extra output external buffer is needed.

// The input video signal information
mfxExtVideoSignalInfo inSignalInfo = {};
inSignalInfo.Header.BufferId = MFX_EXTBUFF_VIDEO_SIGNAL_INFO_IN;
inSignalInfo.Header.BufferSz = sizeof(mfxExtVideoSignalInfo);
inSignalInfo.VideoFullRange = 0;            // limited range P010
inSignalInfo.ColourPrimaries = 9;           // BT.2020
inSignalInfo.TransferCharacteristics = 16;  // ST2084

// The content light level information
mfxExtContentLightLevelInfo inContentLight = {};
inContentLight.Header.BufferId = MFX_EXTBUFF_CONTENT_LIGHT_LEVEL_INFO;
inContentLight.Header.BufferSz = sizeof(mfxExtContentLightLevelInfo);
inContentLight.MaxContentLightLevel = 4000;     // nits
inContentLight.MaxPicAverageLightLevel = 1000;  // nits

// The mastering display colour volume
mfxExtMasteringDisplayColourVolume inColourVolume = {};
inColourVolume.Header.BufferId = MFX_EXTBUFF_MASTERING_DISPLAY_COLOUR_VOLUME_IN;
inColourVolume.Header.BufferSz = sizeof(mfxExtMasteringDisplayColourVolume);
// Set DisplayPrimariesX/Y[3], WhitePointX/Y, MaxDisplayMasteringLuminance,
// and MinDisplayMasteringLuminance as needed.

mfxExtBuffer *ExtBufferIn[3];
ExtBufferIn[0] = (mfxExtBuffer *)&inSignalInfo;
ExtBufferIn[1] = (mfxExtBuffer *)&inContentLight;
ExtBufferIn[2] = (mfxExtBuffer *)&inColourVolume;

mfxSession session = (mfxSession)0;  // placeholder for an initialized session
mfxVideoParam VPPParams = {};
// VPPParams.vpp.In/Out (formats, sizes, frame rates) are set as usual (not shown)
VPPParams.NumExtParam = 3;
VPPParams.ExtParam = (mfxExtBuffer **)&ExtBufferIn[0];
MFXVideoVPP_Init(session, &VPPParams);
```

The following pseudo code shows SDR to HDR.

```c
// SDR to HDR (e.g. NV12 SDR signal -> P010 HDR signal) in a transcoding pipeline.
// Attach the output external buffers below for the HDR output. SDR is the default,
// so no extra input external buffer is needed.

// The output video signal information
mfxExtVideoSignalInfo outSignalInfo = {};
outSignalInfo.Header.BufferId = MFX_EXTBUFF_VIDEO_SIGNAL_INFO_OUT;
outSignalInfo.Header.BufferSz = sizeof(mfxExtVideoSignalInfo);
outSignalInfo.VideoFullRange = 0;            // limited range P010
outSignalInfo.ColourPrimaries = 9;           // BT.2020
outSignalInfo.TransferCharacteristics = 16;  // ST2084

// The mastering display colour volume
mfxExtMasteringDisplayColourVolume outColourVolume = {};
outColourVolume.Header.BufferId = MFX_EXTBUFF_MASTERING_DISPLAY_COLOUR_VOLUME_OUT;
outColourVolume.Header.BufferSz = sizeof(mfxExtMasteringDisplayColourVolume);
// Set DisplayPrimariesX/Y[3], WhitePointX/Y, MaxDisplayMasteringLuminance,
// and MinDisplayMasteringLuminance as needed.

mfxExtBuffer *ExtBufferOut[2];
ExtBufferOut[0] = (mfxExtBuffer *)&outSignalInfo;
ExtBufferOut[1] = (mfxExtBuffer *)&outColourVolume;  // index 1: the array has two entries

mfxSession session = (mfxSession)0;  // placeholder for an initialized session
mfxVideoParam VPPParams = {};
// VPPParams.vpp.In/Out (formats, sizes, frame rates) are set as usual (not shown)
VPPParams.NumExtParam = 2;
VPPParams.ExtParam = (mfxExtBuffer **)&ExtBufferOut[0];
MFXVideoVPP_Init(session, &VPPParams);
```

The following pseudo code shows HDR to HDR.

```c
// HDR to HDR (e.g. P010 HDR signal -> P010 HDR signal) in a transcoding pipeline.
// Attach the input and output external buffers below for HDR input and output.

// The input video signal information
mfxExtVideoSignalInfo inSignalInfo = {};
inSignalInfo.Header.BufferId = MFX_EXTBUFF_VIDEO_SIGNAL_INFO_IN;
inSignalInfo.Header.BufferSz = sizeof(mfxExtVideoSignalInfo);
inSignalInfo.VideoFullRange = 0;            // limited range P010
inSignalInfo.ColourPrimaries = 9;           // BT.2020
inSignalInfo.TransferCharacteristics = 16;  // ST2084

// The input content light level information
mfxExtContentLightLevelInfo inContentLight = {};
inContentLight.Header.BufferId = MFX_EXTBUFF_CONTENT_LIGHT_LEVEL_INFO;
inContentLight.Header.BufferSz = sizeof(mfxExtContentLightLevelInfo);
inContentLight.MaxContentLightLevel = 4000;     // nits
inContentLight.MaxPicAverageLightLevel = 1000;  // nits

// The input mastering display colour volume
mfxExtMasteringDisplayColourVolume inColourVolume = {};
inColourVolume.Header.BufferId = MFX_EXTBUFF_MASTERING_DISPLAY_COLOUR_VOLUME_IN;
inColourVolume.Header.BufferSz = sizeof(mfxExtMasteringDisplayColourVolume);
// Set DisplayPrimariesX/Y[3], WhitePointX/Y, MaxDisplayMasteringLuminance,
// and MinDisplayMasteringLuminance as needed.

// The output video signal information
mfxExtVideoSignalInfo outSignalInfo = {};
outSignalInfo.Header.BufferId = MFX_EXTBUFF_VIDEO_SIGNAL_INFO_OUT;
outSignalInfo.Header.BufferSz = sizeof(mfxExtVideoSignalInfo);
outSignalInfo.VideoFullRange = 0;            // limited range P010
outSignalInfo.ColourPrimaries = 9;           // BT.2020
outSignalInfo.TransferCharacteristics = 16;  // ST2084

// The output mastering display colour volume
mfxExtMasteringDisplayColourVolume outColourVolume = {};
outColourVolume.Header.BufferId = MFX_EXTBUFF_MASTERING_DISPLAY_COLOUR_VOLUME_OUT;
outColourVolume.Header.BufferSz = sizeof(mfxExtMasteringDisplayColourVolume);
// Set DisplayPrimariesX/Y[3], WhitePointX/Y, MaxDisplayMasteringLuminance,
// and MinDisplayMasteringLuminance as needed.

mfxExtBuffer *ExtBuffer[5];
ExtBuffer[0] = (mfxExtBuffer *)&inSignalInfo;
ExtBuffer[1] = (mfxExtBuffer *)&inContentLight;
ExtBuffer[2] = (mfxExtBuffer *)&inColourVolume;
ExtBuffer[3] = (mfxExtBuffer *)&outSignalInfo;
ExtBuffer[4] = (mfxExtBuffer *)&outColourVolume;

mfxSession session = (mfxSession)0;  // placeholder for an initialized session
mfxVideoParam VPPParams = {};
// VPPParams.vpp.In/Out (formats, sizes, frame rates) are set as usual (not shown)
VPPParams.NumExtParam = 5;
VPPParams.ExtParam = (mfxExtBuffer **)&ExtBuffer[0];
MFXVideoVPP_Init(session, &VPPParams);
```

Camera RAW acceleration

Intel® VPL supports camera RAW format processing with Intel HW. For pipeline processing initialization, the application needs to attach the camera structures to the mfxVideoParam structure and call the MFXVideoVPP_Init() function.

The following pseudo code shows hardware-accelerated camera RAW processing.

```c
#ifdef ONEVPL_EXPERIMENTAL
// Camera RAW format
mfxExtCamPipeControl pipeControl = {};
pipeControl.Header.BufferId = MFX_EXTBUF_CAM_PIPECONTROL;
pipeControl.Header.BufferSz = sizeof(mfxExtCamPipeControl);
pipeControl.RawFormat = (mfxU16)MFX_CAM_BAYER_BGGR;

// Black level correction
mfxExtCamBlackLevelCorrection blackLevelCorrection = {};
blackLevelCorrection.Header.BufferId = MFX_EXTBUF_CAM_BLACK_LEVEL_CORRECTION;
blackLevelCorrection.Header.BufferSz = sizeof(mfxExtCamBlackLevelCorrection);
mfxU16 black_level_B = 16, black_level_G0 = 16, black_level_G1 = 16, black_level_R = 16;
// Initialize the values for black level B, G0, G1, and R as needed
blackLevelCorrection.B = black_level_B;
blackLevelCorrection.G0 = black_level_G0;
blackLevelCorrection.G1 = black_level_G1;
blackLevelCorrection.R = black_level_R;

mfxExtBuffer *ExtBufferIn[2];
ExtBufferIn[0] = (mfxExtBuffer *)&pipeControl;
ExtBufferIn[1] = (mfxExtBuffer *)&blackLevelCorrection;

mfxSession session = (mfxSession)0;  // placeholder for an initialized session
mfxVideoParam VPPParams = {};
VPPParams.NumExtParam = 2;
VPPParams.ExtParam = (mfxExtBuffer **)&ExtBufferIn[0];
MFXVideoVPP_Init(session, &VPPParams);
#endif
```
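To show what the per-channel values in mfxExtCamBlackLevelCorrection represent, the following C sketch applies a generic black-level subtraction to a BGGR Bayer mosaic in software. It is an illustration, not the Intel® VPL implementation: the function name is hypothetical, assigning G0 to the green samples on blue rows and G1 to those on red rows is an assumption, and the hardware may clamp or rescale differently.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical software black-level subtraction on a BGGR mosaic.
   BGGR 2x2 tile: row 0 = B G0, row 1 = G1 R (G0/G1 placement assumed). */
static void subtract_black_level_bggr(uint16_t *raw, uint32_t width,
                                      uint32_t height, uint16_t b,
                                      uint16_t g0, uint16_t g1, uint16_t r)
{
    for (uint32_t y = 0; y < height; y++) {
        for (uint32_t x = 0; x < width; x++) {
            uint16_t level;
            if ((y & 1) == 0)
                level = (x & 1) == 0 ? b : g0;
            else
                level = (x & 1) == 0 ? g1 : r;
            uint16_t *px = &raw[(size_t)y * width + x];
            *px = *px > level ? (uint16_t)(*px - level) : 0;   /* clamp at 0 */
        }
    }
}
```

With levels B=1, G0=2, G1=3, R=4, a 2 x 2 tile of value 10 becomes 9, 8, 7, 6; samples below their black level clamp to 0.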

AI Powered Video Processing

Intel® VPL supports AI-based super resolution and AI frame interpolation with Intel HW.

The following pseudo code shows AI super resolution video processing.

```c
// configure AI super resolution vpp filter
mfxExtVPPAISuperResolution aiSuperResolution = {};
aiSuperResolution.Header.BufferId = MFX_EXTBUFF_VPP_AI_SUPER_RESOLUTION;
aiSuperResolution.Header.BufferSz = sizeof(mfxExtVPPAISuperResolution);
aiSuperResolution.SRMode = MFX_AI_SUPER_RESOLUTION_MODE_DEFAULT;

mfxExtBuffer * ExtParam[1] = { (mfxExtBuffer *)&aiSuperResolution };

mfxSession session = (mfxSession)0;  // placeholder for an initialized session
mfxVideoParam VPPParams = {};
VPPParams.NumExtParam = 1;
VPPParams.ExtParam = ExtParam;
MFXVideoVPP_Init(session, &VPPParams);
```

The following pseudo code shows AI frame interpolation video processing.

```c
// configure AI frame interpolation vpp filter
mfxExtVPPAIFrameInterpolation aiFrameInterpolation = {};
aiFrameInterpolation.Header.BufferId = MFX_EXTBUFF_VPP_AI_FRAME_INTERPOLATION;
aiFrameInterpolation.Header.BufferSz = sizeof(mfxExtVPPAIFrameInterpolation);
aiFrameInterpolation.FIMode = MFX_AI_FRAME_INTERPOLATION_MODE_DEFAULT;
aiFrameInterpolation.EnableScd = 1;
mfxExtBuffer * ExtParam[1] = { (mfxExtBuffer *)&aiFrameInterpolation };

// init_param also carries the usual formats, sizes, and IOPattern (not shown)
mfxFrameAllocRequest response[2];   // [0]: input surfaces, [1]: output surfaces
mfxSyncPoint syncp;
mfxStatus sts;

init_param.NumExtParam = 1;
init_param.ExtParam = ExtParam;
// 30 fps in, 60 fps out: the output frame rate is doubled
init_param.vpp.In.FrameRateExtN = 30;
init_param.vpp.In.FrameRateExtD = 1;
init_param.vpp.Out.FrameRateExtN = 60;
init_param.vpp.Out.FrameRateExtD = 1;
sts = MFXVideoVPP_QueryIOSurf(session, &init_param, response);
sts = MFXVideoVPP_Init(session, &init_param);

// The code below follows the video processing procedure and is not specific
// to AI frame interpolation.
allocate_pool_of_surfaces(in_pool, response[0].NumFrameSuggested);
allocate_pool_of_surfaces(out_pool, response[1].NumFrameSuggested);
mfxFrameSurface1 *in=find_unlocked_surface_and_fill_content(in_pool);
mfxFrameSurface1 *out=find_unlocked_surface_from_the_pool(out_pool);
for (;;) {
    sts=MFXVideoVPP_RunFrameVPPAsync(session,in,out,NULL,&syncp);
    if (sts==MFX_ERR_MORE_SURFACE || sts==MFX_ERR_NONE) {
        MFXVideoCORE_SyncOperation(session,syncp,INFINITE);
        process_output_frame(out);
        out=find_unlocked_surface_from_the_pool(out_pool);
    }
    if (sts==MFX_ERR_MORE_DATA && in==NULL)
        break;
    if (sts==MFX_ERR_NONE || sts==MFX_ERR_MORE_DATA) {
        in=find_unlocked_surface_from_the_pool(in_pool);
        fill_content_for_video_processing(in);
        if (end_of_stream())
            in=NULL;
    }
}
MFXVideoVPP_Close(session);
free_pool_of_surfaces(in_pool);
free_pool_of_surfaces(out_pool);
```
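The frame rates in the AI frame interpolation example determine which scenario of the video processing procedure applies. The following C sketch reduces (OutN/OutD) / (InN/InD) to the number of output frames per input frame; gcd_u64() and frames_out_per_in() are illustrative helpers, not library functions. For 30 to 60 fps it gives 2 outputs per input, the case where fewer frames are consumed than generated, so MFXVideoVPP_RunFrameVPPAsync() would be expected to return mfxStatus::MFX_ERR_MORE_SURFACE before each new input is requested.

```c
#include <stdint.h>

/* Greatest common divisor (Euclid); a and b must not both be 0. */
static uint64_t gcd_u64(uint64_t a, uint64_t b)
{
    while (b != 0) {
        uint64_t t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* Output frames per input frame as a reduced fraction
   out_frames/in_frames = (out_n/out_d) / (in_n/in_d). All inputs nonzero. */
static void frames_out_per_in(uint32_t in_n, uint32_t in_d,
                              uint32_t out_n, uint32_t out_d,
                              uint64_t *out_frames, uint64_t *in_frames)
{
    uint64_t num = (uint64_t)out_n * in_d;
    uint64_t den = (uint64_t)out_d * in_n;
    uint64_t g = gcd_u64(num, den);
    *out_frames = num / g;
    *in_frames = den / g;
}
```

For example, 24000/1001 to 60000/1001 fps reduces to 5 outputs for every 2 inputs, and 60 to 30 fps to 1 output for every 2 inputs (the MFX_ERR_MORE_DATA case).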