Quick Get Started

Intel® Extension for TensorFlow* is a heterogeneous, high-performance deep learning extension plugin based on the TensorFlow PluggableDevice interface. It brings Intel CPU and GPU devices into the TensorFlow open source community for AI workload acceleration, letting users plug an XPU into TensorFlow on demand and expose the computing power of Intel hardware.

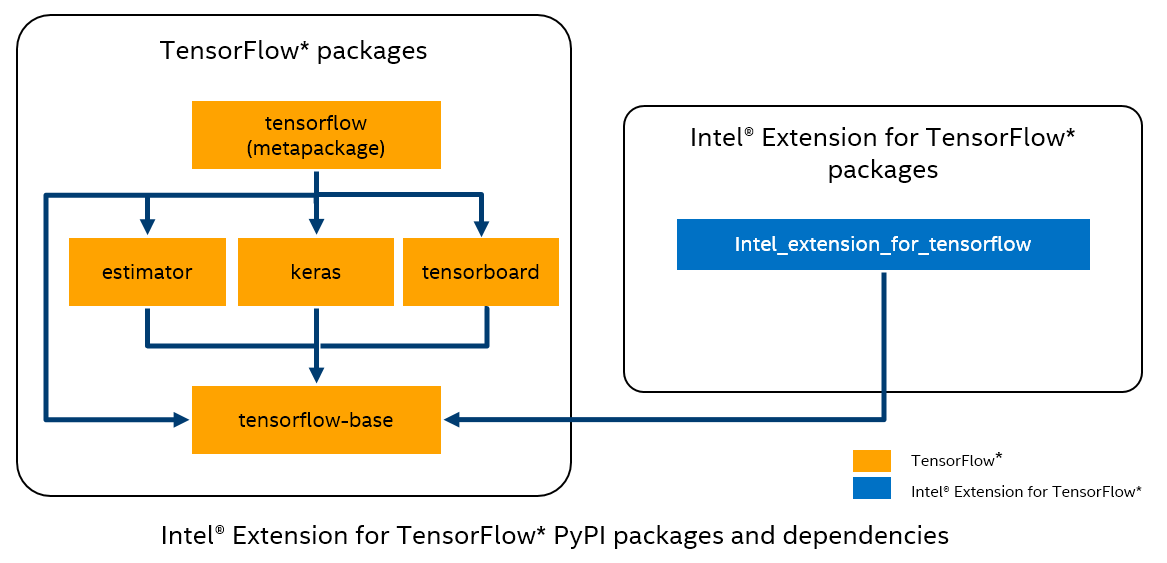

This diagram provides a summary of the TensorFlow* PyPI package ecosystem.

- TensorFlow PyPI packages: estimator, keras, tensorboard, tensorflow-base
- Intel® Extension for TensorFlow* package:
  - `intel_extension_for_tensorflow` contains:
    - XPU-specific implementation
      - kernels & operators
      - graph optimizer
      - device runtime
    - XPU configuration management
      - XPU backend selection
      - options for turning advanced features on/off

Install

Hardware Requirement

Intel® Extension for TensorFlow* provides Intel GPU support and experimental Intel CPU support.

Software Requirement

| Package | CPU | GPU | Installation |
|---|---|---|---|
| Intel GPU driver |  | Y | Install Intel GPU driver |
| Intel® oneAPI Base Toolkit |  | Y | Install Intel® oneAPI Base Toolkit |
| TensorFlow | Y | Y | Install TensorFlow 2.12.0 |

Installation Channels

Intel® Extension for TensorFlow* can be installed from the following channels:

- DockerHub: GPU Container / CPU Container
- Source: Build from source

Compatibility Table

| Intel® Extension for TensorFlow* | Stock TensorFlow |
|---|---|
| v1.2.0 | 2.12 |
| v1.1.0 | 2.10 & 2.11 |
| v1.0.0 | 2.10 |
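As a minimal sketch, the compatibility table can be encoded as data and checked against the installed packages using only the standard library. The `COMPATIBILITY` mapping and the helper names are hypothetical and not shipped with Intel® Extension for TensorFlow*; the sketch also assumes stock TensorFlow is installed under the PyPI name `tensorflow` (a differently named wheel would be reported as not found).

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# The compatibility table, encoded as data (hypothetical helper, not part of
# the extension): ITEX release -> stock TensorFlow "major.minor" versions.
COMPATIBILITY = {
    "1.2.0": ("2.12",),
    "1.1.0": ("2.10", "2.11"),
    "1.0.0": ("2.10",),
}


def tf_minor(tf_version: str) -> str:
    """Reduce a full version string such as '2.12.0' to '2.12'."""
    return ".".join(tf_version.split(".")[:2])


def is_compatible(itex_version: str, tf_version: str) -> bool:
    """Return True if the table pairs this ITEX release with this TensorFlow."""
    # Accept both "1.2.0" and the table's "v1.2.0" spelling.
    supported = COMPATIBILITY.get(itex_version.lstrip("v"))
    if supported is None:
        raise ValueError(f"ITEX {itex_version} is not in the compatibility table")
    return tf_minor(tf_version) in supported


def check_installed() -> Optional[bool]:
    """Check the installed pair; return None if either package is not found."""
    try:
        # Assumption: stock TensorFlow is installed as the "tensorflow" wheel.
        itex_version = version("intel-extension-for-tensorflow")
        tf_version = version("tensorflow")
    except PackageNotFoundError:
        return None
    return is_compatible(itex_version, tf_version)


if __name__ == "__main__":
    print(is_compatible("v1.1.0", "2.11.0"))  # True: v1.1.0 supports 2.10 & 2.11
    print(check_installed())
```

Comparing only `major.minor` mirrors the table, which lists TensorFlow minor releases rather than patch versions.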

Install for GPU

```bash
pip install tensorflow==2.12
pip install intel-extension-for-tensorflow[gpu]==1.2.0
```

Refer to GPU installation for details.

Install for CPU [Experimental]

```bash
pip install tensorflow==2.12
pip install intel-extension-for-tensorflow[cpu]==1.2.0
```

Run a sanity check:

```bash
python -c "import intel_extension_for_tensorflow as itex; print(itex.__version__)"
```
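The one-liner prints only the version. A slightly broader check, sketched below, also lists the physical devices TensorFlow can see, so you can confirm that the plugin device appears. The `sanity_check` function is hypothetical, and the device type name that Intel GPUs show up under (for example `XPU`) is an assumption that may differ between releases and backends, so the sketch lists devices of every type instead of filtering on one name.

```python
import importlib


def sanity_check() -> dict:
    """Import ITEX and TensorFlow and summarize what is visible.

    Returns a dict instead of raising, so the result can be logged or
    asserted on in CI even when the packages are missing.
    """
    report = {"itex_version": None, "devices": [], "error": None}
    try:
        itex = importlib.import_module("intel_extension_for_tensorflow")
        tf = importlib.import_module("tensorflow")
    except Exception as exc:  # e.g. ModuleNotFoundError or a plugin load failure
        report["error"] = f"{type(exc).__name__}: {exc}"
        return report
    report["itex_version"] = itex.__version__
    # list_physical_devices() with no argument returns devices of every type;
    # the type name used for Intel GPUs (e.g. "XPU") is an assumption here.
    report["devices"] = [
        (d.name, d.device_type) for d in tf.config.list_physical_devices()
    ]
    return report


if __name__ == "__main__":
    print(sanity_check())
```

Returning a report rather than raising keeps the check usable in scripts that need to distinguish "not installed" from "installed but no accelerator visible".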

Resources

Support

Submit your questions, feature requests, and bug reports on the GitHub issues page.