PCIe Modeling Library

The Simics PCIe modeling library supports the PCI Express Base Specification up to Revision 6.0. Some of the features it supports with little or no modeling effort are:

- Sending and receiving PCIe Messages

- Upstream and downstream I/O and Memory operations

- Legacy PCI Interrupts

- Message Signalled Interrupts (MSI)

- Message Signalled Interrupts X (MSI-X)

- Resizable BARs (RBAR)

- Virtual Function Resizable BARs (VFRBAR)

- Single Root I/O Virtualization (SR-IOV)

- Address Translation Service (ATS)

PCIe is simulated in Simics on a functional level, which means that models typically operate slightly above the Transaction Layer. Simics does not simulate individual TLPs; instead, it simulates the relevant parts of each TLP and conveys that information along the route to the target destination.

A Transaction Layer Packet (TLP) in PCIe comes in four different types: Config, Memory, I/O, and Message. In Simics, all of these are issued using the transaction_t data structure. The various pieces of information required to complete an operation (PASID, Requester ID, etc.) are attached to the transaction_t as atoms.

To create a PCIe endpoint:

- Import "pcie/common.dml".

- Add the template pcie_endpoint to the top level of your device code.

- Set init_val for the registers device_id and vendor_id in the pcie_config bank.

- If your endpoint defines optional capabilities, set the init_val of the capabilities_ptr register and add capability templates for the capabilities present.

- Create one or several banks that implement the application logic present in your endpoint. These will be mapped by the Base Address Register(s) created in the next step.

- Add the Base Address Registers present in your endpoint, using either the memory_base_address_64 or the memory_base_address_32 template. Map in the application logic by setting the map_obj parameter to the banks you created in the previous step, and size the BAR by setting the size_bits parameter in the Base Address Register.
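
Judging by the comments in the examples (4 KB for size_bits = 12, 32 MB for size_bits = 25), size_bits is the base-2 logarithm of the BAR size. The plain C sketch below shows that arithmetic together with the general PCI rule that a BAR's base address is naturally aligned to its size. The helper names bar_size and bar_base_is_aligned are illustrative only and are not part of the library.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Size in bytes of a BAR declared with the given size_bits (2^size_bits). */
static uint64_t bar_size(unsigned size_bits)
{
    assert(size_bits < 64);
    return UINT64_C(1) << size_bits;
}

/* PCI requires a BAR's base address to be naturally aligned to its size,
   i.e. the low size_bits bits of the base address must be zero. */
static bool bar_base_is_aligned(uint64_t base, unsigned size_bits)
{
    return (base & (bar_size(size_bits) - 1)) == 0;
}
```

For example, bar_size(25) is 33554432 bytes (32 MB), and such a BAR can be placed at 0x80000000 but not at 0x80001000.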

Figure 1. Simple Endpoint

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    register vendor_id { param init_val = 0x8086; }
    register device_id { param init_val = 0x4042; }

    register bar0 @ 0x10 is memory_base_address_64 {
        // 4 KB BAR allocation
        param size_bits = 12;
        param map_obj = app0.obj;
    }

    register bar2 @ 0x18 is memory_base_address_64 {
        // 32 MB BAR allocation
        param size_bits = 25;
        param map_obj = app2.obj;
    }

    register capabilities_ptr {
        // points to the base address of the first capability
        param init_val = 0x40;
    }

    group ssid is ssid_capability {
        param base = 0x40;
        param next_ptr = 0x60;
        register ssvid { param init_val = 0x8086; }
    }

    group msi is msi_capability {
        param base = 0x60;
        param next_ptr = 0x0;
        param is_64bit_capable = true;
        param is_pvm_capable = true;
        param is_emd_capable = true;
        param num_vectors = 2;
    }
}

bank app0 {
    // defines application logic tied to BAR0
    register intr size 1 @ 0x0 is write {
        method write(uint64 value) {
            pcie_config.msi.raise(0); // raise MSI vector 0 on write
        }
    }
}

bank app2 {
    // application logic tied to BAR2 goes here
}

To create a Multi-Function PCIe endpoint:

- Import "pcie/common.dml".

- Add the template pcie_multifunction_endpoint to the top level of your device code.

- Define one bank for each function in your endpoint, using the template type_0_bank.

- For each bank, apply the appropriate steps from the Endpoint section.

Figure 2. Simple Multi-Function Endpoint

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_multifunction_endpoint;

bank f0 is type_0_bank {
    param function = 0;

    register vendor_id { param init_val = 0x8086; }
    register device_id { param init_val = 0x4042; }

    register bar0 @ 0x10 is memory_base_address_64 {
        // 32 MB BAR allocation
        param size_bits = 25;
        param map_obj = app0.obj;
    }

    register capabilities_ptr { param init_val = 0x40; }

    group ssid is ssid_capability {
        param base = 0x40;
        param next_ptr = 0x60;
        register ssvid { param init_val = 0x8086; }
    }

    group msi is msi_capability {
        param base = 0x60;
        param next_ptr = 0x0;
        param is_64bit_capable = true;
        param is_pvm_capable = true;
        param is_emd_capable = true;
        param num_vectors = 2;
    }
}

bank f1 is type_0_bank {
    param function = 1;

    register vendor_id { param init_val = 0x8086; }
    register device_id { param init_val = 0x4043; }

    register bar0 @ 0x10 is memory_base_address_64 {
        // 16 MB BAR allocation
        param size_bits = 24;
        param map_obj = app2.obj;
    }
}

bank app0 {
    // defines application logic tied to f0.BAR0
}

bank app2 {
    // application logic tied to f1.BAR0 goes here
}

To create a PCIe Switch:

- Import "pcie/common.dml".

- Create subdevices for your upstream and downstream ports, adding the template pcie_downstream_port to the downstream ports and pcie_upstream_port to the upstream port.

- Connect the downstream ports to the upstream port.

- Set init_val for the registers device_id and vendor_id in the pcie_config bank of each subdevice.

- If your ports have additional capabilities, set the init_val of the capabilities_ptr register and add capability templates for the capabilities present.

- The prefetchable memory is 64-bit by default; to make it 32-bit, override the field cap in the registers pcie_config.prefetchable.base and pcie_config.prefetchable.limit.

- If your switch implements application logic:

  - Add, for example, banks that implement the registers that control this application logic.

  - Add Base Address Registers using either the memory_base_address_64 or the memory_base_address_32 template. Map in the application logic by setting the map_obj parameter to the banks you created in the previous step, and size the BAR by setting the size_bits parameter in the Base Address Register.

Here is a simple Switch example with one upstream port and three downstream ports. The upstream port has the MSI-X capability and built-in application logic tied to BAR0:

Figure 3. Simple Switch

dml 1.4;

device pcie_switch;

import "pcie/common.dml";

subdevice usp "Upstream Port" {
    is pcie_upstream_port;

    bank pcie_config {
        register vendor_id { param init_val = 0x8086; }
        register device_id { param init_val = 0x4042; }

        register bar0 @ 0x10 is memory_base_address_64 {
            // 8 MB BAR allocation
            param size_bits = 23;
            param map_obj = app0.obj;
        }

        register capabilities_ptr { param init_val = 0x40; }

        group msix is msix_capability {
            param base = 0x40;
            param next_ptr = 0x0;
            param num_vectors = 32;
            param table_offset_bir = 0x1000;
            param pba_offset_bir = 0x5000;
            param data_bank = msix_data;
        }
    }
}

subdevice dsp[i < 3] "Downstream Port" {
    is pcie_downstream_port;
    is post_init;

    method post_init() {
        // connect this port to the internal bus of the upstream port
        // the second argument is the DeviceID, i.e. bus/device/function
        // where the device-number is bits 7:3; each port gets its own
        // device number (0, 1 and 2)
        pcie_device.connected(usp.downstream_port.obj, i << 3);
    }

    bank pcie_config {
        register vendor_id { param init_val = 0x8086; }
        register device_id { param init_val = 0x4043; }
    }
}

bank app0 {
    // application logic tied to BAR0 in the upstream port goes here
}

bank msix_data is msix_table; // storage for MSI-X table and PBA
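
The DeviceID passed as the second argument to pcie_device.connected() in these examples packs the bus, device and function numbers into 16 bits, using the same layout as a PCIe Routing ID: bus number in bits 15:8, device number in bits 7:3 and function number in bits 2:0. So 1 << 3 means device 1, function 0. The plain C sketch below shows the packing; make_device_id and the id_* accessors are hypothetical helpers, not library functions.

```c
#include <assert.h>
#include <stdint.h>

/* Pack bus/device/function into a 16-bit DeviceID (Routing ID layout):
   bus in bits [15:8], device in bits [7:3], function in bits [2:0]. */
static uint16_t make_device_id(unsigned bus, unsigned device, unsigned function)
{
    assert(bus < 256 && device < 32 && function < 8);
    return (uint16_t)((bus << 8) | (device << 3) | function);
}

/* Unpack the individual fields again. */
static unsigned id_bus(uint16_t id)      { return id >> 8; }
static unsigned id_device(uint16_t id)   { return (id >> 3) & 0x1f; }
static unsigned id_function(uint16_t id) { return id & 0x7; }
```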

To create a PCIe Root Complex:

- Import "pcie/common.dml".

- Apply the pcie_bridge template to the top level of your device code.

- Create subdevices for your downstream ports, applying the template pcie_root_port to all of them.

- Connect the downstream ports to the downstream_port object created by the pcie_bridge template.

- Set init_val for the registers device_id and vendor_id in the pcie_config bank of each downstream port.

- If your ports have additional capabilities, set the init_val of the capabilities_ptr register and add capability templates for the capabilities present.

- The prefetchable memory is 64-bit by default; to make it 32-bit, override the field cap in the registers pcie_config.prefetchable.base and pcie_config.prefetchable.limit.

- If your Root Complex has integrated endpoints (RCiEPs), you can implement these as subdevices in the RC or as external devices. In either case, they need to be connected to the downstream_port of the RC.

Here is an example root complex with one root port and two integrated endpoints, one implemented directly in the code and one created as a subobject using another class:

Figure 4. Simple Root Complex

dml 1.4;

device root_complex;

param use_io_memory = true;

import "pcie/common.dml";

is pcie_bridge;

subdevice rp "PCIe Root Port" {
    is pcie_root_port;
    is post_init;

    method post_init() {
        // connect this port to the internal bus of the RC
        // the second argument is the DeviceID, i.e. bus/device/function
        // where the device-number is bits 7:3
        pcie_device.connected(dev.downstream_port.obj, 0);
    }

    bank pcie_config {
        register vendor_id { param init_val = 0x8086; }
        register device_id { param init_val = 0x4043; }
        register class_code { param init_val = 0x20000; }
    }
}

subdevice iep_A "Integrated Endpoint A" {
    is pcie_endpoint;
    is hreset;
    is post_init;

    method post_init() {
        // connect this integrated endpoint to the internal bus of the RC
        // the second argument is the DeviceID, i.e. bus/device/function
        // where the device-number is bits 7:3
        pcie_device.connected(dev.downstream_port.obj, 1 << 3);
    }

    bank pcie_config {
        register vendor_id { param init_val = 0x8086; }
        register device_id { param init_val = 0x4044; }

        register bar0 @ 0x10 is memory_base_address_64 {
            param size_bits = 12;
            param map_obj = app0.obj;
        }

        register capabilities_ptr { param init_val = 0x40; }

        group msix is msix_capability {
            param base = 0x40;
            param next_ptr = 0x0;
            param num_vectors = 32;
            param table_offset_bir = 0x1000;
            param pba_offset_bir = 0x5000;
            param data_bank = msix_data;
        }
    }

    bank app0 {
        // application logic tied to BAR0 in the integrated endpoint goes here
    }

    bank msix_data is msix_table; // storage for MSI-X table and PBA
}

connect iep_B "Integrated Endpoint B" {
    is post_init;
    is init_as_subobj;
    param classname = "some_class";
    interface pcie_device;

    method post_init() {
        // connect this integrated endpoint to the internal bus of the RC
        // the second argument is the DeviceID, i.e. bus/device/function
        // where the device-number is bits 7:3
        pcie_device.connected(dev.downstream_port.obj, 2 << 3);
    }
}

There are some sample PCIe devices distributed in Simics base. Currently, these include sample-pcie-device, which is a simple PCIe device with some capabilities and a memory BAR-mapped register bank. There is also the standard-pcie-switch, which has a component wrapper, standard-pcie-switch-comp. It is a switch with 4 downstream ports, and all of its ports implement 3 capabilities. This switch can be used in any platform that supports PCIe to expand the PCIe hierarchy.

This is a high-level description of how PCIe is implemented in Simics. If you write your device in DML, most of the details below are automatically handled by the DML templates.

The Simics PCIe modeling framework provides the ability to model

- Endpoints

- Multifunction Endpoints

- Root Complexes

- Switches

- Bridges

A PCIe device must implement the pcie_device interface. This interface is used to indicate when the device is connected or disconnected, and to signal a hot reset.

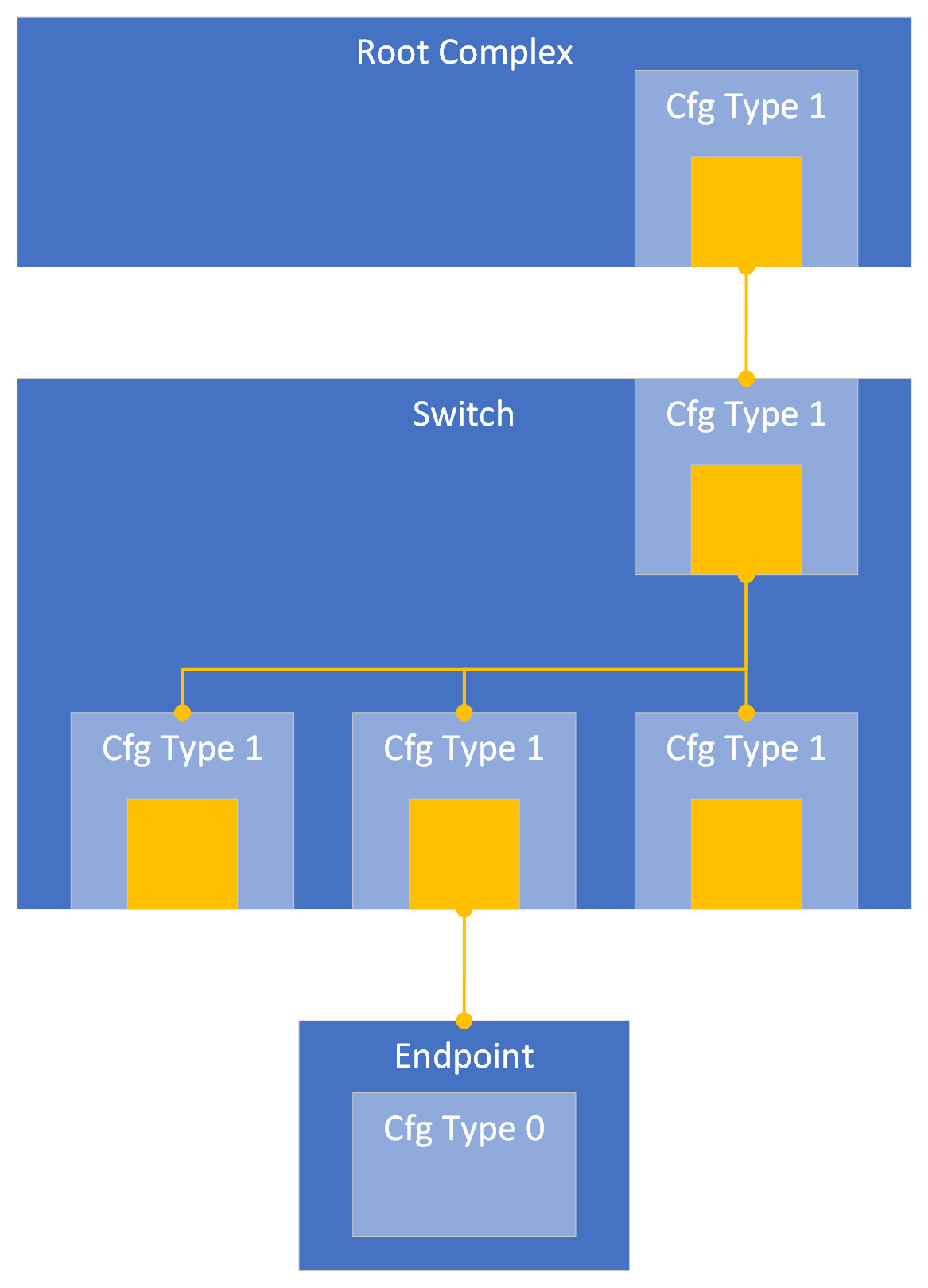

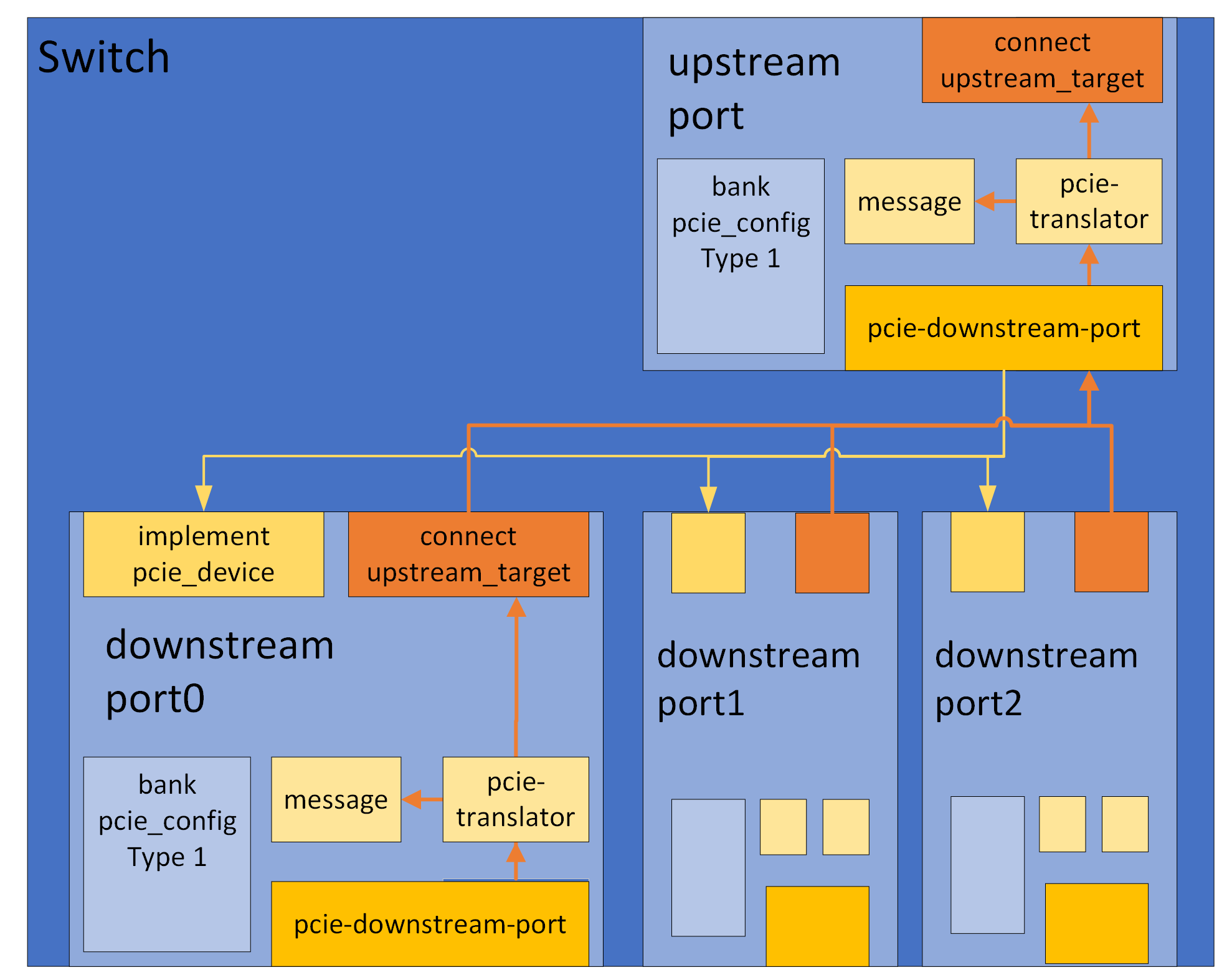

An RC, a Switch, or any other bridge must use helper objects of the class pcie-downstream-port to simulate its downstream port(s). Each downstream-facing port, i.e. each Virtual PCIe-PCIe bridge in the RC or Switch (represented by a Type 1 Configuration Header), should have a pcie-downstream-port. Each downstream port can connect to one or several (external) PCIe devices. The upstream-facing port of a Switch should have a pcie-downstream-port with the (internal) Virtual PCIe-PCIe bridge(s) connected as devices. The image below illustrates a sample PCIe hierarchy in Simics; the yellow boxes represent pcie-downstream-ports.

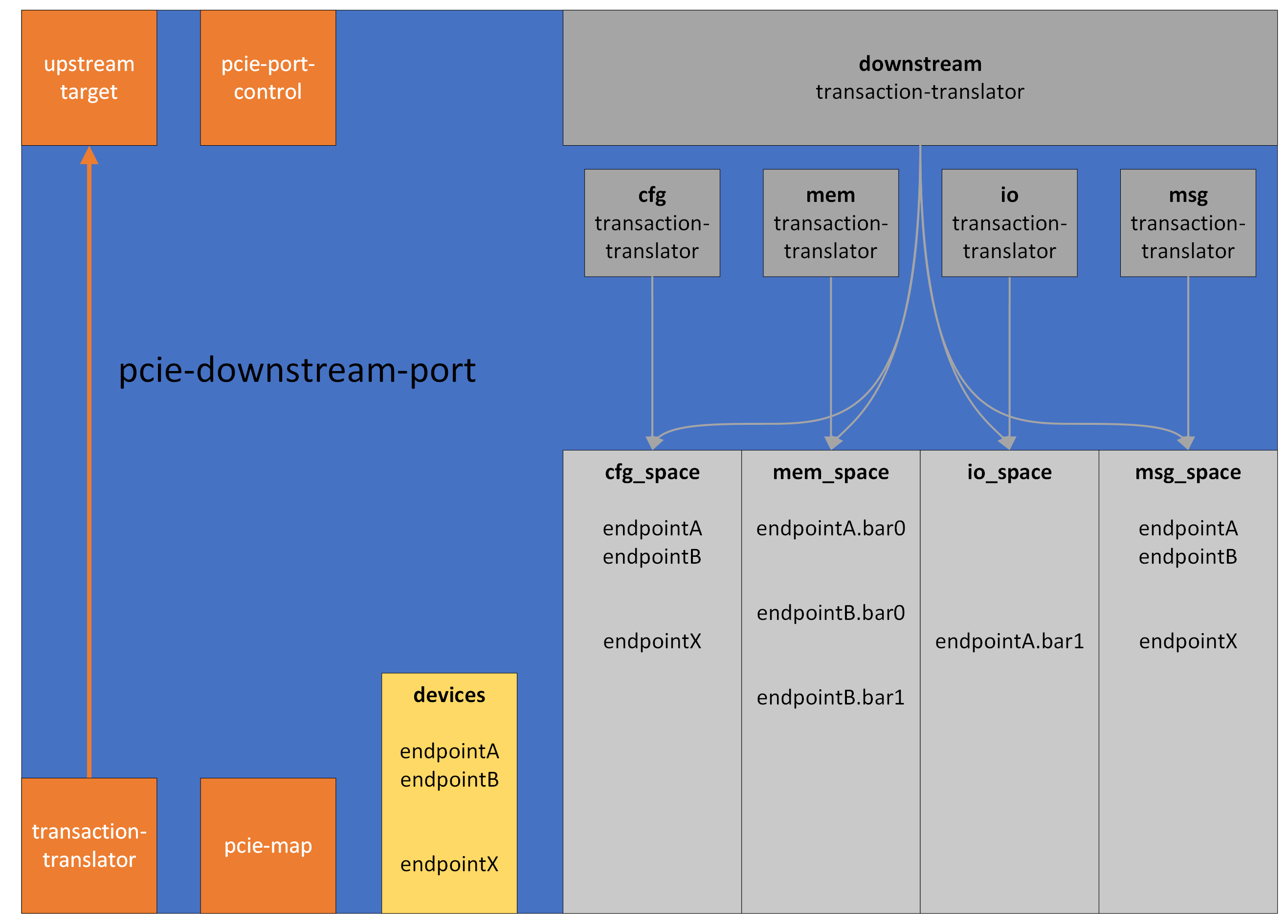

The pcie-downstream-port routes messages and manages the Config, I/O and Memory address spaces for its connected downstream devices. It has a translator-port named downstream, which receives downstream transactions and redirects them to the downstream devices connected to it. There are also specialized translator-ports cfg, msg, io, and mem, which can be used to send transactions of their specific type.

The pcie-downstream-port implements the interface pcie_port_control, which the owner of the port uses to configure it and to signal hot reset. The pcie-downstream-port also implements the pcie_map interface, which the Endpoints below it use to claim ranges in the downstream address space (e.g. Memory and I/O BARs) and to add (virtual) functions.

Endpoints issue upstream transactions through the pcie-downstream-port object of the RC/Switch to which they are connected. The pcie-downstream-port acts as a translator and directs all upstream transactions to its upstream_target, typically the host memory in the case of an RC, or the upstream target in the case of a Switch.

Endpoints must add their functions and map other resources such as Memory and I/O BARs (as configured in the relevant registers of the Type 0 Configuration Header). This is done through the pcie_map interface of the pcie-downstream-port object to which they are connected.

Switches have an upstream port which is connected to the pcie-downstream-port of either a Root Complex or another Switch. As the downstream port(s) of the Switch are configured, they must map the resources of these ports in the pcie-downstream-port of the RC/Switch to which they are connected. These resources include the Message and Configuration range bounded by the secondary and subordinate bus number registers, as well as the Memory and I/O ranges bounded by the base and limit registers.
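
For reference, the decoding of those ranges follows the Type 1 header layout in the PCIe specification: a configuration request is claimed when its bus number lies between the secondary and subordinate bus numbers, and the 16-bit Memory Base and Memory Limit registers hold address bits 31:20 in their bits 15:4, giving a window with 1 MB granularity. The C sketch below illustrates only this decoding, with hypothetical helper names; in a DML model, the type_1_bank template and the pcie-downstream-port do the actual mapping.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Type 1 Memory Base register: bits [15:4] hold address bits [31:20];
   bits [3:0] are read-only zero. The window starts 1 MB aligned. */
static uint32_t mem_window_base(uint16_t base_reg)
{
    assert((base_reg & 0xf) == 0);
    return (uint32_t)base_reg << 16;
}

/* Memory Limit register: same layout, but the implied low 20 address bits
   are all ones, so the limit is the last byte of a 1 MB block. */
static uint32_t mem_window_limit(uint16_t limit_reg)
{
    assert((limit_reg & 0xf) == 0);
    return ((uint32_t)limit_reg << 16) | 0xfffffu;
}

/* True if a memory request to addr falls inside the bridge's window.
   A base above the limit means the window is disabled. */
static bool forwards_mem(uint16_t base_reg, uint16_t limit_reg, uint32_t addr)
{
    uint32_t base = mem_window_base(base_reg);
    uint32_t limit = mem_window_limit(limit_reg);
    return base <= limit && addr >= base && addr <= limit;
}

/* True if a configuration request targeting bus is claimed by this port,
   i.e. secondary <= bus <= subordinate. */
static bool forwards_cfg(uint8_t secondary, uint8_t subordinate, uint8_t bus)
{
    return bus >= secondary && bus <= subordinate;
}
```

With Memory Base 0xE000 and Memory Limit 0xE010, the window covers 0xE0000000 to 0xE01FFFFF.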

Simics provides a set of DML templates to assist in writing models for PCIe-compliant devices. The templates are available in [simics]/src/devices/dml-lib/pcie/.

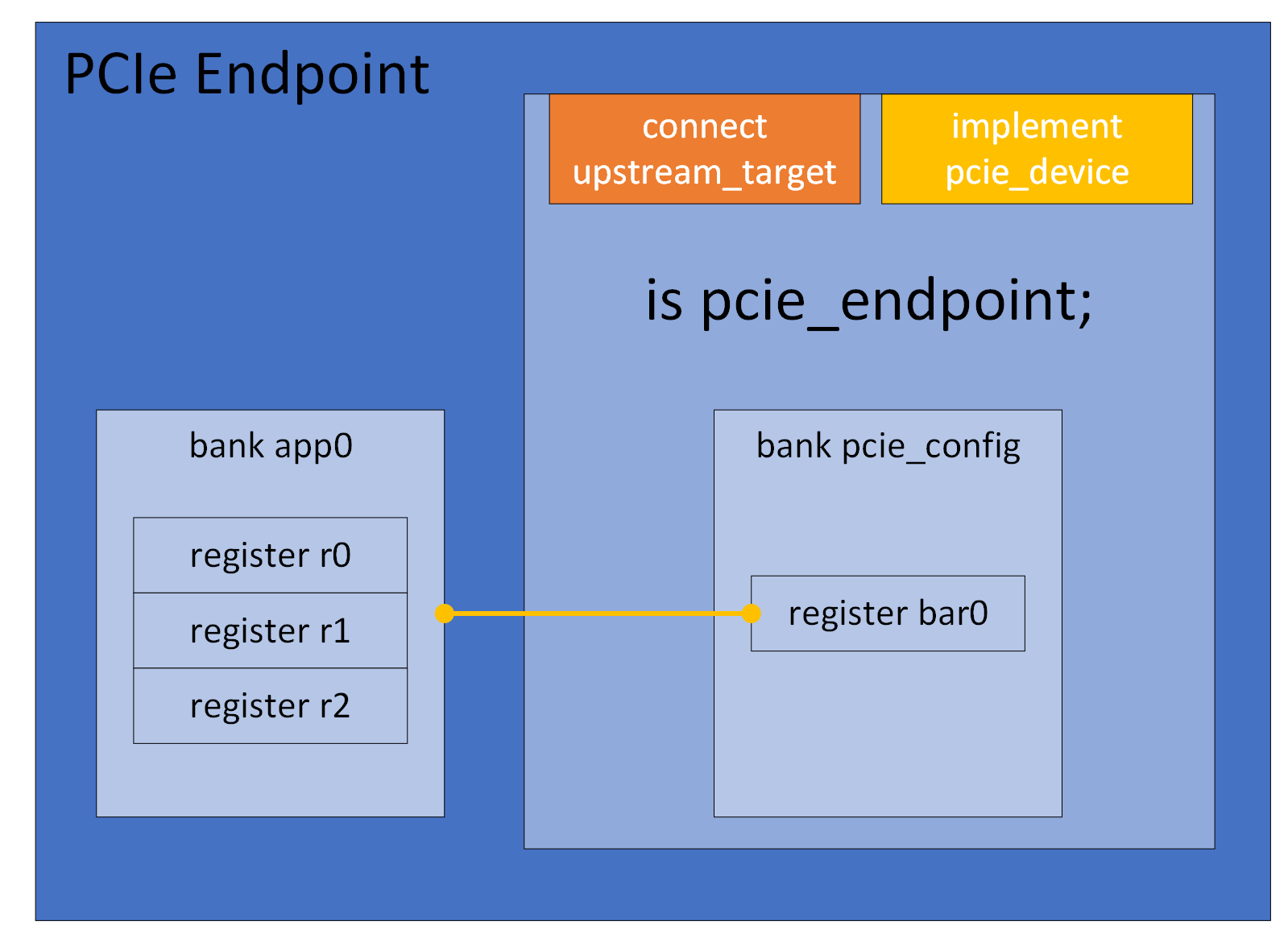

A typical endpoint device would use the pcie_endpoint template. This template defines the pcie_config register bank, which simulates a Type 0 Configuration header. It also defines a connect for the upstream target, implements the required interfaces, and handles the mapping of resources defined in any base address registers.

The configuration header of a PCIe device is a register bank, typically named pcie_config, which uses the template physical_config_bank. A register bank would normally not instantiate this template directly, but use either type_0_bank or type_1_bank instead. An endpoint that uses the pcie_endpoint template automatically gets a bank pcie_config which is an instance of the type_0_bank template. All instances of physical_config_bank are mapped in the configuration space of the upstream port when the device is connected, and all base address registers in the bank are mapped in the appropriate address space, according to their type. If more than one instance of physical_config_bank exists in the same device, i.e. when simulating a multi-function device, they must be separated by assigning different values to the parameter function. Sample code for a simple multi-function endpoint is available in the quick-start Multi-Function Endpoint section.

The pcie_config bank defines the registers vendor_id, device_id and class_code. An endpoint must assign init values for these, according to the specification of the hardware that is to be simulated. Sample code for setting Vendor ID and Device ID is available in the quick-start Endpoint section; the quick-start Root Complex example also sets Class Code.

Depending on the device to be modeled, the init value for other registers might also need to be customized.

PCIe defines optional "Capabilities" and "Extended Capabilities". These are available as templates, configurable by parameters. The templates are designed to be applied to groups, and all templates require the parameters base and next_ptr to be defined. The base parameter defines the address of the first register in the capability structure. The next_ptr parameter defines the base address of the next capability structure (or zero if this is the last capability). For example, the Endpoint in the quick-start section has the Subsystem ID (SSID) and Message Signaled Interrupts (MSI) capabilities defined: capabilities_ptr points to the SSID capability at 0x40, whose next_ptr points to the MSI capability at 0x60, whose next_ptr is 0.
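
The base and next_ptr values form the singly linked list that system software walks, starting from the Capabilities Pointer register at offset 0x34 of the configuration header. Each entry starts with a capability ID byte at base, followed by the next pointer at base + 1; the low two bits of each pointer are reserved and masked off. The plain C sketch below walks such a list in a 256-byte configuration-space image; walk_capabilities is a hypothetical helper, not a library function. Extended Capabilities use a different 4-byte header starting at offset 0x100 and are not covered here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CAP_PTR_OFFSET 0x34 /* Capabilities Pointer in the configuration header */

/* Walk the PCI capability list in a 256-byte configuration space image.
   Each capability has its ID at base + 0 and next_ptr at base + 1; the low
   two bits of every pointer are reserved and masked off. Stores up to
   max_caps capability base offsets in out and returns how many were found. */
static size_t walk_capabilities(const uint8_t cfg[256], uint8_t *out,
                                size_t max_caps)
{
    size_t n = 0;
    uint8_t ptr = cfg[CAP_PTR_OFFSET] & 0xfc;
    while (ptr != 0 && n < max_caps) { /* max_caps also stops malformed loops */
        assert(ptr >= 0x40);           /* capabilities follow the 64-byte header */
        out[n++] = ptr;
        ptr = cfg[ptr + 1] & 0xfc;
    }
    return n;
}
```

Applied to the quick-start Endpoint's configuration space, the walk would visit 0x40 (SSID) and then 0x60 (MSI) before reaching a next_ptr of 0.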

Note that, except where explicitly noted, the capability templates only define the registers and fields from the PCIe specification. The actual functionality must then be implemented by the device code. See the DML Template Reference for more details.

An endpoint typically defines at least one base address register. In Simics, these are declared by creating registers in the bank that corresponds to the configuration header (typically pcie_config). The base address registers must use one of the base address templates, for example memory_base_address_64. The Endpoint in the quick-start section defines two Memory Base Address registers, bar0 and bar2. Each of them is tied to a register bank that is mapped when the Memory Space Enable bit in the Command register is written as '1'.

There are a number of different templates that can be used to simulate base address registers, and they can be customized using various parameters. These are described in the Common Templates section of this document.

PCIe functions can send legacy interrupts using the raise_legacy_interrupt and lower_legacy_interrupt methods in the pcie_config bank. If the function has an MSI and/or MSI-X capability, it can use the appropriate capability template to implement this, and send message signalled interrupts by calling the raise method in the group that uses the msi_capability or msix_capability template. The Endpoint in the quick-start section, for example, has the MSI capability and raises MSI vector 0 when the intr register in app0 is written. If a function has both an MSI and an MSI-X capability, it is important to either call the raise() method on both capabilities (calling it on a disabled capability is a no-op), or use the enabled() method on both capabilities to check which one is enabled before calling the applicable raise() method.
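
Expressed as plain C with hypothetical types (not the library's DML API), the second approach amounts to the selection below. It also reflects two rules from the PCIe specification: software must not enable MSI and MSI-X at the same time, and a function with MSI or MSI-X enabled must not signal legacy INTx interrupts. In a DML model, the enable checks would correspond to the enabled() methods of the capability groups.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical snapshot of a function's interrupt configuration. */
typedef struct {
    bool msi_enabled;   /* MSI Enable bit of the MSI capability */
    bool msix_enabled;  /* MSI-X Enable bit of the MSI-X capability */
    bool intx_disabled; /* Interrupt Disable bit of the Command register */
} irq_config_t;

typedef enum { IRQ_NONE, IRQ_LEGACY_INTX, IRQ_MSI, IRQ_MSIX } irq_kind_t;

/* Pick the mechanism for the next interrupt. Software must never enable MSI
   and MSI-X together, and a function with either enabled must not use INTx. */
static irq_kind_t select_irq(irq_config_t c)
{
    assert(!(c.msi_enabled && c.msix_enabled));
    if (c.msix_enabled)
        return IRQ_MSIX;
    if (c.msi_enabled)
        return IRQ_MSI;
    return c.intx_disabled ? IRQ_NONE : IRQ_LEGACY_INTX;
}
```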

MSI-X is less straightforward than MSI. With MSI-X, the MSI-X Table and the MSI-X PBA structure both reside in BAR-mapped memory space. The library handles all of this, but more configuration is needed than for MSI, where it is enough to instantiate the MSI capability template.

dml 1.4;

device msix_example_1;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    register capabilities_ptr { param init_val = 0x40; }

    // bar is a BAR register at position 1 since it is at offset 0x14
    register bar @ 0x14 is (memory_base_address_64) { param size_bits = 12; }

    is defining_msix_capability;
    param msix_offset = 0x40;
    param msix_next_ptr = 0;
    param msix_num_vectors = 32;
    // The Table and PBA params contain the BIR value in the 3 lower bits and
    // the offset in the remaining 29 bits.
    param msix_table_offset_bir = 1;
    param msix_pba_offset_bir = ((0x10 * msix_num_vectors) << 3) | 1;
    param msix_data_bank = msix_data;
}

// Internal representation of the MSI-X state
bank msix_data is msix_table {
    param msix_bank = pcie_config;
}

Here we have an endpoint with one function that contains an MSI-X capability. We have set the msix_table_offset_bir parameter to 1. This parameter represents the Table BIR and Table Offset values in the MSI-X capability structure. Since Table BIR becomes 1 and Table Offset becomes 0, the MSI-X Table ends up at offset 0 in the memory region mapped by bar (which is at position 1).

With msix_pba_offset_bir set to ((0x10 * msix_num_vectors) << 3) | 1, where (0x10 * msix_num_vectors) is the size of the MSI-X Table, the PBA BIR is also 1. However, the offset makes the PBA start just after the end of the Table, in the same memory region.
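
Whatever offsets are chosen, the placement must leave room for both structures inside the BAR: per the PCIe specification, each MSI-X Table entry is 16 bytes (0x200 bytes for the 32 vectors above), and the PBA holds one pending bit per vector, rounded up to whole 64-bit QWORDs. The C sketch below checks such a layout using plain byte offsets rather than the encoded offset/BIR values; the helper names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Each MSI-X Table entry is 16 bytes; at most 2048 vectors are allowed. */
static uint32_t msix_table_size(unsigned num_vectors)
{
    assert(num_vectors >= 1 && num_vectors <= 2048);
    return 16u * num_vectors;
}

/* The PBA has one pending bit per vector, stored in whole 64-bit QWORDs. */
static uint32_t msix_pba_size(unsigned num_vectors)
{
    assert(num_vectors >= 1 && num_vectors <= 2048);
    return 8u * ((num_vectors + 63) / 64);
}

/* Table and PBA in the same BAR: both must fit inside it and not overlap. */
static bool msix_layout_ok(uint64_t bar_size, unsigned num_vectors,
                           uint64_t table_off, uint64_t pba_off)
{
    uint64_t t_end = table_off + msix_table_size(num_vectors);
    uint64_t p_end = pba_off + msix_pba_size(num_vectors);
    bool disjoint = t_end <= pba_off || p_end <= table_off;
    return t_end <= bar_size && p_end <= bar_size && disjoint;
}
```

With the 4 KB BAR and 32 vectors of the example, a Table at offset 0 and a PBA at offset 0x200 fit, while a PBA at 0x100 would overlap the Table.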

A few notes:

- The content of msix_data, which instantiates msix_table, should not need any consideration by the user, since it is a library implementation detail.

- An MSI-X interrupt would in this case be raised using pcie_config.msix.raise(<vector>).

- There must be a BAR register definition in the bank where the msix_capability is instantiated, at an offset that matches the BIR selection for both the Table and the PBA.

- The MSI-X Table and PBA do not need to reside in the same BAR-mapped memory region.

- As permitted by the PCIe specification, the MSI-X Table and PBA may use a BAR that is already occupied by other data. However, the library only supports this if that data (which is mapped by setting the map_obj parameter in the BAR register definition) starts at offset 0 of the memory region mapped by the BAR register. The MSI-X Table and PBA can then have any offset, as long as they do not overlap with valid access points in the map_obj.

Simics PCIe uses the transaction_t data type for all transactions. The config_bank template provides utility methods for reading and writing to the PCIe memory space. These methods reside in the group memory and operate on the upstream_target. Details are available in the Memory methods section of this document. Below is a sample DML device which defines a method that reads 8 bytes from PCIe memory and writes them back with all bits flipped.

Figure 5. Example memory reading and writing

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

method process_data(uint64 address) {
    // read 8 bytes from PCIe memory at the given address
    local (pcie_error_t err, uint64 value) = pcie_config.memory.read(address, 8);
    if (err != PCIE_Error_No_Error) {
        log error: "failed to read PCIe memory @ 0x%x", address;
        return;
    }
    // write the value back with all bits inverted
    err = pcie_config.memory.write(address, ~value, 8);
    if (err != PCIE_Error_No_Error)
        log error: "failed to write PCIe memory @ 0x%x", address;
}

Just like for memory transactions, the config_bank template defines a group message with utility methods for sending and receiving messages. By default, the methods for receiving just log an "unimpl" string and return false, indicating that the device did not accept the message. Device code must override the methods for the messages it wishes to service, and return true if the message is accepted. As with the memory group, the methods for sending messages operate on upstream_target.

Here is a sample DML device which accepts 'Vendor Defined Type 0' messages and sends a 'Vendor Defined Type 1' message upstream, with the address bits inverted. The available methods are described in more detail in the Sending and Receiving Messages sections.

Figure 6. Example sending and receiving messages

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    // ...
    group message {
        method vendor_defined_type_0(transaction_t *t, uint64 addr) -> (bool) {
            log info, 2: "VDM Type 0 received, address: 0x%x", addr;
            // reply upstream with a Vendor Defined Type 1 message,
            // using the inverted address
            local pcie_error_t err = message.send(
                ~addr, PCIE_Vendor_Defined_Type_1, PCIE_Msg_Route_Upstream);
            return err == PCIE_Error_No_Error;
        }
    }
    // ...
}

PCIe defines two types of resets: Conventional Reset and FLR (Function Level Reset). Conventional Reset is divided into three categories: Cold, Warm and Hot.

In the PCIe library, a Cold reset is the same as instantiating the PCIe device in Simics. Warm reset, Hot reset (as defined in the PCIe specification) and FLR are all modelled in the library.

Warm reset is modelled with the hard_reset templates, and an HRESET signal port exists to trigger a warm reset externally. On a warm reset, all registers go back to their initial state.

Hot reset is modelled in the library with the soft_reset templates (defined in DML Utilities). In PCIe, a Hot reset is triggered by an in-band signal. In Simics, the in-band signal is modelled through the hot_reset method of the pcie_device interface. When an upstream port invokes this method, a soft_reset is invoked on the PCIe device, resetting each PCIe function below the object implementing the pcie_device interface. Register fields inheriting the template pcie_sticky are not affected by a Hot reset. The library adds the template pcie_sticky to all fields defined as sticky in the specification.
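
The difference between the two conventional resets described above can be summarised as follows: a warm reset restores every field to its initial value, while a hot reset leaves sticky fields untouched. The C sketch below models only that rule, using a hypothetical field_t type; in a DML model, the library's hard_reset, soft_reset and pcie_sticky templates take care of this automatically.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { RESET_WARM, RESET_HOT } reset_kind_t;

/* Hypothetical model of a register field. */
typedef struct {
    uint32_t val;
    uint32_t init_val;
    bool sticky; /* true for fields that would use the pcie_sticky template */
} field_t;

/* Warm reset: every field returns to its initial value.
   Hot reset: sticky fields keep their current value. */
static void reset_field(field_t *f, reset_kind_t kind)
{
    assert(f != NULL);
    if (kind == RESET_HOT && f->sticky)
        return;
    f->val = f->init_val;
}
```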

FLR applies only to Endpoints and is modelled with the pcie_function_level_reset templates in the library. FLR operates at the function level: firmware can use it to reset individual functions in a multi-function device. Each type_0_bank inherits the template pcie_function_level_reset.

Firmware invokes an FLR by writing 1 to the field bcre_iflr in the Device Control register, or to the field iflr in the Control register of the PCI AF capability. In the model, an FLR is initiated by invoking the method pcie_function_level_reset on the function's PCIe config bank. This resets all FLR-relevant fields to their initial state, as well as the relevant state within the PCIe device.

To add custom FLR logic to a model, override the pcie_function_level_reset method in the bank.

Figure 7. Example FLR implementation

dml 1.4;

device endpoint;

import "pcie/common.dml";

saved int my_function_state;

is pcie_endpoint;

bank pcie_config {
    register capabilities_ptr {
        param init_val = 0x40;
    }

    is defining_exp_capability;
    param exp_offset = capabilities_ptr.init_val;
    param exp_next_ptr = 0x0;
    param exp_dp_type = PCIE_DP_Type_EP;

    method pcie_function_level_reset() {
        default();
        log info, 2: "Resetting function";
        my_function_state = 0;
    }
}

To add custom reset logic that needs to run for all PCIe resets (Warm, Hot and FLR), override the pcie_after_reset method at the top level of the PCIe config bank. By default, it is invoked by all PCIe reset types.

Figure 8. Example adding custom reset logic invoked by all PCIe reset types.

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    saved int state;

    method pcie_after_reset() {
        default();
        log info, 2: "Resetting model";
        state = 0;
    }
}

The pcie_config bank has a parameter use_standard_access_templates that defaults to true. When this parameter is true, the library automatically adds the templates read_only, hwinit and ignore_write to registers and fields according to the PCIe specification.

The hwinit template by default makes the field read-only. See the DML Template Reference for more details on the hwinit template.

The actual implementation of HwInit varies between vendor hardware implementations, because the PCIe specification leaves HwInit behaviour vendor defined to a large extent. Therefore, the HwInit behaviour in the library can be tailored to accommodate vendor functionality.

Here are a few examples of HwInit hardware behaviour which the library can model:

Figure 9. Example to allow firmware to write once to each hwinit field after hard reset or instantiation

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    in each (hwinit) {
        param writable_after_hreset = true;
    }
}

Figure 10. Example where input signal controls writable access to hwinit fields

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    in each (hwinit) {
        param writable_once_only = false;
    }
}

port hwinit_control_signal is signal_port {
    implement signal {
        method signal_raise() {
            default();
            pcie_config.unlock_hwinit_registers();
        }

        method signal_lower() {
            default();
            pcie_config.lock_hwinit_registers();
        }
    }
}

Figure 11. Example to disable hwinit behaviour entirely

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {
    in each (hwinit) {
        param writable_after_hreset = true;
        param writable_once_only = false;
    }
}
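
One way to read Figures 9 to 11 is as a small per-field state machine: a field is either locked (read-only) or unlocked; writable_after_hreset decides whether it starts unlocked after hard reset or instantiation, writable_once_only decides whether a successful write locks it again, and the lock and unlock methods toggle the state explicitly. The C sketch below encodes that interpretation with hypothetical names. It is an assumption drawn from the figure titles (including the assumed defaults writable_after_hreset = false and writable_once_only = true), not the library's implementation; consult the DML Template Reference for the exact hwinit semantics.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one hwinit field. The two flags mirror the DML
   parameters in Figures 9-11; assumed defaults are
   writable_after_hreset = false and writable_once_only = true. */
typedef struct {
    bool writable_after_hreset;
    bool writable_once_only;
    bool unlocked;
    uint32_t val;
} hwinit_field_t;

/* Hard reset or instantiation: restore the value and set the lock state. */
static void hwinit_hard_reset(hwinit_field_t *f, uint32_t init_val)
{
    assert(f != NULL);
    f->val = init_val;
    f->unlocked = f->writable_after_hreset;
}

/* Software write; returns true if the value was updated. */
static bool hwinit_write(hwinit_field_t *f, uint32_t value)
{
    assert(f != NULL);
    if (!f->unlocked)
        return false;            /* locked: behaves as read-only */
    f->val = value;
    if (f->writable_once_only)
        f->unlocked = false;     /* lock again after the first write */
    return true;
}

/* Stand-ins for unlock_hwinit_registers()/lock_hwinit_registers(). */
static void hwinit_unlock(hwinit_field_t *f) { f->unlocked = true; }
static void hwinit_lock(hwinit_field_t *f)   { f->unlocked = false; }
```

Under this reading, Figure 9 corresponds to {true, true}: one write after reset, then read-only. Figure 10 corresponds to {false, false}: writable only while the signal holds the field unlocked. Figure 11 corresponds to {true, false}: always writable.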

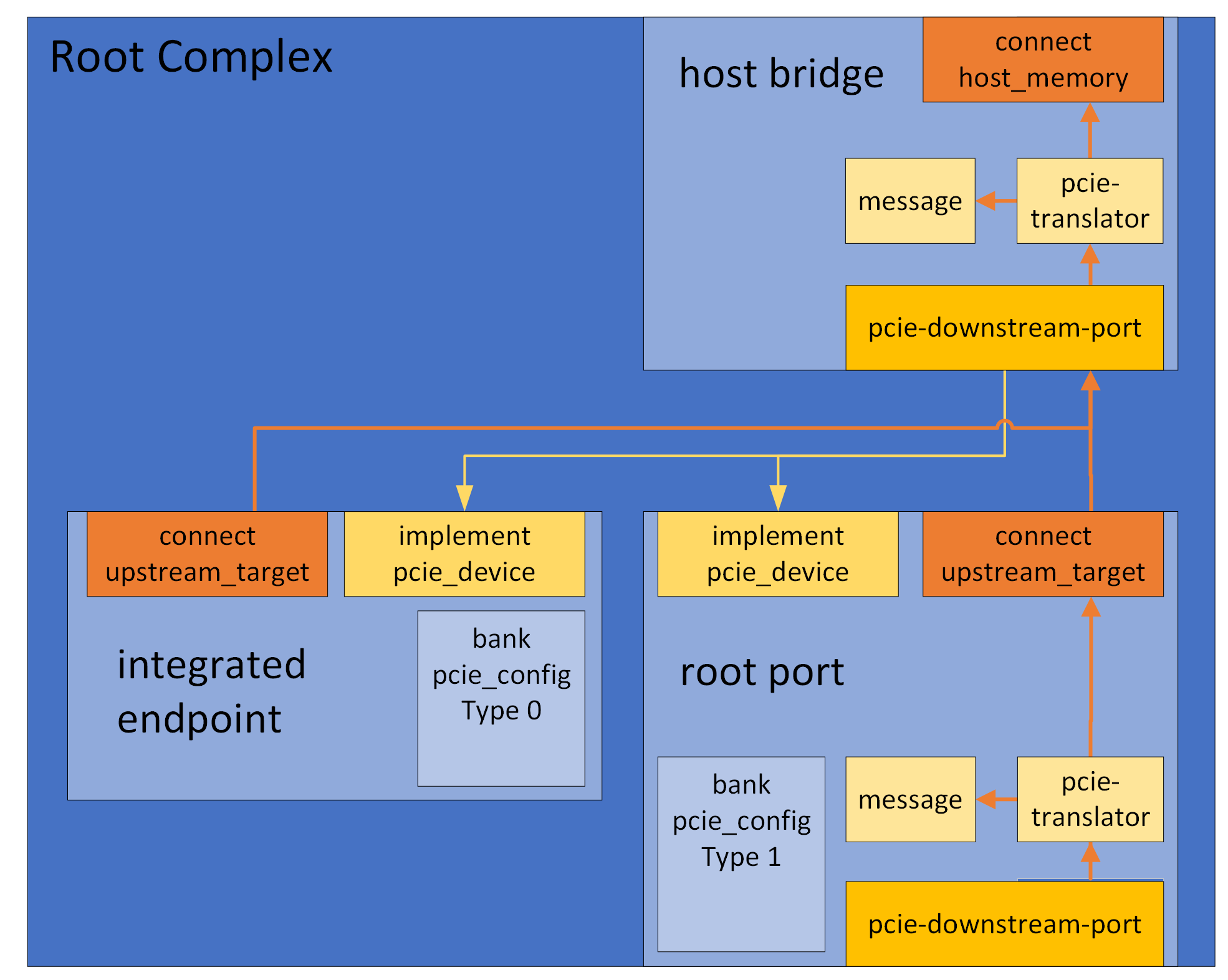

A PCIe device that is not an endpoint, i.e. a Root Port or a Switch Port, is simulated with the help of an object of the class pcie-downstream-port.

A root or switch port would typically use one of the pcie_root_port, pcie_downstream_port or pcie_upstream_port templates (an object that instantiates one of these templates is from now on referred to as a PCIe port). These PCIe port templates create a port object downstream_port of the class pcie-downstream-port and define a bank pcie_config, which is an instance of the type_1_bank template. They also define a connect to an upstream target and provide default implementations of the interface transaction_translator to handle upstream transactions.

The type_1_bank template automatically handles the

standard base address registers for IO, Memory, and Prefetchable memory.

It maps the configured ranges in the appropriate address space of the

connected upstream target, forwarding them to its downstream

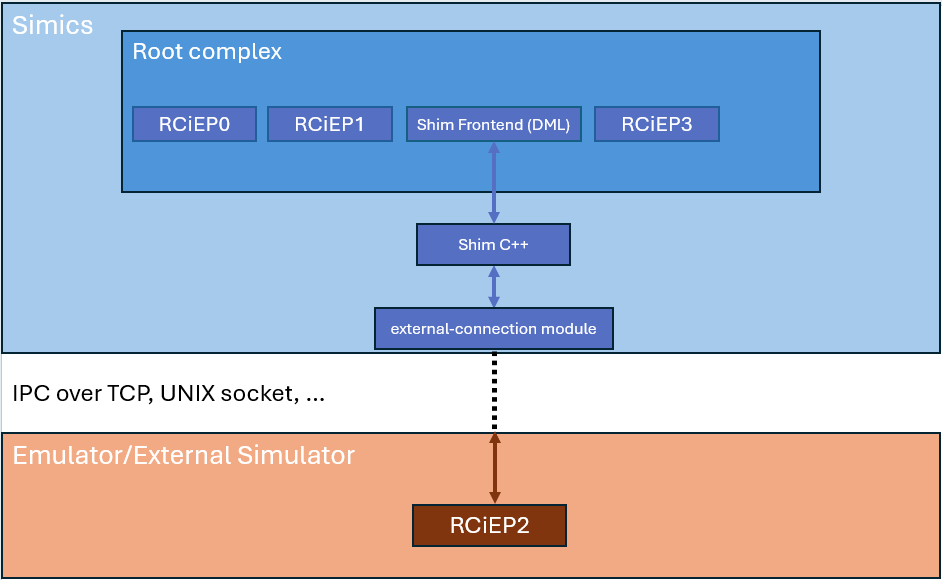

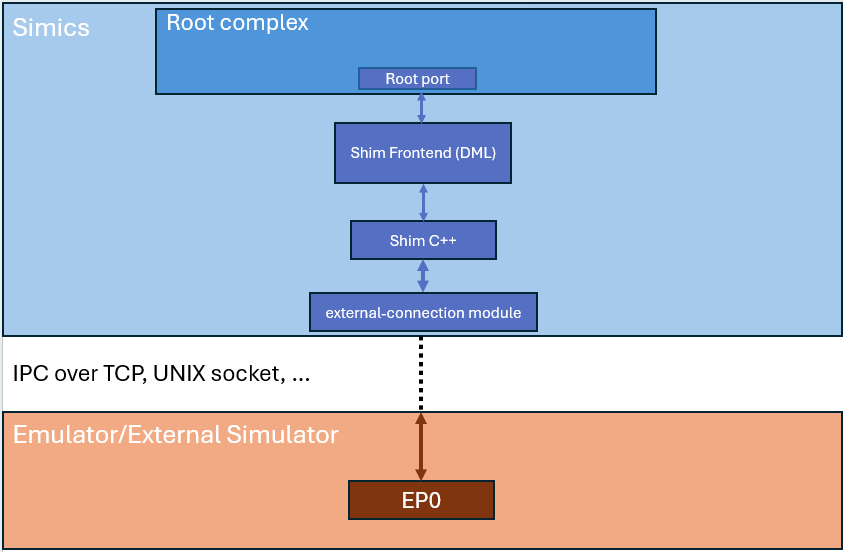

port. Here is an overview image of a sample RC with one root port and

one Root Complex Integrated Endpoint (RCiEP)

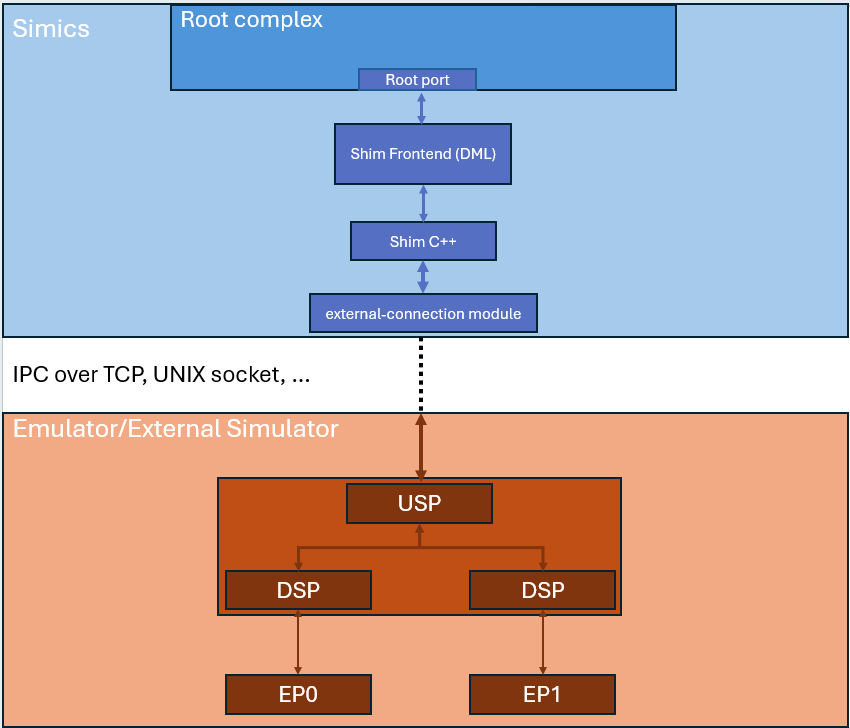

And here is an overview image of a sample Switch with one upstream and

three downstream ports.

The quick-start section contains sample code for creating a similar

Root Complex and Switch

A PCIe port by default automatically forwards all downstream traffic to its pcie-downstream-port helper object. This is done in the transaction_translators implemented by the ports in the downstream_translators group of the PCIe port; there is one port for each PCIe transaction type. The transaction_translators by default forward each incoming transaction to the PCIe port's downstream_port helper object. By overriding these transaction_translators, a PCIe port can dynamically redirect downstream traffic, apply custom access restrictions, or add atoms to a downstream transaction. If applicable, see the section about adding atoms to a transaction. See the documentation for pcie_downstream_translator for more information.

Adding atoms to a transaction by chaining multiple transactions needs to be handled with care if the object that adds the atoms does not want to break support for deferred transactions in a platform that supports them. More information regarding this topic can be found in the Simics documentation on deferred transactions, which is an important read to understand the example below.

In the example below, the transaction_translator interface of the mem port in the downstream_translators group of an object that instantiates the pcie_root_port template has been overridden. Here, if the transaction has an address of 0x3cc00004, the pcie_ecs atom is added to the transaction by chaining transactions. Note that to maintain support for checkpointing in the presence of deferred transactions, the value of the pcie_ecs atom, along with the transaction id, is stored in an attribute chained_transactions. It must also be ensured that the deferred transactions are restored when the checkpoint is read, as seen in the set() method of the attribute.

group downstream_translators {

port mem {

implement transaction_translator {

method translate(uint64 addr,

access_t access,

transaction_t *prev,

exception_type_t (*callback)(translation_t txl,

transaction_t *tx,

cbdata_call_t cbd),

cbdata_register_t cbdata) -> (exception_type_t) {

local translation_t txl = { .target = target, ... };

local transaction_t t;

local int num_atoms = (addr == 0x3cc00004) ? 4 : 3;

local atom_t atoms[(addr == 0x3cc00004) ? 4 : 3];

atoms[0] = ATOM_owner(dev.obj);

atoms[1] = ATOM_completion(&empty_completion);

if (addr == 0x3cc00004) {

atoms[2] = ATOM_pcie_ecs(PCIE_ECS_SIG_OS);

atoms[3] = ATOM_list_end(0);

} else {

atoms[2] = ATOM_list_end(0);

}

t.atoms = atoms;

t.prev = prev;

local exception_type_t exc = callback(txl, &t, cbdata);

if (exc == Sim_PE_Deferred) {

local transaction_t *new_ct = new transaction_t;

local atom_t *new_atoms = new atom_t[4];

new_ct->atoms = new_atoms;

memcpy(new_ct->atoms, t.atoms, sizeof(atoms));

new_ct->atoms[1] = ATOM_completion(&completion);

new_ct->prev = t.prev;

SIM_replace_transaction(&t, new_ct);

VADD(chained_transactions.val, new_ct);

return SIM_monitor_chained_transaction(new_ct, exc);

} else {

// Cannot return exc directly here. If the parent transaction_t

// has a completion atom that it expects to be called,

// it will only be called if SIM_monitor_chained_transaction

// is called by this transaction.

return SIM_monitor_chained_transaction(&t, exc);

}

}

}

}

}

method empty_completion(transaction_t *t, exception_type_t e) ->

(exception_type_t) {

return e;

}

method completion(transaction_t *t, exception_type_t e) ->

(exception_type_t) {

VREMOVE_FIRST_MATCH(chained_transactions.val, t);

delete t->atoms;

delete t;

return e;

}

attribute chained_transactions is destroy {

param type = "[[ii]*]";

session transaction_t * vect val;

param internal = true;

method set(attr_value_t value) throws {

if (!SIM_is_restoring_state(obj)) {

SIM_attribute_error("must only be set during checkpoint restore");

throw;

}

for (local int i = 0; i < VLEN(this.val); i++)

delete this.val[i];

VFREE(this.val);

for (local int i = 0; i < SIM_attr_list_size(value); i++) {

local attr_value_t li = SIM_attr_list_item(value, i);

local int64 id = SIM_attr_integer(SIM_attr_list_item(li, 0));

local pcie_ecs_t ecs = SIM_attr_integer(SIM_attr_list_item(li, 1));

local transaction_t *t = new transaction_t;

local atom_t *atoms = new atom_t[ecs != PCIE_ECS_Legacy ? 4 : 3];

t->atoms = atoms;

atoms[0] = ATOM_owner(dev.obj);

atoms[1] = ATOM_completion(&completion);

if (ecs != PCIE_ECS_Legacy) {

atoms[2] = ATOM_pcie_ecs(ecs);

atoms[3] = ATOM_list_end(0);

}

else {

atoms[2] = ATOM_list_end(0);

}

VADD(this.val, t);

SIM_reconnect_transaction(t, id);

}

}

method get() -> (attr_value_t) {

local attr_value_t value = SIM_alloc_attr_list(VLEN(this.val));

local transaction_t t;

for (local int i = 0; i < VLEN(this.val); i++) {

// connect the atom-list to an empty transaction, to avoid finding

// atoms in chained 'prev'

t.atoms = this.val[i]->atoms;

local attr_value_t li = SIM_make_attr_list(

2,

SIM_make_attr_uint64(SIM_get_transaction_id(this.val[i])),

SIM_make_attr_uint64(ATOM_get_transaction_pcie_ecs(&t)));

SIM_attr_list_set_item(&value, i, li);

}

return value;

}

method destroy() {

// Deferred transactions are completed by Simics Core, we just need to

// free the vect

VFREE(this.val);

}

}

By default, a PCIe port automatically forwards all upstream traffic to its upstream_target. A PCIe port that needs different behavior can redirect traffic of a certain type by setting any or all of the parameters def, msg, mem, io, and cfg in the txl_target group to a valid map target. This group belongs to the object that instantiated one of the PCIe port templates. Setting a parameter to NULL blocks upstream traffic of that type. If a PCIe port needs to redirect upstream traffic dynamically, it can instead override the transaction_translator implementation in the PCIe port. See the documentation for the pcie_upstream_translator template for more information.

Messages can be handled by creating instances of the template

handling_messages in the upstream_message port. This port is

created automatically by the pcie_root_port template. See the

documentation for the handling_messages template for more

information. Here is an example that handles Vendor Defined Message

Type 0:

Figure 12. Example upstream message handling

dml 1.4;

device rp;

import "pcie/common.dml";

is pcie_root_port;

port upstream_message {

group vdm0 is handling_messages {

method message(transaction_t *t, uint64 addr,

pcie_message_type_t type) -> (pcie_error_t) {

if (type != PCIE_Vendor_Defined_Type_0) {

// message not handled here

return PCIE_Error_Not_Set;

}

log info: "VDM0 received";

return PCIE_Error_No_Error;

}

}

}

A device that wishes to bridge PCIe to/from host memory, without

necessarily being a Type 1 device, would use the pcie_bridge

template. Like pcie_root_port, the template creates a port object

downstream_port but it doesn't create any register bank and instead

of an upstream_target it has a connect host_memory to which it

translates requests.

Segment routing across PCIe hierarchies is supported in PCIe 6 and

the PCIe modeling library provides templates and methods to support it.

Segment routing consists of two parts:

- Configuring the segment number for each PCIe hierarchy within a Root Complex.

- Routing messages upstream if the destination segment number does not match the source segment number.

For the first part, the PCIe 6 specification requires configuration requests to contain the segment number of the hierarchy. The Root Complex is responsible for appending the pcie_destination_segment atom to downstream configuration requests. The PCIe library captures this atom and stores its value internally. This applies to Root Ports, Switches, and Endpoints. For segment routing to work, all relevant devices in the hierarchy must instantiate the dev3_capability capability. For instance, if an endpoint wants to route a message to a target in another PCIe hierarchy, all upstream ports that connect the endpoint to the Root Complex must have the dev3_capability instantiated.

The PCIe library handles the second part automatically up to the Root Complex. Before that, however, the message initiator must set up the message transfer with the send_custom method. See the Sending Message section for more details.

Figure 13. Example sending message upstream with segment number

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {

// ...

is defining_dev3_capability;

param dev3_offset = 0x100;

param dev3_next_ptr = dev3_offset + 0x100;

// ...

method send_message(uint16 target_id, uint8 segment_number) {

local atom_t extra_atoms[2];

extra_atoms[0] = ATOM_pcie_destination_segment(segment_number);

extra_atoms[1] = ATOM_list_end(0);

local bytes_t data;

local pcie_error_t ret = message.send_custom(target_id << 48,

PCIE_Vendor_Defined_Type_0,

PCIE_Msg_Route_ID,

data,

extra_atoms);

}

}
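Figure 13 passes target_id << 48 as the message address, which puts the 16-bit Routing ID of the target in address bits 63:48. The C sketch below shows that bit layout using the standard PCIe Routing ID format: bus in bits 15:8, device in bits 7:3, and function in bits 2:0. The helper names are hypothetical and not part of the library. The sketch only illustrates the arithmetic, and it widens the value to 64 bits before shifting so that no bits are lost.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: pack a Routing ID (Bus/Device/Function) into 16 bits. */
static uint16_t make_bdf(uint8_t bus, uint8_t dev, uint8_t fn)
{
    return (uint16_t)((bus << 8) | ((dev & 0x1f) << 3) | (fn & 0x7));
}

/* Place the Routing ID in address bits [63:48], as target_id << 48 does in
   Figure 13. The value is widened to 64 bits before the shift. */
static uint64_t msg_route_id_addr(uint16_t target_id)
{
    return (uint64_t)target_id << 48;
}

/* Recover the Routing ID from such a message address. */
static uint16_t msg_route_id_from_addr(uint64_t addr)
{
    return (uint16_t)(addr >> 48);
}
```

For example, bus 1, device 2, function 3 gives Routing ID 0x0113 and message address 0x0113000000000000.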

Figure 14. Example of root complex with multiple PCIe segments supporting message routing across segments.

dml 1.4;

device root_complex;

import "utility.dml";

import "pcie/common.dml";

param pcie_version = 6.0;

param nbr_segments = 4;

group segment[segment_id < nbr_segments] {

subdevice bridge is pcie_bridge {

group txl_target {

param msg = dev.upstream_messages.map_target;

}

}

subdevice root_port is (pcie_root_port, post_init) {

bank pcie_config {

register capabilities_ptr {

param init_val = 0x40;

}

is defining_pm_capability;

param pm_offset = capabilities_ptr.init_val;

param pm_next_ptr = pm_offset + 0x10;

is defining_exp_capability;

param exp_offset = pm_next_ptr;

param exp_next_ptr = exp_offset + 0x30;

param exp_dp_type = PCIE_DP_Type_RP;

is defining_dev3_capability;

param dev3_offset = exp_next_ptr;

param dev3_next_ptr = dev3_offset + 0x100;

}

method post_init() {

pcie_device.connected(bridge.downstream_port.obj, 0);

}

}

}

port upstream_messages is (init_mt) {

implement transaction_translator {

method translate(uint64 addr,

access_t access,

transaction_t *t,

exception_type_t (*callback)(translation_t txl,

transaction_t *tx,

cbdata_call_t cbd),

cbdata_register_t cbdata) -> (exception_type_t) default {

local pcie_msg_route_t route =

ATOM_get_transaction_pcie_msg_route(t);

local const uint8* seg_id =

ATOM_transaction_pcie_destination_segment(t);

local translation_t txl;

switch (route) {

case PCIE_Msg_Route_ID:

if (seg_id != NULL && *seg_id < nbr_segments) {

txl.target = segment[*seg_id].bridge.downstream_port.map_target;

}

break;

case PCIE_Msg_Route_Upstream:

txl.target = dev.message.map_target;

break;

default:

log error: "%s, Unexpected pcie routing type: %d", this.qname, route;

return Sim_PE_IO_Not_Taken;

}

if (txl.target) {

log info, 2:

"Forwarding messages: %s, %s, segment=%d, address=0x%x, to: %s",

pcie_message_type_name(ATOM_get_transaction_pcie_msg_type(t)),

pcie_route_type_name(ATOM_get_transaction_pcie_msg_route(t)),

seg_id != NULL ? *seg_id : -1,

addr,

SIM_object_name(SIM_map_target_object(txl.target));

}

return callback(txl, t, cbdata);

}

}

}

port message is (message_port);
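The routing decision made by the upstream_messages port in Figure 14 can be modelled in plain C, as in the hypothetical sketch below. The enum and function names are invented for illustration. DEST_NONE stands for the case where txl.target is left unset. The model shows two cases. A Route-by-ID message reaches a segment bridge only when the pcie_destination_segment atom is present and names an existing segment. Upstream-routed messages go to the local message port whether or not the atom is present.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for the pcie_msg_route_t values used in Figure 14. */
enum route { ROUTE_ID, ROUTE_UPSTREAM, ROUTE_OTHER };

/* Where the message ends up; DEST_NONE models txl.target left unset. */
enum dest_kind { DEST_NONE, DEST_SEGMENT_BRIDGE, DEST_LOCAL_MESSAGE_PORT, DEST_ERROR };

typedef struct {
    enum dest_kind kind;
    int segment;            /* only meaningful for DEST_SEGMENT_BRIDGE */
} dest_t;

/* seg_id is NULL when the transaction has no pcie_destination_segment atom. */
static dest_t route_message(enum route r, const uint8_t *seg_id,
                            unsigned nbr_segments)
{
    dest_t d = { DEST_NONE, -1 };
    switch (r) {
    case ROUTE_ID:
        /* Only forward to a segment that exists in this Root Complex. */
        if (seg_id != NULL && *seg_id < nbr_segments) {
            d.kind = DEST_SEGMENT_BRIDGE;
            d.segment = *seg_id;
        }
        break;
    case ROUTE_UPSTREAM:
        /* Upstream-routed messages terminate in the local message port. */
        d.kind = DEST_LOCAL_MESSAGE_PORT;
        break;
    default:
        /* Corresponds to the log error / Sim_PE_IO_Not_Taken branch. */
        d.kind = DEST_ERROR;
        break;
    }
    return d;
}
```

Because the upstream case does not require the atom, any code that prints the segment number, such as the log statement in Figure 14, must handle a missing atom.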

Note! As of PCIe 6.1, DOE protocols are now referred to as DOE features.

Data Object Exchange (DOE) is supported and can be implemented in a device with the doe_capability template. By default, this template implements the DOE Discovery protocol. To add a new protocol, add an entry to the protocols group inside the doe group of the doe_capability. The DOE Discovery implementation detects and reports added protocols without any changes. Below is an example of a PCIe device that implements DOE with a custom DOE protocol.

Figure 15. Example of device with DOE and a custom DOE protocol

dml 1.4;

device endpoint;

import "pcie/common.dml";

is pcie_endpoint;

bank pcie_config {

register capabilities_ptr { param init_val = 0x40; }

is defining_doe_capability;

param doe_offset = 0x40;

param doe_next_ptr = doe_offset + 0x100;

param doe_mailbox_len = 50;

param doe_interrupt_support = true;

group doe {

group protocols {

group test_protocol is doe_test_protocol {}

}

}

}

template doe_test_protocol is doe_protocol {

param vendor_id = 0x00FF;

param data_object_type = 1;

method handle_request(const uint32 *req, uint18 req_len) {

local uint32 res[3];

local doe_header_t res_header;

res_header.vendor_id = vendor_id;

res_header.data_object_type = data_object_type;

res_header.length = 3;

memcpy(res, &res_header, sizeof(res_header));

res[2] = 0xABCDEF;

try {

mailbox.push_buffer(res, sizeof(res) / sizeof(res[0]));

response_ready(sizeof(res) / sizeof(res[0]));

} catch {

response_error();

}

}

}

The response can be pushed with either the push or the push_buffer method of the mailbox. Handling of each data object must end with a call to either response_ready(res_len) or response_error().
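For reference, a DOE data object starts with a two-DWORD header. The first DWORD holds the Vendor ID in bits 15:0 and the Data Object Type in bits 23:16. The second DWORD holds the object length in DWORDs in bits 17:0, where 0 encodes 2^18 DWORDs. The C sketch below packs such a header. With the values from Figure 15 (vendor_id 0x00FF, data_object_type 1, length 3), the first DWORD becomes 0x000100FF. The sketch illustrates the layout from the DOE specification; it is not the library's doe_header_t, whose definition is not shown here, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Pack a DOE data object header into two DWORDs. */
static void doe_pack_header(uint32_t hdr[2], uint16_t vendor_id,
                            uint8_t data_object_type, uint32_t length_dw)
{
    hdr[0] = (uint32_t)vendor_id | ((uint32_t)data_object_type << 16);
    /* Length is an 18-bit DWORD count; 2^18 DWORDs wraps to 0. */
    hdr[1] = length_dw & 0x3ffff;
}

/* Read back the length in DWORDs, expanding the 0 encoding. */
static uint32_t doe_header_length_dw(const uint32_t hdr[2])
{
    uint32_t len = hdr[1] & 0x3ffff;
    return len == 0 ? (1u << 18) : len;
}
```

The length includes the two header DWORDs, which is why the response in Figure 15 (two header DWORDs plus one data DWORD) uses length 3.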

The template pcie_phy adds a port to a device with the name phy. This is

intended to be used as a target for transactions which are related to the

physical layer in PCIe. The current default transaction handler in this port

handles transactions that contain the pcie_link_negotiation atom. It will try

to do link training by comparing the incoming max speed/width with its own max

speed/width and let the transaction initiator know the maximum common value of

the respective property. This is essentially a simplification of the TS1 and TS2

ordered sets that are actually exchanged on a real PCIe link. Transactions in

this layer are expected to have a BDF in address[31:16]. The bus number is

unused as the transactions only traverse over one link. The function is

currently also unused as the transaction will end up in the top-level of a

device.
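The negotiation performed by the phy port reduces to taking the smaller of the two maximum values for each property. The target device can be read from the BDF in address[31:16]. The C sketch below illustrates both steps. It assumes that link speeds are ordered integer codes (higher is faster), that widths are lane counts, and that each side supports every width up to its maximum. The type and function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical representation: speed as an ordered code, width as lanes. */
typedef struct {
    unsigned speed;
    unsigned width;
} link_caps_t;

static unsigned min_u(unsigned a, unsigned b) { return a < b ? a : b; }

/* Maximum common value of each property = the smaller of the two maxima. */
static link_caps_t negotiate_link(link_caps_t local, link_caps_t remote)
{
    link_caps_t result = { min_u(local.speed, remote.speed),
                           min_u(local.width, remote.width) };
    return result;
}

/* BDF carried in address[31:16] of a physical-layer transaction. */
static uint16_t phy_addr_bdf(uint64_t addr)
{
    return (uint16_t)((addr >> 16) & 0xffff);
}

/* Device number = BDF bits [7:3] = address bits [23:19]. */
static unsigned phy_addr_device(uint64_t addr)
{
    return (unsigned)((addr >> 19) & 0x1f);
}
```

For example, a x16 port with speed code 5 linked to a x8 device with speed code 4 trains to code 4 at x8.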

Consider the standard PCIe switch distributed as part of Simics Base. It indicates support for link speeds and widths using extended capability structures. The supported values are also set in the link registers of the PCI Express Capability Structure. The switch also supports Hot-Plug and has an attention button and a power indicator. The button is useful for Hot-Plug removal, and the indicator lets software report the status of Hot-Plug operations. These features are enabled with params found in the exp_capability and exp_slot templates. As a result, the device emits interrupts for Slot and Link related events if software has enabled them. If interrupts might be generated by firmware in the device rather than by hardware, the shared methods in the exp_slot template can be overridden to fit the use case.

Figure 16. PCIe Switch supporting Hot-Plug and Link Training

dml 1.4;

device standard_pcie_switch;

param classname = "standard-pcie-switch";

param desc = "standard PCIe switch";

param documentation = "A standard PCIe switch with 4 downstream slots that"

+ " contains the mandatory capabilities for a PCIe"

+ " function in all ports.";

import "pcie/common.dml";

param pcie_version = 6.0;

template switch_port is pcie_port {

bank pcie_config {

register device_id { param init_val = 0x0370; }

register vendor_id { param init_val = 0x8086; }

register capabilities_ptr { param init_val = 0x40; }

register bar0 @ 0x10 is (memory_base_address_64) {

param size_bits = 14;

}

is defining_pm_capability;

param pm_offset = capabilities_ptr.init_val;

param pm_next_ptr = pm_offset + 0x08;

is defining_msix_capability;

param msix_offset = pm_next_ptr;

param msix_next_ptr = msix_offset + 0x0C;

param msix_num_vectors = 32;

param msix_table_offset_bir = 0;

param msix_pba_offset_bir = (0x10 * msix_num_vectors) << 3;

param msix_data_bank = msix_data;

is defining_exp_capability;

param exp_offset = msix_next_ptr;

param exp_next_ptr = 0x0;

group exp {

param has_links = true;

group link {

param max_link_speed = PCIE_Link_Speed_32;

param max_link_width = PCIE_Link_Width_x8;

}

}

is defining_dlf_capability;

param dlf_offset = 0x100;

param dlf_next_ptr = dlf_offset + 0x0C;

is defining_pl16g_capability;

param pl16g_offset = dlf_next_ptr;

param pl16g_next_ptr = pl16g_offset + 0x28;

param pl16g_max_link_width = PCIE_Link_Width_x8;

param pl16g_max_lanes = PCIE_Link_Width_x8;

is defining_pl32g_capability;

param pl32g_offset = pl16g_next_ptr;

param pl32g_next_ptr = 0;

param pl32g_max_lanes = PCIE_Link_Width_x8;

}

bank msix_data is msix_table {

param msix_bank = pcie_config;

}

}

subdevice usp is (pcie_upstream_port, switch_port) {

bank pcie_config {

param exp_dp_type = PCIE_DP_Type_UP;

}

}

subdevice dsp[i < 4] is (pcie_downstream_port, pcie_link_training,

switch_port, post_init) {

bank pcie_config {

param exp_dp_type = PCIE_DP_Type_DP;

group exp {

param has_hotplug_capable_slot = true;

param has_attention_button_slot = true;

group slot {

param has_power_indicator = true;

}

}

}

method post_init() {

pcie_device.connected(usp.downstream_port.obj, i << 3);

}

}

Note that the downstream ports also have to instantiate the pcie_link_training template for link training support. This ensures that link training is initiated toward the device on the other side of the link when that device is connected. For link training to succeed, the device on the other side of the link must also have one or more functions with link attributes in their PCI Express Capability Structure. This can be done, for example, by setting the params max_link_speed and max_link_width in the link group, as the switch in the example above does.

There are 3 (in-band) error signaling mechanisms defined in the PCIe

specification:

- Completion status

- Error messages

- Error forwarding / data poisoning

The PCIe library has support for the first two mechanisms to various degrees (as

discussed below).

A completion packet in PCIe includes a completion status field in its header. The library implements this as the atom pcie_error_ret. The library sets this atom in some cases when handling a request, and the user of the library can also set it in applicable contexts. The requester can then inspect the pcie_error_ret atom to determine whether the request succeeded.

Error messages are sent to the Root Complex by functions in the PCIe hierarchy that encounter errors. These can be device-specific internal errors or errors defined in the PCIe specification, and the message goes more specifically to the applicable Root Port. Root Complex integrated endpoints can also send these messages, in which case they are sent to a Root Complex Event Collector (RCEC), if one is implemented. Note that the library currently has no functional support for Error Messages being collected by an RCEC.

PCIe functions may use the emit_errors group in the config_bank to submit errors with emit_errors.submit_correctable_error(), emit_errors.submit_uncorrectable_error(), or emit_errors.submit_uncorrectable_error_advisory_non_fatal(). The library then sets the applicable status bits in the PCI configuration header and in the PCI Express Capability Structure. However, it currently does not set the applicable AER bits and ignores the AER mask and severity bits. Apart from AER, if software has configured the device to send error messages for an error type, the library sends these messages automatically.
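Without AER, the PCIe Base Specification uses the Command and Device Control registers to decide whether a function sends an error message. ERR_COR requires Correctable Error Reporting Enable (Device Control bit 0). ERR_NONFATAL and ERR_FATAL are sent if either SERR# Enable (Command bit 8) or the matching Device Control reporting enable bit (bit 1 or bit 2) is set. The C sketch below encodes this rule as read from the specification. It is not the library's implementation, the names are hypothetical, and it leaves out Unsupported Request and Advisory Non-Fatal handling.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum err_kind { ERR_CORRECTABLE, ERR_NONFATAL, ERR_FATAL };

#define CMD_SERR_ENABLE  (1u << 8) /* Command: SERR# Enable */
#define DEVCTL_CERE      (1u << 0) /* Device Control: Correctable Error Reporting Enable */
#define DEVCTL_NFERE     (1u << 1) /* Device Control: Non-Fatal Error Reporting Enable */
#define DEVCTL_FERE      (1u << 2) /* Device Control: Fatal Error Reporting Enable */

/* True if an ERR_COR / ERR_NONFATAL / ERR_FATAL message should be sent (AER ignored). */
static bool should_send_error_message(enum err_kind kind, uint16_t command,
                                      uint16_t devctl)
{
    bool serr = (command & CMD_SERR_ENABLE) != 0;
    switch (kind) {
    case ERR_CORRECTABLE:
        /* SERR# Enable does not cover correctable errors. */
        return (devctl & DEVCTL_CERE) != 0;
    case ERR_NONFATAL:
        return serr || (devctl & DEVCTL_NFERE) != 0;
    case ERR_FATAL:
        return serr || (devctl & DEVCTL_FERE) != 0;
    }
    return false;
}
```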

Root ports (that have instantiated pcie_root_port) may instantiate the

handling_err_messages template in their local message target port (by default

the upstream_message port in root port). In this instantiation, the

handle_error_message() method can be implemented to handle incoming error

signaling messages coming from downstream functions.

The ATS capability is modelled with template ats_capability.

See chapter Extended Capabilities templates

for the template and its method definitions.

Template ats_upstream_translator shall be used for Root Complexes

to handle upstream ATS transactions. See chapter Bridge and Type 1 templates

for the template and its method definitions.

Translation requests and completions are modelled as a Simics read transaction with a payload.

The transaction contains the following atoms to represent request and completion:

- ATOM_pcie_at
- ATOM_pcie_byte_count_ret
- ATOM_pcie_ats_translation_request_cxl_src
- ATOM_pcie_ats_translation_request_no_write
- ATOM_pcie_pasid

ATOM_pcie_at shall be set to PCIE_AT_Translation_Request.

The Translation Agent (TA) shall set ATOM_pcie_byte_count_ret to indicate how many valid completion entries it has written into the read payload. The value of ATOM_pcie_byte_count_ret is 4 * the number of valid completion entries.

The transaction payload consists of one or more entries of type

pcie_ats_translation_completion_entry_t to be filled in by the Translation Agent.

ATC Endpoints can use the translation_request method in template ats_capability to

issue an Address Translation Request.

Root complexes shall override the default issue method on port ats_request

to implement the Translation Request logic.

Invalidations are modelled with two PCIe messages represented by two Simics write transactions:

- PCIE_ATS_Invalidate (Invalidation Request Message)
- PCIE_ATS_Invalidate_Completion (Invalidation Completion Message)

The invalidation request message consists of the following atoms together with a payload:

- ATOM_pcie_ats_invalidate_request_itag
- ATOM_pcie_pasid

The payload is of type pcie_ats_invalidate_request_payload_t and has the same content

as the message body in the PCIe specification.
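The range covered by an invalidation request uses the same Size (S) encoding as ATS translation completions. With S clear, the request covers a single 4 KiB page. With S set, the size is given by the lowest clear address bit at or above bit 12: bit 12 clear means 8 KiB, bit 12 set with bit 13 clear means 16 KiB, and so on. The C sketch below decodes an (address, S) pair into a base and a size. It is a hypothetical helper for illustration only; the sample in Figure 18 uses the get_invalidation_range method for this purpose.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint64_t base;
    uint64_t size;
} addr_range_t;

/* Decode an ATS address plus Size (S) bit into the range it covers. */
static addr_range_t ats_decode_range(uint64_t addr, int s)
{
    addr_range_t r;
    uint64_t page = addr & ~(uint64_t)0xfff;   /* bits [11:0] are not address bits */
    if (!s) {
        r.base = page;
        r.size = 4096;
        return r;
    }
    /* Lowest clear bit b at or above bit 12 gives a size of 2^(b + 1). */
    int b = 12;
    while (b < 62 && ((page >> b) & 1))
        b++;
    r.size = (uint64_t)1 << (b + 1);
    r.base = page & ~(r.size - 1);
    return r;
}
```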

The Root Complex can use method ats_invalidate, defined in template ats_upstream_translator,

to send an ATS invalidation request message.

When the ATC endpoint receives the invalidation request message, the method invalidate_received in template ats_capability is called. The device model has to override the default method and add the logic required to handle the invalidation.

The ATC endpoint can use method invalidate_complete in template ats_capability

to send the invalidation completion message back to the TA.
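An Invalidation Completion reports finished invalidations as a 32-bit ITag vector, with one bit per 5-bit ITag. This is why the ATC sample later in this section passes 1 << itag to invalidate_complete. The C sketch below shows how such a vector is built. It also shows how a Translation Agent might clear completed ITags from its set of outstanding ones. The helper names and the TA-side bookkeeping are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Mark one ITag (0..31) as completed in an ITag vector. */
static uint32_t itag_vector_add(uint32_t vec, unsigned itag)
{
    return vec | (UINT32_C(1) << (itag & 0x1f));
}

/* Hypothetical TA-side bookkeeping: drop ITags reported as completed. */
static uint32_t itag_pending_clear(uint32_t pending, uint32_t completed_vec)
{
    return pending & ~completed_vec;
}
```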

The Root Complex can instantiate template handling_ats_messages to start accepting

ATS invalidate completion messages. It has to override method

ats_invalidate_completion to include its invalidation completion logic.

The instantiation of template handling_ats_messages

has to be done under the port that inherits the message_port template.

For Root Complexes inheriting template pcie_bridge the port is defined

as port message. See chapter Bridge and Type 1 templates

for definitions of these templates.

ATS translated and untranslated requests carry ATOM_pcie_at, which shall be set to either PCIE_AT_Translated or PCIE_AT_Untranslated.

For endpoints doing DMA, the methods memory_write_bytes and memory_read_buf in template ats_capability make it easy to construct AT memory requests.

For Root Complexes, the ports ats_translated and ats_untranslated, defined in template ats_upstream_translator, receive all incoming AT memory requests. The device model has to override the default implementations of these ports to achieve the desired behaviour.

The Page Request Services are modelled with the following atoms:

- ATOM_pcie_prs_page_request
- ATOM_pcie_prs_page_group_response
- ATOM_pcie_prs_stop_marker
- ATOM_pcie_pasid

ATOM_pcie_prs_page_request is of data type pcie_prs_page_request_t.

ATOM_pcie_prs_page_group_response is of data type pcie_prs_page_group_response_t

and its response codes are listed in enum pcie_prs_response_code_t. ATOM_pcie_prs_stop_marker is just a bool.
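A page request, as used by the DMA example later in this section, carries a read/write indication, a Last flag, a Page Request Group Index (PRGI), and the page address (address bits 63:12). The C sketch below splits a host buffer into one request per 4 KiB page and sets the Last flag only on the final request of the group. The struct is a hypothetical stand-in for pcie_prs_page_request_t. The sketch also derives the page count from the address span, whereas the DMA example computes it from the number of STUs.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the pcie_prs_page_request_t fields used later. */
typedef struct {
    unsigned r;          /* read access requested */
    unsigned w;          /* write access requested */
    unsigned l;          /* last request in the page request group */
    unsigned prgi;       /* Page Request Group Index */
    uint64_t page_addr;  /* address bits [63:12] */
} page_req_t;

/* One request per 4 KiB page touched by [host_addr, host_addr + len).
   Returns the number of requests, or -1 if len is 0 or out[] is too small. */
static int build_page_requests(uint64_t host_addr, uint64_t len, int is_read,
                               unsigned prgi, page_req_t *out, int max)
{
    if (len == 0)
        return -1;
    uint64_t first = host_addr >> 12;
    uint64_t last = (host_addr + len - 1) >> 12;
    uint64_t count = last - first + 1;
    if (max < 0 || count > (uint64_t)max)
        return -1;
    for (uint64_t i = 0; i < count; i++) {
        out[i].r = is_read ? 1 : 0;
        out[i].w = is_read ? 0 : 1;
        out[i].l = (i == count - 1) ? 1 : 0;
        out[i].prgi = prgi;
        out[i].page_addr = first + i;
    }
    return (int)count;
}
```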

The Root Complex can instantiate template handling_prs_messages to start accepting

PRS Page Request and PRS Stop Marker messages. It has to override method

page_request_receive to include the logic to manage Page Request and Stop Marker

messages. The instantiation of template handling_prs_messages

has to be done under the port that inherits the message_port template.

For Root Complexes inheriting template pcie_bridge the port is defined

as port message. See chapter Bridge and Type 1 templates

for definitions of these templates. The Root Complex can respond to the

Page requests by using method page_group_response which is part of template

handling_prs_messages.

For Endpoints, template prs_capability instantiates all PRS logic and registers provided by the PCIe library. See chapter Extended Capabilities templates for the template and its method definitions.

Figure 17. Example of DMA Endpoint.

dml 1.4;

device sample_pcie_ats_prs_dma;

param classname = "sample-pcie-ats-prs-dma";

param desc = "sample PCIe Endpoint utilizing ATS and PRS for DMA";

param documentation = "DMA endpoint with eight concurrent channels."

+ " Each DMA channel starts with allocating the necessary pages"

+ " using PRS. The DMA then performs an ATS translation followed"

+ " by the actual DMA operating on the translated addresses."

+ " After the DMA is finished it issues a stop marker message to the TA"

+ " to free up the pages.";

param pcie_version = 6.0;

import "pcie/common.dml";

is pcie_endpoint;

param NBR_CHANNELS = 8;

method umin(uint64 a, uint64 b) -> (uint64) {

return a < b ? a : b;

}

connect device_memory is (map_target) {

param documentation = "Memory in device endpoint";

param configuration = "required";

}

connect irq_dma_done[i < NBR_CHANNELS] is signal_connect {

param documentation = "Interrupt signal raised by DMA channel"

+ " when it is finished";

}

bank pcie_config {

register capabilities_ptr {

param init_val = 0x40;

}

is defining_pm_capability;

param pm_offset = capabilities_ptr.init_val;

param pm_next_ptr = pm_offset + 0x10;

is defining_exp_capability;

param exp_offset = pm_next_ptr;

param exp_next_ptr = 0x0;

param exp_dp_type = PCIE_DP_Type_EP;

is defining_ats_capability;

param ats_offset = 0x100;

param ats_next_ptr = ats_offset + 0x100;

is defining_pasid_capability;

param pasid_offset = ats_next_ptr;

param pasid_next_ptr = pasid_offset + 0x20;

group pasid {

register capability {

field trwps { param init_val = 1; }

// pasid in range 0 - 0xfffff

field mpw { param init_val = 0x14; }

}

}

is defining_prs_capability;

param prs_offset = pasid_next_ptr;

param prs_next_ptr = 0;

group prs {

register status {

field pasid { param init_val = 1; }

}

method page_response_received(transaction_t *t,

uint64 addr) -> (bool) {

if (ATOM_transaction_pcie_prs_page_group_response(t) != NULL

&& ATOM_transaction_pcie_pasid(t) != NULL) {

local pcie_prs_page_group_response_t msg = {

.u16 = ATOM_get_transaction_pcie_prs_page_group_response(t),

...

};

local pcie_pasid_info_t pasid = {

.u32 = ATOM_get_transaction_pcie_pasid(t),

...

};

for (local int i = 0; i < dma.len; i++)

if (dma[i].prs_page_response(msg, pasid))

return true;

return false;

} else {

log error:

"%s, Expected atoms pcie_prs_page_group_response"

+ " and pcie_pasid", this.qname;

return false;

}

}

}

}

bank regs {

param register_size = 8;

group channel[i < NBR_CHANNELS ] {

register dma_dev @ 0x0 + i * 0x30 {

field addr @ [63:12] "64-bit device address for DMA";

}

register dma_host @ 0x8 + i * 0x30 {

field addr @ [63:12] "64-bit host address for DMA";

}

register dma_len @ 0x10 + i * 0x30 {

param documentation = "Max 64k for single DMA transfer";

field len @ [15:0];

}

register dma_start @ 0x18 + i * 0x30 {

field start @ [31] "Start DMA" {

is write;

method write(uint64 value) {

if (value == 1) {

if (dma_status.busy.get() != 0) {

log spec_viol: "Cannot start DMA while busy!";

return;

}

local uint64 haddr = dma_host.addr.val << 12;

local int lsbit = pcie_config.ats.control.stu.lsbit();

if (haddr[lsbit - 1:0] != 0) {

log spec_viol:

"DMA host address must be ATS STU aligned";

return;

}

dma[i].start(haddr,

dma_dev.addr.val << 12,

dma_len.len.val,

pasid.pasid.val,

rnw.val ? true : false);

}

}

}

field rnw @ [0] "DMA Read from host = 1, Write to host = 0";

}

register dma_status @ 0x20 + i * 0x30 {

field busy @ [0] "DMA is busy with ongoing transfer" {

is (read, get);

method read() -> (uint64) {

return get();

}

method get() -> (uint64) {

return dma[i].pending ? 1 : 0;

}

}

}

register pasid @ 0x28 + i * 0x30 {

field pasid @ [19:0] "PASID to be used for DMA transfer";

}

}

}

group dma[n < NBR_CHANNELS] {

saved bool pending;

saved uint64 host_addr;

saved uint64 dev_addr;

saved uint32 size;

saved bool is_read;

saved uint20 pasid;

method start(uint64 host_addr,

uint64 dev_addr,

uint32 size,

uint20 pasid_value,

bool is_read) {

assert(!pending);

this.pending = true;

this.host_addr = host_addr;

this.dev_addr = dev_addr;

this.size = size;

this.is_read = is_read;

this.pasid = pasid_value;

this.request_pages();

}

method request_pages() {

local int nbr_stus =

pcie_config.ats.translation_size_to_entries(size);

local uint64 stu_size = pcie_config.ats.control.stu.size();

local int nbr_pages = nbr_stus * stu_size / 4096;

for (local int i = 0; i < nbr_pages; i++) {

local pcie_prs_page_request_t request = {

.field = {

.r = is_read ? 1 : 0,

.w = is_read ? 0 : 1,

.l = i == (nbr_pages - 1) ? 1 : 0,

.prgi = n,

.page_addr = (this.host_addr + (i * 4096)) >> 12,

},

...

};

local pcie_pasid_info_t p = { .field = { .pasid = this.pasid, ...}, ...};

local pcie_error_t ret = pcie_config.prs.page_request(request, &p);

if (ret != PCIE_Error_No_Error) {

log error:

"%s PRS request denied %s", this.qname, pcie_error_name(ret);

// Release the channel so that software can start a new DMA

this.pending = false;

return;

}

}

}

method prs_page_response(pcie_prs_page_group_response_t msg,

pcie_pasid_info_t p) -> (bool) {

if (!this.pending)

return false;

if (p.field.pasid == this.pasid && msg.field.prgi == n) {

if (msg.field.response_code == PCIE_PRS_Response_Success) {

after: try_ats_and_dma();

} else {

log info, 1: "Page response indicated error: %s",

pcie_config.prs.response_code_name(msg.field.response_code);

this.pending = false;

}

return true;

} else {

return false;

}

}

method try_ats_and_dma() {

local int nbr_entries =

pcie_config.ats.translation_size_to_entries(size);

local pcie_ats_translation_completion_entry_t entries[nbr_entries];

local bool no_write = is_read;

// For the DMA the PRS operate on page size, having an STU

// greater than 4096 can lead to the follow up ATS request

// to be shifted to align with STU, thus pages not allocated by PRS

// can here be requested which is not desired. To prevent this

// the DMA must align its host address to STU to ensure 1:1 mapping

// between PRS requests and ATS translation requests

local int stu_lsb = pcie_config.ats.control.stu.lsbit();

assert(host_addr[stu_lsb - 1:0] == 0);

local pcie_pasid_info_t p = { .field = { .pasid = this.pasid, ...}, ...};

local pcie_error_t ret;

local int valid_entries;

(ret, valid_entries) =

pcie_config.ats.translation_request(host_addr,

entries,

nbr_entries,

&p,

no_write,

false);

if (ret != PCIE_Error_No_Error) {

log error:

"%s ATS request denied %s", this.qname, pcie_error_name(ret);

// Release the pages allocated through PRS and the channel

free_pages();

this.pending = false;

return;

}

for (local int i = 0; i < valid_entries; i++) {

local (uint64 translated_addr, uint64 txl_size) =

pcie_config.ats.get_translation_range(entries[i]);

local uint64 dma_size = umin(txl_size, this.size);

try {

do_dma(translated_addr, dev_addr, dma_size, is_read);

} catch {

log error:

"DMA %s failed for ATS address 0x%08X, device address: 0x%08X",

is_read ? "Read" : "Write", translated_addr, dev_addr;

// Release the pages allocated through PRS and the channel

free_pages();

this.pending = false;

return;

}

this.size -= dma_size;

this.dev_addr += dma_size;

}

assert(this.size == 0);

free_pages();

this.pending = false;

irq_dma_done[n].set_level(1);

irq_dma_done[n].set_level(0);

}

method do_dma(uint64 translated_addr,

uint64 dev_addr,

uint32 size,

bool is_read) throws {

if (is_read)

dma_read(translated_addr, dev_addr, size);

else

dma_write(translated_addr, dev_addr, size);

}

method dma_write(uint64 translated_addr,

uint64 dev_addr,

uint32 size) throws {

local uint8 data[size];

local bytes_t buf = { .data = data, .len = size };

device_memory.read_bytes(dev_addr, size, data);

local pcie_pasid_info_t p = { .field = { .pasid = this.pasid, ...}, ...};

local pcie_error_t ret;

ret = pcie_config.ats.memory_write_bytes(buf,

translated_addr,

PCIE_AT_Translated,

&p);

if (ret != PCIE_Error_No_Error)

throw;

}

method dma_read(uint64 translated_addr,

uint64 dev_addr,

uint32 size) throws {

local uint8 data[size];

local buffer_t buf = { .data = data, .len = size };

local pcie_pasid_info_t p = { .field = { .pasid = this.pasid, ...}, ...};

local pcie_error_t ret;

ret = pcie_config.ats.memory_read_buf(buf,

translated_addr,

PCIE_AT_Translated,

&p);

if (ret != PCIE_Error_No_Error)

throw;

device_memory.write_bytes(dev_addr, size, data);

}

method free_pages() {

local pcie_pasid_info_t p = { .field = { .pasid = this.pasid, ...}, ...};

local pcie_error_t ret = pcie_config.prs.send_stop_marker(&p);

if (ret != PCIE_Error_No_Error) {

log error: "Failed to free pages for PASID %d: %s",

this.pasid, pcie_error_name(ret);

}

}

}
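The DMA example above depends on the Smallest Translation Unit (STU) from the ATS Control register, which defines a translation unit of 2^(STU + 12) bytes. The C sketch below reproduces the arithmetic behind the alignment check in dma_start and the page count in request_pages. It assumes that stu.lsbit() corresponds to STU + 12 and that stu.size() corresponds to 2^(STU + 12). The helper names are hypothetical. stus_for_size is only a stand-in for translation_size_to_entries, whose exact behavior is not documented here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Smallest Translation Unit in bytes for a given STU field value. */
static uint64_t stu_size_bytes(unsigned stu)
{
    return (uint64_t)1 << (stu + 12);
}

/* Same check as haddr[lsbit - 1:0] == 0, with lsbit = STU + 12. */
static bool stu_aligned(uint64_t addr, unsigned stu)
{
    return (addr & (stu_size_bytes(stu) - 1)) == 0;
}

/* Hypothetical: STUs needed to cover size bytes from an aligned address. */
static uint64_t stus_for_size(uint64_t size, unsigned stu)
{
    uint64_t s = stu_size_bytes(stu);
    return (size + s - 1) / s;
}

/* 4 KiB pages covered by n STUs (nbr_stus * stu_size / 4096). */
static uint64_t pages_for_stus(uint64_t n, unsigned stu)
{
    return n * stu_size_bytes(stu) / 4096;
}
```

For example, with STU = 2 (16 KiB), a 20 KiB transfer from an STU-aligned host address spans 2 STUs, which the DMA requests as 8 pages through PRS.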

The sample device below implements an ATC using the ATS framework in the library. The device_memory_request port handles incoming untranslated transactions from the device and forwards them upstream as ATS-translated transactions.

Implemented features:

- Internal cache to store previous ATS translation completions.

- Different access rights for read/write/execute transactions.

- Any STU size set by the ATS capability control register (pcie_config.ats.control).

- Translations consisting of multiple STUs and completion entries.

- Contiguous virtual address space mapped to separate physical STUs.

- ATS Translation Invalidation

- Checkpointing

- PASID

Note: The current implementation does not support checkpointing of deferred transactions.

Example use cases:

- Endpoint Device with DMA

- Accelerator accessing host memory
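The minimal C model below illustrates the internal cache and invalidation features listed above. It is a fixed-size table of untranslated-to-translated ranges with three operations: a lookup that applies the offset within a range, an insert, and an eviction that drops every entry overlapping an invalidated range. It is a hypothetical sketch and is not derived from the Figure 18 source. It leaves out PASID, access rights, STU handling, and any replacement policy.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define ATC_ENTRIES 16

/* One cached translation: [va, va + size) maps to [pa, pa + size). */
typedef struct {
    bool valid;
    uint64_t va;
    uint64_t pa;
    uint64_t size;
} atc_entry_t;

typedef struct {
    atc_entry_t e[ATC_ENTRIES];
} atc_t;

/* Store a translation completion; false if full (a real ATC would evict). */
static bool atc_insert(atc_t *c, uint64_t va, uint64_t pa, uint64_t size)
{
    for (int i = 0; i < ATC_ENTRIES; i++) {
        if (!c->e[i].valid) {
            c->e[i] = (atc_entry_t){ true, va, pa, size };
            return true;
        }
    }
    return false;
}

/* Translate va if a cached range covers it. */
static bool atc_lookup(const atc_t *c, uint64_t va, uint64_t *pa)
{
    for (int i = 0; i < ATC_ENTRIES; i++) {
        const atc_entry_t *x = &c->e[i];
        if (x->valid && va >= x->va && va - x->va < x->size) {
            *pa = x->pa + (va - x->va);
            return true;
        }
    }
    return false;
}

/* Invalidation: drop every entry overlapping [addr, addr + size). */
static void atc_evict(atc_t *c, uint64_t addr, uint64_t size)
{
    for (int i = 0; i < ATC_ENTRIES; i++) {
        atc_entry_t *x = &c->e[i];
        if (x->valid && x->va < addr + size && addr < x->va + x->size)
            x->valid = false;
    }
}
```

In a Simics model, evicting entries is not enough on its own. As the comment in Figure 18 points out, SIM_translation_changed must also be called so that Simics core drops any translations it has cached.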

Figure 18. Example of Endpoint Implementing an Address Translation Cache.

dml 1.4;

device sample_pcie_ats_endpoint;

param classname = "sample-pcie-ats-endpoint";

param desc = "sample PCIe Endpoint with an ATS Cache";

param pcie_version = 6.0;

import "simics/util/interval-set.dml";

import "pcie/common.dml";

is pcie_endpoint;

attribute PASID is (uint64_attr);

method umax(uint64 a, uint64 b) -> (uint64) {

return a > b ? a : b;

}

bank pcie_config {

register capabilities_ptr {

param init_val = 0x40;

}

is defining_pm_capability;

param pm_offset = capabilities_ptr.init_val;

param pm_next_ptr = pm_offset + 0x10;

is defining_exp_capability;

param exp_offset = pm_next_ptr;

param exp_next_ptr = 0x0;

param exp_dp_type = PCIE_DP_Type_EP;

is defining_ats_capability;

param ats_offset = 0x100;

param ats_next_ptr = ats_offset + 0x100;

    group ats {
        // Called by the PCIe library when an ATS Invalidation Request
        // message is received from the Translation Agent.
        method invalidate_received(transaction_t *t,
                                   uint64 dev_addr) -> (bool) {
            local pcie_ats_invalidate_request_payload_t payload;
            payload.u64 = SIM_get_transaction_value_le(t);
            local uint8 itag = ATOM_get_transaction_pcie_ats_invalidate_request_itag(t);
            local uint16 requester_id =
                ATOM_get_transaction_pcie_requester_id(t);
            local (uint64 addr, uint64 size) = this.get_invalidation_range(payload);
            cache.evict(addr, size);
            // The Simics core must be told that the translation has been
            // revoked; see the documentation of SIM_translation_changed
            // for details.
            SIM_translation_changed(device_memory_request.obj);
            // Acknowledge the ITag by setting its bit in the ITag Vector.
            after: this.respond(requester_id, 1 << itag);
            return true;
        }

        method respond(uint16 requester_id, uint32 itag_vector) {
            // Calls a helper method in the PCIe library to send an
            // Invalidation Completion message to the Translation Agent.
            local pcie_error_t ret = this.invalidate_complete(requester_id, itag_vector);
            if (ret != PCIE_Error_No_Error) {
                log error: "%s failed: %s",
                    pcie_message_type_name(PCIE_ATS_Invalidate_Completion),
                    pcie_error_name(ret);
            }
        }
    }

    is defining_pasid_capability;
    param pasid_offset = ats_next_ptr;
    param pasid_next_ptr = 0;

    group pasid {
        register capability {
            field eps { param init_val = 1; }   // Execute Permission Supported
            field pms { param init_val = 1; }   // Privileged Mode Supported
            field trwps { param init_val = 1; } // Translated Requests with PASID Supported
            // Max PASID Width of 16 bits: PASID values 0 - 0xffff
            field mpw { param init_val = 0x10; }
        }
    }
}

// The endpoint device uses this port for untranslated memory requests,
// which the ATC tries to convert to translated memory requests
// before forwarding them upstream.
port device_memory_request {
    implement transaction_translator {
        method translate(uint64 addr,
                         access_t access,
                         transaction_t *prev,
                         exception_type_t (*callback)(translation_t txl,
                                                      transaction_t *tx,
                                                      cbdata_call_t cbd),
                         cbdata_register_t cbdata) -> (exception_type_t) {
            local translation_t txl;
            local bool hit;
            local (uint64 base, uint64 start, uint64 size);
            local pcie_ats_translation_completion_entry_t te;
            (hit, base, start, size, te) = lookup_address(addr, prev, access);
            txl.base = base;
            txl.start = start;
            txl.size = size;

            if (!hit) {
                assert(txl.base == txl.start);
                log info, 4:
                    "Missed translation in range 0x%08X-0x%08X access=0x%x",
                    txl.base, txl.base + txl.size - 1, access;
                return callback(txl, prev, cbdata);
            }

            local transaction_t t;
            local bool add_pasid;
            local pcie_pasid_info_t pasid;
            // A translated request may carry a PASID only if field trwpe is
            // set; an untranslated request does not require that bit.
            if (pcie_config.pasid.control.pe.val == 1 &&
                (te.field.u == 1 || pcie_config.pasid.control.trwpe.val == 1)) {
                add_pasid = true;
                pasid.field.pasid = PASID.val;
                // Execute Requested may only be set when epe is enabled.
                if (pcie_config.pasid.control.epe.val)
                    pasid.field.exe = (access & Sim_Access_Execute) != 0 ? 1 : 0;
            }

            // te.field.u set: the Translation Agent only permits
            // untranslated access to this range.
            local atom_t atoms[5] = {
                ATOM_pcie_type(PCIE_Type_Mem),
                ATOM_pcie_requester_id(pcie_config.get_device_id()),
                ATOM_pcie_at(te.field.u == 1 ? PCIE_AT_Untranslated : PCIE_AT_Translated),
                add_pasid ? ATOM_pcie_pasid(pasid.u32) : ATOM_list_end(0),
                ATOM_list_end(0),
            };
            t.prev = prev;
            t.atoms = atoms;
            txl.target = upstream_target.map_target;
            log info, 3: "Translating range 0x%08X-0x%08X to 0x%08X-0x%08X",
                txl.base, txl.base + txl.size - 1,
                txl.start, txl.start + txl.size - 1;
            return callback(txl, &t, cbdata);
        }
    }
}

// When set, ATS Translation Requests carry an additional pcie_ecs atom.
attribute extra_atom_in_translation is (bool_attr);

// Sends an ATS Translation Request to the Translation Agent.
// Uses several helper methods defined in the ATS capability template.
method do_ats_request(uint64 addr,
                      uint64 size,
                      access_t access) -> (exception_type_t) {
    // Include the offset of addr within its 4 KB page.
    local uint64 atc_size = size + addr[11:0];
    local int nbr_entries =
        pcie_config.ats.translation_size_to_entries(atc_size);
    local pcie_ats_translation_completion_entry_t entries[nbr_entries];
    local bool no_write = (access & Sim_Access_Write) == 0;
    local pcie_error_t ret;
    local int valid_entries;
    // Align the request address down to the STU boundary.
    local int stu_lsb = pcie_config.ats.control.stu.lsbit();
    local uint64 base_addr = addr[63:stu_lsb] << stu_lsb;
    local bool add_pasid;
    local pcie_pasid_info_t pasid;

    if (pcie_config.pasid.control.pe.val) {
        add_pasid = true;
        pasid.field.pasid = PASID.val;
        if (pcie_config.pasid.control.epe.val)
            pasid.field.exe = (access & Sim_Access_Execute) != 0 ? 1 : 0;
    }

    if (extra_atom_in_translation.val) {
        local atom_t extra_atoms[2] = {
            ATOM_pcie_ecs(PCIE_ECS_SIG_OS),
            ATOM_list_end(0)
        };
        (ret, valid_entries) = pcie_config.ats.translation_request_custom(
            base_addr, entries, nbr_entries, add_pasid ? &pasid : NULL,
            no_write, false, extra_atoms);
    } else {
        (ret, valid_entries) = pcie_config.ats.translation_request(
            base_addr, entries, nbr_entries, add_pasid ? &pasid : NULL,
            no_write, false);
    }

    switch (ret) {
    case PCIE_Error_No_Error:
        // Cache each completion entry; consecutive entries cover
        // consecutive untranslated ranges starting at base_addr.
        for (local int i = 0; i < valid_entries; i++) {
            local (uint64 start, uint64 txl_size) =
                pcie_config.ats.get_translation_range(entries[i]);
            cache.insert(base_addr, txl_size, entries[i]);
            base_addr += txl_size;
        }
        return Sim_PE_No_Exception;
    case PCIE_Error_Unsupported_Request:
        log info, 1:
            "%s ATS request denied %s",
            this.qname, pcie_error_name(ret);
        return Sim_PE_IO_Not_Taken;
    default:
        log error:
            "%s error in ATS translation request %s",
            this.qname, pcie_error_name(ret);
        return Sim_PE_IO_Not_Taken;
    }
}

// Checks the internal ATC for a translation. On a miss, it issues an
// ATS Translation Request followed by a second cache lookup.
method lookup_address(uint64 addr, transaction_t *t, access_t access) ->
    (bool,                                   // Hit
     uint64,                                 // Base
     uint64,                                 // Translated address
     uint64,                                 // Size
     pcie_ats_translation_completion_entry_t // TA completion entry
    ) {
    local uint64 lookup_size = umax(SIM_transaction_size(t),
                                    pcie_config.ats.control.stu.size());
    local (bool hit,
           uint64 base,
           uint64 start,
           uint64 size,
           pcie_ats_translation_completion_entry_t te) = cache.lookup(addr, access);
    if (!hit) { // Try to do an ATS request
        // Inquiry accesses must not have side effects, so no request is sent.
        if (SIM_transaction_is_inquiry(t))
            return (false, base, start, size, te);
        local exception_type_t v = do_ats_request(addr, lookup_size, access);
        if (v != Sim_PE_No_Exception)
            return (false, base, start, size, te);
        (hit, base, start, size, te) = cache.lookup(addr, access);
        assert(hit);
    }
    return (true, base, start, size, te);
}

// Sample cache showcasing the basics of implementing ATS with the PCIe library.
// Uses the interval library in the Simics core.
group cache is (init) {
    session interval_set_t map;

    method init() {
        init_interval(&map, 1);
    }

    attribute storage {
        param documentation = "Attribute to support checkpointing of the AT Cache";