Model Builder User's Guide

This document shows how to model your hardware system in Simics and how to extend Simics with new functionality. This document does not stand alone. The Simics and DML reference manuals are invaluable for the details. It is not intended to be read from start to finish. After reading the introduction part feel free to read about the topics which interest you.

You need the Simics Model Builder product to develop models and extensions for Simics.

This document is divided into several parts:

- Introduction and Preparation

This part contains an introduction to modeling virtual system and helps you prepare for modeling by telling you how to set up your system for Simics Model Builder. The introduction to modeling is recommended for all readers. This provides an overview of the way you model your hardware in Simics and how to map hardware concepts to Simics concepts.

- Device Modeling

This part describes the device modeling concepts and introduces DML, the tool used for writing device models. DML is designed to device modeling easier and remove a lot of the repetitive and error prone work you have to do if you develop your device model in for example C. It also includes chapters on writing new commands for the Simics command line interface and how to define new interfaces between device models.

- Modeling Common Hardware Components

This part builds on the previous part and shows you how to model some common kinds of devices in Simics.

- Creating Virtual Systems

This part shows you how to assemble the parts of a virtual system into a complete system. It also shows you how to deal with memory and address spaces in Simics. This is one of the most abstract parts of modeling a system in Simics and tries to map how software sees the hardware.

- Simics API

This part explains the Simics API; its major concepts, how it is structured, how it evolves, and some rules and conventions for how to use it. It also explains how the API interacts with Simics's multithreading support, and how to make your modules safe to use in multithreaded simulations.

This document focuses on modeling the behavior of a system. Read the Analyzer User's Guide for information on modeling timing and caches in Simics.

The introduction chapter you are reading describes the concepts of system modeling and how they map to modeling in Simics. It also discusses the kind of information that must be gathered to model a system. After reading it you should have a basic understanding of what modeling is and what information about the hardware you need to gather.

Simics provides a full systems simulation environment for CPUs, boards, platforms, racks and even very complex heterogeneous systems networked together. A model provides the software running on a virtual platform within the Simics environment with a representation of the hardware interface that is exposed to the software, i.e., a "programmer's view".

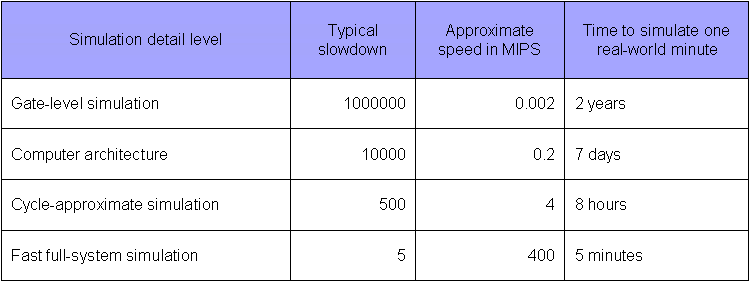

When creating models, it is important to choose the right level of abstraction. A comparison can be made with the field of physics, where different models are used for solving different types of problems. For example, on the sub-atomic scale quantum mechanics is used, to compute the motion of Earth-bound objects classical mechanics is used, and for objects moving at high speed relativistic models are used. Choosing the wrong model for a problem will either make it intractable or will not provide enough detail to yield correct results. The same goes for models of digital systems where a number of different abstraction levels are typically used. As more details are added to the simulation, the slower it will run and the more costly it will be to develop. There exists a trade off between simulation speed and model fidelity. Figure 1 shows the characteristics for some commonly used abstractions. Note that the figure serves as an illustration and actual performance may vary substantially even within a specific abstraction.

Figure 1. Simulation Detail vs Simulation Speed.

A Simics functional model presents the software with a functionally accurate view into the virtualized hardware. Functional models are transaction based, applying a blackbox/transfer function approach where it is not important to duplicate how a specific result is obtained, but to duplicate what the exact transfer function will result in. For timing-critical sections (e.g., device drivers response to interrupts, etc.), Simics provides the ability to insert delays that can be fine-tuned to model not only what data is provided, but also when that data is provided to the remainder of the system.

Using this approach, a functional model can be built based solely on hardware design and interface documents combined with some knowledge of the expectations and requirements of the operating system(s) running on top of that hardware. When building a functional model, there is no need to have detailed knowledge on the inner workings of the platform or device.

Today’s systems consist of many components including multi-core CPUs, multiple platforms, accelerators, racks, and heterogeneous combinations of CPU, DSP, ASIC and FPGA all connected via standard network, or backplane communication mechanisms. Although many simulation environments and the models which they run are specific to a specific CPU core or maybe a System on Chip (SoC), these solutions fail to support the complete system. Simics support for heterogeneous models and distributed host simulation processing provides the ability to model very complex systems while retaining a level of performance that is usable for real software development tasks.

A Simics system model may consist of tens, hundreds or thousands of smaller, independent models (CPU, platform, rack, etc.), any one of which can be created by an independent engineer and/or in different modeling languages. These smaller models, standing alone from a functional perspective, are connected together using Simics component system and scripting capability, or with one of Simics communications network infrastructure models (TCP/IP, etc.) in order to provide the full systems model and simulation. This sub-element independence and flexibility allows a full system model to be created from disparate components and by leveraging in-house and 3rd party expertise.

A typical customer’s Simics model team encompasses two key areas of knowledge.

- Functional model knowledge – possessing the ability to translate a hardware block diagram and design documents into the DML language

- Simics scripting and API knowledge – possessing the ability to connect smaller model components and devices as needed to form a larger system using Simics command API, Python or C/C++

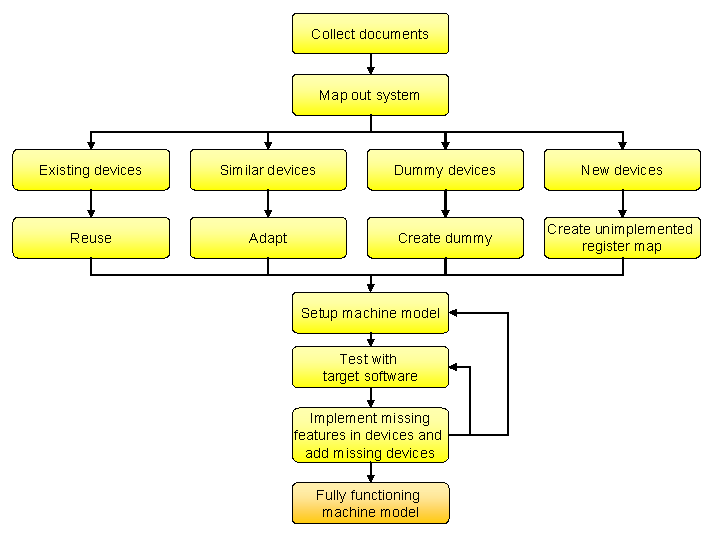

The processes when virtualizing a new system roughly follows this outline:

- Create a list of devices and processors that comprise the system by collecting and reading design specifications, programmers reference manuals, and other relevant documents.

- Based on an analysis of the expected system usage, make a preliminary decision on the necessary level of abstraction necessary for each device. Can it be ignored, stubbed, or does it need to be fully implemented? (e.g., does the RTOS use the MMU? If not, don't model it.)

- Reuse existing device models and processor models from the Simics standard library. The library makes it faster to produce an initial model, since models for many common standard parts already exist. Reuse often means adapting an existing model of a similar device, which is much faster then writing a new model from scratch.

- Create initial models of any remaining devices using the DML tool. Initially, ignore as much functionality as possible to quickly get a working basic model, but write tests for the parts you do implement to make sure they behave as intended. This also helps catch errors which may get introduced when you add more parts to the model.

- Test the new system model with software that should run on the model, and add (or stub out) any missing functionality or devices required by the software. For new devices where no software exist yet, create independent test cases.

- Iterate until the model runs the requisite software.

This methodology is the classic iterative method, where you test the model early and often in order to explore the precise requirements. Historically, this had many names, from spiral model to agile methods or test-driven development.

The goal is to obtain a model which runs the required software, but which implements only the hardware functionality necessary to run the software and exercise its interesting behaviors. Over time, more functionality can be added to the model.

Often, it is possible to start using the new virtual system almost immediately after the project start. Even a basic system that does not yet contain all components can be used to begin software development. For example, a boot loader typically requires less virtual hardware to be in place than a full operating system port. Over time, more devices will be added to the virtual system, and it will evolve towards the final model.

With Simics's modular approach, it is always possible to go back and improve any element of the model.

Figure 2. The system modeling workflow.

In Transaction-Level Device Modeling (TLM), each interaction with a device, typically, a processor reading from or writing to the registers of the devices, is handled at once: the device is presented with a request, computes the reply, and returns it in a single function call. This is far more efficient and easier to program than modeling the details of how bits and bytes are moved across interconnects, cycle-by-cycle.

In general, immediate non-pipelined completion of an operation is sufficient for modeling device's behavior. When the device driver expects a delay, that delay must be modeled, however the specific action or activity that leads to the delay does not need to be modeled. A classic example is a device that uses a hardware interrupt to signal command completion. The driver expects to be able to run code to prepare for the interrupt after writing the command to the device. In a transactional model, the device model must include a delay between the completion of the command and the interrupt signaling the completion to the system. In this manner, the device local effects of a transaction are computed immediately, but notification of completion is deferred until the proper time has elapsed.

Transaction-level models are typically implemented using the DML tool. DML provides a C-like programming language designed specifically for this type of modeling. Although device models can be written directly in C, using DML reduces development time and makes the code more readable and maintainable, and reduces the risk of making errors.

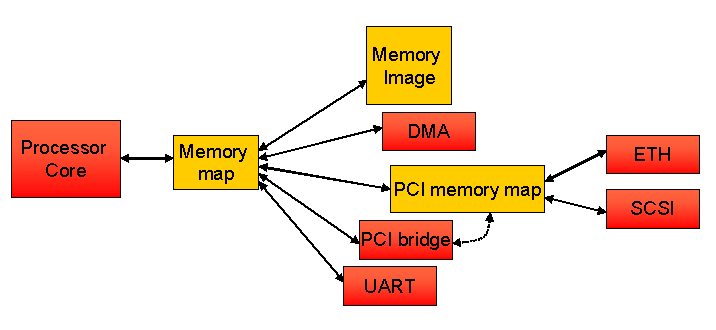

The memory map is a fundamental service provided by the Simics framework, and is a core component in enabling very fast simulation. The address space of a processor is modeled by Simics as a memory map. This approach allows the processor to directly access memories and devices without involving any explicit models of buses. This approach provides a functionally correct memory interface to the software, since software is unaware of how the data gets from the memory to the processor, and results in fast instruction execution and data access. With this approach, Simics's core memory handling is able to provide a virtual system with very fast read/write access to RAM and read access to FLASH and ROM without the requirement to involve any explicit device models.

Even when a Simics model includes a memory controller it is only used to manipulate, initialize or control the underlying activity of the Simics memory system and not as a component which is directly involved in accessing memory.

Simics also provides image objects to manage data for devices with large amounts of memory such as RAM, ROM, Flash, and disks. Image features include lazy allocation of host memory so that only data which is actually used is loaded. This allows Simics to simulate target memories which are larger than the physical memory of the host machine. Images also support incremental checkpoints of the system state.

For the simulation of PCI and similar interfaces where there are several levels of addressing being used, Simics uses subordinate memory maps cascaded from the primary memory map. This makes it easy to translate real-system mappings into the Simics system configuration. This method allows PCI models in Simics to support software probing and configuration, just like real PCI systems. The software setup is reflected in the PCI memory map, and device accesses are kept very efficient.

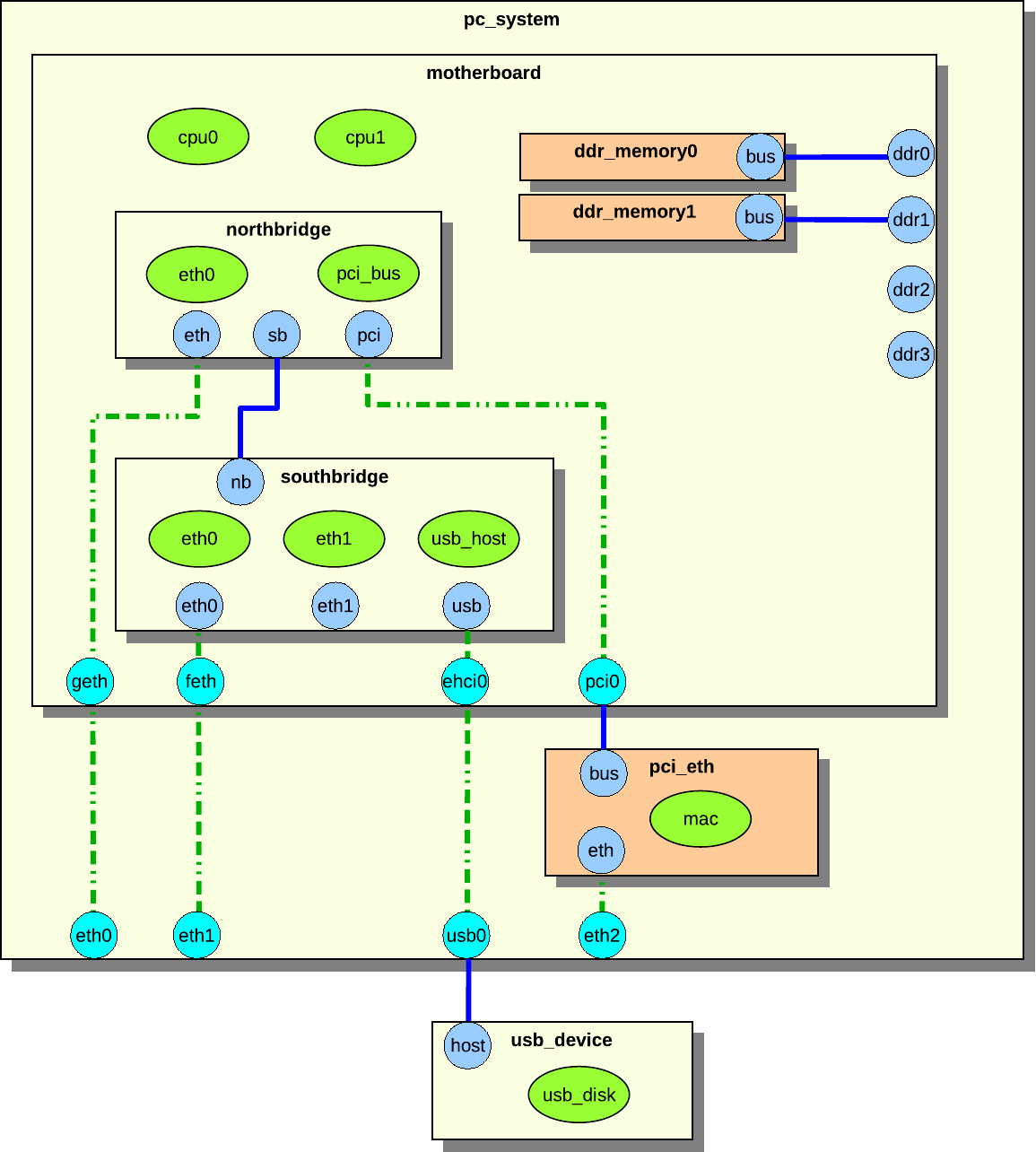

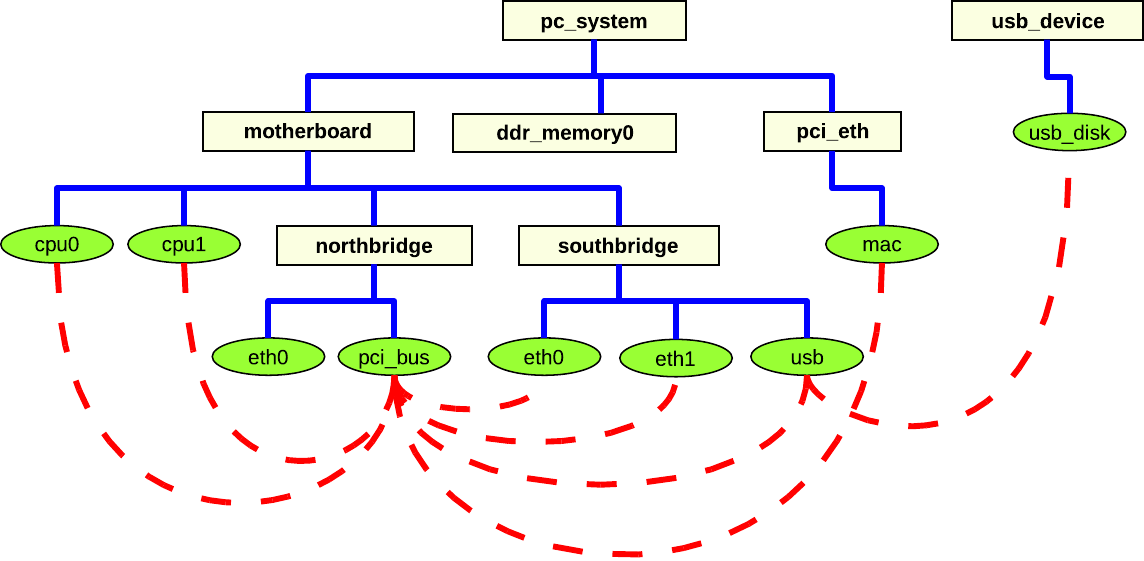

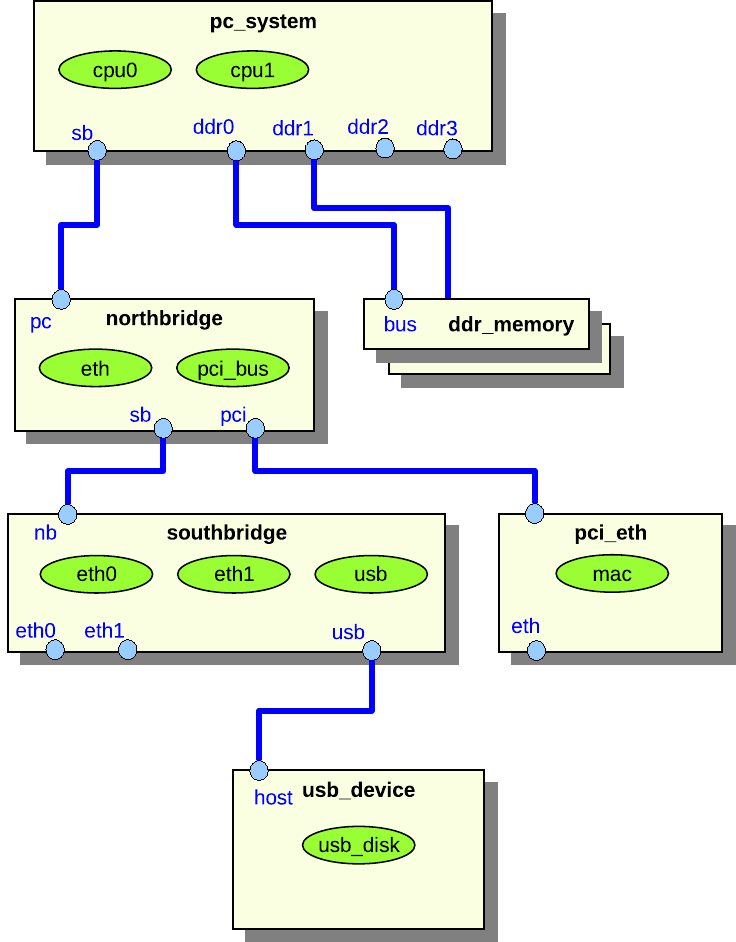

Figure 3. Mapping example.

The illustration in figure 3 shows a simple example of how Simics uses memory spaces to map the processor's memory range to its immediately connected memory and devices, as well as across a PCI system to Ethernet and SCSI controllers. The PCI bridge will configure the PCI memory map when the software configures the PCI system, but will not be invoked in actual accesses to PCI devices.

The DML tool has been developed to support high performance and high level abstraction for all system devices with the exception of processors. When running simulation with functional models of devices the vast majority of host processing is used to simulate the target processors. As a result processor models (sometimes referred to as Instruction Set Simulators (ISS)) provided by Simics teams are highly optimized and tightly integrated into Simics. The result is that, at peak speeds, Simics can run billions of simulated instructions per second.

In order to run all the software of a system, the processor must implement both the user-level and supervisor-level interfaces of the processors, as well as the memory-management unit and various low-level machine registers. Anything that is readable or writable from the software side has to be modeled. Simics provides a large library of fast and functionally complete and correct processor models for most common embedded and desktop architectures including PowerPC, MIPS, ARM, SPARC, and x86.

For customers with pre-existing processor models or who desire to develop their own processor models, Simics provides an API that supports the integration of those models into the Simics framework. See the Processor Model Integration Guide for details.

Recall that a full system model in Simics is comprised of multiple, smaller sub-models and that Simics provides concurrent support for multiple modeling languages. Thus, Simics provides developers with the ability to run or reuse models that were not specifically written for Simics, such as SystemC* models, custom C/C++ models, etc. The most general way to integrate such a model into a Simics simulation is to write a wrapper for the other model to translate its APIs to the Simics framework's C/C++ API.

This approach can be used for almost any type of model, including models designed to run at similar abstraction levels as regular Simics models, or other types of models such as physics or hardware description language (HDL) models. If the abstraction level or APIs used by the foreign model is very different from that of Simics, it may require advanced techniques to do such an integration. Please contact your Simics provider to get more information about options and next steps.

To enable the integration of unmodified SystemC models into a Simics simulation, use the SystemC Library add-on package. Consult the SystemC Library Programming Guide for details. Please contact your Simics provider to get access to the SystemC Library package.

Simics models take a functional approach to modeling where entire transactions are handled in a single function.

-

Models should focus on the what instead of the how.

- Model details are optimized for the software that will run on those models. Details that are irrelevant to software execution do not need to be modeled.

- Explicit pre-defined device states can be easily provided to the software.

-

Models should be incrementally created to support different phases of the product development life cycle.

- Initial model provides just enough emulation in order for the firmware team to begin their efforts.

-

Functional models can be quickly created and connected together like building blocks.

- The system configuration is separate from device models.

Before starting to build a system model, certain information should be collected. Since obtaining documentation can sometimes take time, it is a good idea to start looking for documentation and information as early as possible in a modeling project. Documents and information that Simics professionals have found to be particularly useful are:

- The system documentation, including block diagrams, describing how the components of the system are connected.

- The Programmers Reference Manuals (PRMs) for each of the main chips, devices, or functional blocks. The PRM goes by many names, such as Programming Reference, User Reference Manual, Technical Reference Manual, User Manual, and Reference Manual.

- The source code of the software such as the BSP (Board Support Package), device drivers, firmware, and operating systems, that manipulates the hardware, if it already exists.

Once you have a basic understanding of the system, you should prioritize the list of components that need to be modeled. The following approach works well for many projects:

- Identify the minimum set of components required to boot the software. Having built a functional core model, it is easy to incrementally add devices to the system. Note that the software may have to be reconfigured to use only part of a system. Alternatively identify an existing Simics reference board model that is similar enough to serve as a baseline.

- Identify the components where the most technical risk lie: both risk in developing the model as well as in the software that manipulates the component. This can be time risk or lack of documentation, i.e., anything that can lead to missing the project goals or deadlines.

- Try to identify the components that have no initial impact on your system, i.e., those that will not be manipulated or used by software. They can either be modeled by a dummy model or not modeled at all.

- Try to identify the memories in the systems that can be handled using Simics default memory modeling. This is done by reading the documentation and identifying RAM, ROM, and flash regions in the physical memory map.

Since the introduction of Simics in 1998, the process of building virtual systems has refined into the following fundamental design principles:

- Follow the hardware

- Follow the software

- Do not model unnecessary detail

- Reuse and adapt existing components

- Develop device models in DML

The overriding goal of Simics is to ensure that the software developed on the simulated system will run on the physical hardware and vice versa. A main design principle for the creation of Simics models for a system should be to follow the structure of the physical hardware closely, while abstracting functionality where possible. This includes variations of the hardware configuration. Thus, all software-visible functions have to be accurately represented in the simulation, and the easiest way to ensure this is to design the simulation model using the same component and communications structure as the physical hardware.

The components to use as the basis for understanding and decomposing the hardware system design are typically entire chips for a board design, and function blocks inside a System-on-a-Chip (SoC). From a Simics perspective, an SoC or a board are really equivalent - they both group a number of devices together with a set of processors, and provide interconnects between them. From a practical perspective, a board often contains some SoC devices, and this leads to a recursive process where the board is broken down into SoCs, and the SoCs into devices. Dividing the SoC helps the reuse of the devices when creating a new SoC.

The starting point is thus the layout of a board and the block diagram for a SoC, as presented by the programmer's reference manuals (PRMs). An important source is the memory map typically found in the PRM for both boards and SoCs, showing how devices are presented to the core processor(s) in the system.

Note that some components might be addressed indirectly and not have their own slot in the memory map. A common example is serial EEPROMs accessed over I2C from an I2C controller. The EEPROM is not visible in the memory map, but it is still accessible to the processor and needs to be considered in the model.

The ultimate goal is to have a list of the devices that make up a system and a map of how they are interconnected.

The interconnections between devices also need to be considered in order to have a good breakdown of a system for modeling. Some inter-connections are usually invisible to the software, and thus do not need to be modeled in Simics. A good example are on-chip device interconnects like AMBA, CoreConnect, and OcEAN used in various SoC designs. These interconnects are implemented using complex crossbar technology and bus arbitration which is not visible at all in Simics. Also, the hierarchy of buses used in interconnects like AMBA with its high-speed and low-speed buses is invisible. Simics goes straight to the resulting memory map.

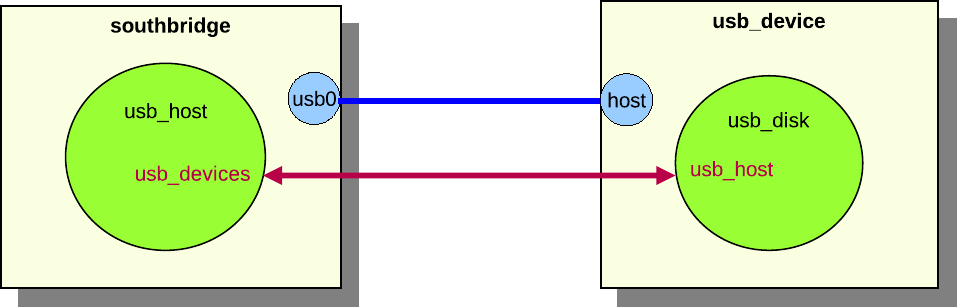

As far as Simics is concerned, interconnects transport a payload with

a possible metadata header from one place to another.

For static routing rules this transport is modeled by adding devices

to the memory map of a processor. Interconnect configuration simply becomes

a matter of editing the mapping.

Interconnects, such as an IOMMU, that restrict access to only a subset of initiators

and also limit access based on privileged level of the CPU core initiator

has to use more advanced techniques than a static memory-map to

model this behavior correctly. See chapter Modeling Interconnects.

From a functional simulation perspective, the part of memory interconnects that is important

to model is the routing rules. How the bits are transported and in what order is out of scope.

Interconnects that do not provide memory access to devices do need to be modeled explicitly.

Typical examples are I2C and Ethernet networks, where it makes sense

to model infrastructure that transports addressed packets around as

an entity in its own right.

Implementing every available function of a system in order match the hardware

is usually not necessary to run a particular software load.

Instead, it is only necessary to implement the functions used

by the software running on the system.

This is commonly the case with integrated chips and SoC devices

which contain more functions than are used in any particular case.

One example: the Freescale MPC8548 SoC was used as a controller chip for a custom ASIC on a custom board. The MPC8548 has a rich set of external connections such as PCI express, Ethernet, I2C, MDIO, and others. In this particular case, the RapidIO functionality of the MPC8548 was not used, and thus that function could be left out from the initial modeling effort for the MPC8548. When other systems appeared that used RapidIO, the function was added.

Another example is the Marvell MV64360 integrated system controller. This controller contains a memory controller for a PowerPC processor, along with some generally useful functions like PCI, serial ports, and Ethernet. Many boards using this controller do not use the built-in Ethernet port, but instead they use an external Ethernet chip connected over PCI. In this case, the built-in Ethernet controller does not need to be included in the model of the board.

Sometimes, the software explicitly turns off such functions, and in such cases one or more control registers have to be implemented that accept the "off" setting and give a warning if any other status is written.

However, a minimum requirement is to document which features are not implemented

in the device model.

It is also good practice to include registers corresponding to unimplemented functionality in the model. Such registers should simply log a warning when they are accessed. This serves to explicitly document design assumptions in the model source code and provides an obvious indication when the assumptions are violated.

Within a device, only the functionality which is actually used by the software should be modeled. This typically means focusing on a few operation modes or functions of a device, and leaving the rest unimplemented, but explicitly so, as discussed below. Often, modeling starts with a completely unimplemented device, looking at how the software interacts with the device to determine what actually needs to be implemented.

For example, a PCI Express bridge like the PEX PLX 8524 can operate ports in both transparent and non-transparent mode. However, if non-transparent mode is not actually used in a system, it can be left unimplemented.

The device model can be simplified by reducing the number of states that the device can be in. Look for states that, from the software's perspective, are only there to optimize performance. Here are some examples:

- SCSI devices can become disconnected, and when disconnected they behave differently than when connected. In most cases, we can ignore the disconnected state, and let the device be connected all the time.

- Some network devices can be put in "early interrupt" mode, where interrupts are sent some time before a buffer fills up, to give the driver more time to handle the data. It may be possible to ignore the early interrupt state, if the driver can handle the load anyway. In this case, it is a good idea to warn the user (using

log unimpl) when the driver tries to put the device in early interrupt mode.

- Some devices cache data to speed things up and provide some means to query whether the cached value is valid. The simplest way to model this is to ignore this state, and always report "no, the cache is not valid".

Even if the software uses a functionality of the hardware, you may be able to use an approximate model. For example, for performance meters and diagnostic functions, which can be complex to implement with full fidelity. Diagnostic registers which are read can often just say that everything is okay. Performance meters can calculate approximate values when they are accessed which satisfies the software.

If you find that the driver reads values from, e.g., a JTAG port, you can look at the driver source code and try to figure out what values it expects to find (look at what it compares the data to), and make the model supply some values that are acceptable.

Sometimes it is necessary to model a bit more. One particular architecture provides interfaces to access parity bits and checksums in the caches. In its boot sequence, the OS performs a self-test on the caches by writing flawed parity bits and checking that the cache handles them gracefully (reporting error or auto correcting the data). The model of this cache thus needs to simulate the parity bits. To increase performance, however, it is sufficient to simulate this only on cache lines where the parity bits have been accessed.

When approximate or invented values are being returned from the model, it is good practice to issue a warning to the user, and/or print a message to the appropriate log file.

Note that an effect of this style of modeling is that even though a device model exists, it might not fulfill the requirements of use in a different system from the one which it was developed for. As time goes on, a device typically gains functionality as it is subject to different uses from different target system configurations and software stacks.

It is easy to fall into the trap of modeling detailed aspects of the hardware that are invisible to the software. The overhead of modeling this detail can significantly slow the simulation. A trivial example is a counter that counts down on each clock cycle and interrupts when it gets to zero. An obvious way to model this is to model the counter register and decrement it on each clock cycle until it gets to zero. Simics will waste a lot of processing resources accurately maintaining the value of the counter. But this is not necessary. The counter is only visible to the software if it is explicitly read. A much better implementation is for the model to sleep until the appropriate time to interrupt arrives. If, in the meantime, the software reads the register then a calculation will need to be done to work out what would be in the register at that point. Since this probably happens rarely, if at all, the overhead of this is minimal.

A good Simics model implements the what and not the how of device functionality. The goal is to match the specification of the functionality of a device, and not the precise implementation details of the hardware. A good example of abstraction is offered by network devices. In the physical world, an Ethernet device has to clock out the bits of a packet one at a time onto the physical medium using a 5/4 encoding process. In Simics, this can be abstracted to delivering the entire packet as a unit to the network link simulation, greatly simplifying the implementation. As far as the software is concerned, this makes no difference.

Timing of the hardware can also often be simplified to make more efficient and simple device models. For example, caches can usually be ignored, since they only affect how long it takes to access memory.

DMA controllers are another example of abstraction. In Simics, DMA is typically modeled by moving the entire block of memory concerned at once and delaying notification to the processor (or other requesting device) until the time when the full transfer would have completed on the physical hardware. The bus contention between the processor and the DMA controller is not modeled, since this is not visible to the software. For a system architect with bandwidth concerns, a more detailed model can be created that logs the amount of data pushed, allowing bandwidth usage to be computed.

Abstraction can also manifest itself by making entire devices into dummy devices. For example, a memory controller might have a large number of configuration registers and reporting registers for factors like DDR latencies, number of banks open, timing adjustments, and similar low-level issues. The effects of these are not visible in Simics, and thus they can be modeled as a set of dummy registers that report sensible values to the software.

Error states is another area which can often be simplified. Error states that do not occur under normal conditions should never be entered. Most errors are hardware induced, e.g., parity errors, failure to read firmware ROM data, etc. These will never occur in Simics, because the virtual hardware is controlled by the simulator. Not having to model these error states simplifies the model.

Sometimes, though, the errors are the interesting parts of the model. If the model is to be used in developing the error handling in a device driver, the error states need to be modeled in more detail (and some means of triggering the errors must be added). Fault-injection in simulated networks is another example.

A nice side effect of Simics-style modeling with focus on the abstract function, is that it makes it easy to reuse models of device functions across multiple implementations of the functionality. As an example, the standard PC architecture contains a cascaded 8259 interrupt controller. Over time, the hardware implementation of the 8259 has changed from being a separate chip to becoming a small part of modern south bridges like the Intel® 6300ESB. But despite this huge change in implementation, the same Simics model can be used in both cases, since the functionality exposed to the software is the same.

Sometimes, abstraction goes too far in an initial implementation, and it is later realized that details have to be added. For example, some Ethernet network device models did not implement CRC error detection but assumed that all packets delivered were correct. When the time came to model a system where the handling of erroneous network packets was a key concern, this was obviously not sufficient. Thus, the handling of CRC computation and flagging CRC errors had to be added to the models.

Once a system has been analyzed and its devices and interconnections listed, it is time to start implementing all the required devices. At this point, reusing existing device models is very important to shorten the modeling time. Simics provides a large library of device models which can be used to quickly fill in large parts of the functionality of a system.

Sometimes, the precise devices are not available in the library, but similar devices are. If your license agreement allows it, you can use the source code to these similar devices contained in source packages as a starting point and adapt and extend them to create a model of the new devices. Such adaptations of existing devices typically follow the path of hardware designers as they design successive generations of products.

The IBM Ethernet controllers found on the PPC 440GP and PPC 440GX SoCs, and also being sold as BlueLibrary IP blocks form one example of how one model has been reused and adapted to successive generations of hardware. Another example is found in Intel® chipsets for Pentium® processors; successive product generation share a significant amount of device functionality, even if the names of the chips change and the functionality is moved around between different chips.

Typically, adapting a device model involves either adding or removing registers, depending on whether moving to a less capable or more capable device. It is also commonly the case that some details in the memory map of the device changes. Thus, the work of adapting a device starts with comparing the programmer's manuals for the old and new device, and determining the following:

-

Identical registers

For an adaptation to be worthwhile, most registers should fall in this category.

-

Superfluous registers

Functions in the existing model which are not found in the new device. These have to be deleted.

-

Missing registers

Must be added to the new device model.

-

Different registers

Registers with the same function or name, but where the bit layout is different between the old and new device.

-

Differences in register layout

The offsets at which various registers are mapped in the device memory map are different.

-

Differences in the number of functions

Some devices contain repeats of the same functionality, and the difference between devices is the number of functions implemented. For example, a different number of DMA channels or Ethernet controller ports. In this case, simply changing a parameter in the device model may be sufficient.

If there are too many differences, it may be more expedient and safer to implement the new device from scratch. As in all software development, doing too many changes to a DML model might be more work to get right than to implement the complete functionality from scratch, maybe borrowing some key source code from the old device model.

Finally, once existing devices have been reused and adapted and all devices not used in the system are ignored, it is time to create device models for the remaining devices. The document that describes how to program a device is often called the Programmer's Reference Manual (PRM) and the basic methodology of writing DML models is that of implementing the PRM.

As previously mentioned, the primary interface between software and the devices is the set of device registers. The PRM defines one or more register banks that contain the registers laid out at specified offsets. The register banks function as an address space for registers, such that one four-byte register may occupy the address locations 0–3, another four-byte register occupies the address locations 4–7, and so on.

The method that many users have adapted when developing a new model is to work in an iterative fashion to determine the registers and functions that actually need to be implemented in a device in order to support software by testing the software with incomplete device models. DML and Simics support a number of techniques for efficiently exploring the needed functionality.

First, a model of the complete register map of a device is created, and registers are marked as unimplemented or dummy or implemented in a limited fashion. This device model is then used with the software, and any accesses to missing or incomplete functionality is flagged by Simics, neatly pointing out precisely what is still missing in the device model.

Any access to an unimplemented register prints a warning message on the Simics command line. The simulation is allowed to continue, since it is possible that the software is content with a default reply.

Dummy registers are registers that the software is using but where the values written can be ignored and any reads return a fixed value (or the latest value written). A typical example is an error counter in a network device, if errors are not modeled, the error counter can be implemented as always reading zero.

For functions which are needed, starting with only a single mode or a subset of the device functionality, and warning when the software moves outside this envelope, is preferred. For example, a timer might initially only support the simple count-down mode required to trigger periodic interrupts to the operating system, and later adding event-counting functions and similar advanced functionality.

Another technique is to hard-wire the results of reading or writing certain registers to an acceptable result. This is typically done based on the observed behavior of the software, providing values that the software likes.

Unlike a dummy register, the eventual goal here is to implement the real functionality of the register. The hard-wired results are mainly used early in development. The logging facilities of DML allow such hard-wired results to be easily located later and upgraded to real implementations.

The goal is to get the target software up and running as soon as possible, so that problems can be found and the risk of development reduced.

Even though you have written tests to test that the device behaves as you expect, you still need to verify that it can run the software which is intended to run on the real hardware.

How do you test a completed model to see if it is both accurate and efficient enough? Basically, you try to find software that stresses the device as much as possible. Try different OS/driver combinations, if this is a requirement. Find programs on top of the OS that exercise the device in different ways. Perhaps there are diagnostic programs that verify that the device functions correctly. If possible, it can be valuable to run the programs on real hardware prior to driving into model or application details on Simics. On more than one occasion developers have debugged a device model only to realize that the software did not work on real hardware either.

Run the selected programs in Simics, with the device model in place, and look for signs of trouble. Such signs may be

- The device reports accesses to unimplemented features. These will need to be modeled.

- The program, OS, or simulated machine behaves strangely, indicating flaws in the device model's functional behavior.

- Simulation performance is poor. The model needs to be made more efficient.

It is also a good idea to sometimes let the tests run for a longer time. This allows you to spot problems with, e.g., memory leaks in the model implementation, or diagnose situations where the driver has entered some fallback mode after seeing too many errors.

If you find a problem, write a test which reproduces it using Simics's test system. Then you can fix the bug and verify that it stays fixed. More information is read in the Debugging User-Developed Simics Modules application note.

Abstraction of time is one of the most important issues in device modeling. Simics already abstracts away some details of the timing of instruction execution and scheduling in the processor, to achieve better performance; see the Understanding Simics Timing application note for more information.

Modern device drivers are often written to be largely independent of the detailed timing of the hardware. This means the device model can alter the timing behavior quite a bit without the driver noticing.

Where possible, the device model should react immediately to stimuli, even though the real device would take some time to finish the effect. This improves efficiency, because the model is invoked fewer times, and simplifies the implementation since there is no need to store transaction state for later or insert things into the event queues.

For example, a model of a network device can send a packet immediately upon request, reading the content directly from main memory rather than, e.g., initiating a DMA transfer to an internal FIFO, waiting for it to fill up, etc. Another example is an address/data register pair, where the real device requires that the data access must not occur within a specified time from the address write. The model does not need to check for this condition, since the driver will always wait long enough before attempting to read or write the data.

It is often useful to have a simple configurable delay for hardware events that take time. Sometimes the software is sensitive to things that occur too quickly (e.g., immediately) in the model compared to the real world. Adjusting a delay attribute is a simple solution for such problems.

Often, hardware reports the completion of an action like a DMA transfer, packet transfer, or similar operation with an interrupt towards the CPU. In general, the timing of such an interrupt should be similar to what one would see on real hardware. Note that some driver software crashes if the response comes immediately, as it is not built to handle an interrupt in the middle of the send routine -- it assumes a certain delay before a completion interrupt and does not protect itself against it.

If the device performs a series of small, related events, it is desirable to cluster these events into larger chunks, even if the simulator cannot respond immediately. For example, in a DMA transfer, rather than moving a few bytes every single cycle, the simulated device can move a whole memory page at a time every N cycles, where N is adapted to give the same overall transfer rate. Again, this means the model is invoked fewer times, and furthermore it will trigger other devices less often.

Continuous events or events that occur regularly should be avoided. Instead, their effects should be computed (based on the elapsed CPU time) when they become visible. The archetypal example of this is a cycle counter: instead of invoking the model to update the value on every cycle, the model should compute the value on demand whenever the counter is read. If the value is read every N cycles, this replaces N increments with one subtraction. If the value is never read, no computation is performed at all.

This principle is valid even if the computation takes as much or more time than the sum of the individual updates: if the value is never needed, it will never be computed, and even if it is, it is usually more effective to optimize the model by reducing the number of invocations than by reducing the time spent in each invocation.

The functionality of Simics can be extended by user-written modules. Modules can, among other things, contain new device models, new commands, new components, and new extension to Simics. All of need to be compiled or built to be used in simulation and the environment in which that is done is referred to as the build environment.

This chapter will describe how to configure, maintain and use that environment to build new modules for use with Simics. A project is used to contain and build user-written modules, this permits many users to share a system-wide (read-only) Simics installation. The Model Builder product contains the necessary files and tools to develop your own modules.

To develop new modules for Simics on the Windows platform, you need the MinGW compiler suite and make tools installed. See the Simics Installation Guide for more information.

If you do not wish to use the GCC compiler, you can use the Microsoft Visual C++ compiler to compile C++ modules (n.b., the Visual C++ compiler is not supported for C modules). See section 3.5.2 for more information.

The commands below should be invoked in a cmd.exe command prompt window, and the path names should be entered with backslashes as directory separators, and bin\make.bat (a shortcut to the MinGW installation) should be used when instructed to run make.

On Linux, the project makefiles require GNU make (a.k.a. gmake), which is available from ftp.gnu.org/gnu/make. In the following text, when you are asked to run gmake, this refers to running the GNU make binary, which may be called gmake or make, depending on your installation.

Simics supplies own version of libraries, for example libstdc++.so.6, under [simics-base]/[host]/sys/lib/ directory. They are actually for machines with older libraries or none at all. If user has a more recent copy than one supplied with Simics, error like "GLIBCXX... not found" would happen when try to run a binary or load a module compiled from this newer library from within Simics. If this happens the suggested workaround is to remove the older library supplied with Simics.

A project is a directory which contains all necessary user-specific files needed to run Simics and develop modules. Setting up a project is done with the File → Create Project… or using the project-setup script, like this:

where [simics] is the location of the Simics-installation.

The script will create a project directory with the following contents:

bin/ | compiler.mk | config.mk |

|---|

doc/ | GNUmakefile | modules/ |

.project-properties | simics | targets/ |

host/ | | |

simics

Starts Simics in command line mode.bin/

Directory with various other tools.doc/

On Linux a directory with links to documentation. On Windows the documentation can be found in the start menu.GNUmakefile

Makefile to build all modules under the modules directory. The file is called GNUmakefile to signify that it requires GNU make. Do not edit this file: you should probably create the config-user.mk or module-user.mk files to change the compilation parameterscompiler.mk

Makefile that selects the C compiler to use by setting the CC variable. A matching C++ compiler will be searched for by config.mk in the same path as CC if CXX is not set.config.mk

Includes [simics]/config/project/config.mk that contains default definition of make flags for different compilers, such as CFLAGS. Do not edit this file: you should probably create the config-user.mk or module-user.mk files to change the compilation parametersconfig-user.mk

Optional file that may contain user defined make variables like D or USER_BUILD_ID. For information about USER_BUILD_ID, see chapter 39.module-user.mk

Optional file that may contain user defined make targets and variables overriding the ones in config.mk and [simics]/config/project/module.mk.modules/

Contains user-developed modules. The default target in GNUmakefile builds all modules in the modules directory.targets/

Contains some pre-configured machines, to be used as examples.- <host>/

The build working directory, which is named after the host type, for example linux64 or win64. The host directory is not present until a module has been compiled. When a module is compiled, any intermediate build files, like dependency and object files (.d .o) are generated in the <host>/obj/modules/<module>/ directory. The resulting module file is placed in <host>/lib/, and the Python command file for the module is placed in the <host>/lib/python/ directory.

.project-properties

For internal use.

When the project has been created, you may type make (or possibly gmake) to build all the modules, or ./simics to start Simics.

In order to rebuild all modules, type make clean, followed by make. In order to rebuild just a single module, type make clean-modulename, for example:

project$ make # builds all modules

project$ make clean-mymodule # removes all objectfiles for "mymodule"

project$ make mymodule # builds "mymodule"

The clean targets only remove object files and similar intermediates for the module not needed when running. To remove the actual module files as well, use make clobber or make clobber-modulename.

The project setup script is used to create and upgrade projects. It can also create module skeletons to start with when writing new devices.

See Simics Reference Manual for the full documentation of the script.

To upgrade your project to a new Simics version, run the script again with no arguments. It is also possible to update the project from the Simics GUI. The project will then be updated to match the version of the running Simics.

It will do the necessary updates in the project, but leave the user-modifiable files intact. (Modified files that need to be overwritten are saved in backup versions with the extension ".~N~" (Linux) or "~N~.backup" (Windows), where N is the first free number.)

If you upgrade to Simics with a different major version number, the compiled modules will not be compatible and you need to do a make clobber to force a rebuild when you issue make.

The modules subdirectory contains source code for modules, one module per directory entry.

To add a DML module to a project, specify the --device option.

This will create some skeleton code under the modules/ directory.

After adding a module, you can build it using the top-level makefile:

project$ gmake

To emphasize that the makefile require GNU Make, it is called GNUmakefile. The sub-makefiles in the module directories are named Makefile.

When running make, command lines will not be printed by default. To see the commands, pass V=1 to make:

project$ gmake V=1

An example module written in C can be added in the same way as DML modules, but using the --c-device option. Similarly, a C++ module can be created using --c++-device.

-

Windows

> cd my-simics-project

project> bin\project-setup.bat --c-device my_c_device

project> bin\project-setup.bat --c++-device my_cc_device

-

Linux

$ cd my-simics-project

project$ ./bin/project-setup --c-device my_c_device

project$ ./bin/project-setup --c++-device my_cc_device

The top-level makefile will automatically attempt to build all modules under the modules/ directory. If you have located a module somewhere else, you must create a symlink or junction to the module from there:

It is recommended to actually copy or move the module into the modules directory of your project.

You may need to adapt the Makefile for the project-based build environment. Use a generated skeleton Makefile as a template for your rewrite.

A module to which the source is distributed with Simics, can be copied into the project by using --copy-module.

The project makefiles are configured to compile optimized modules by default. To turn off optimization and turn on debugging, set the following line in config-user.mk:

D=1

Set D=0 or remove the line entirely to use optimization again.

The D flag can also be passed on the command line:

project$ gmake D=1

The project setup script has a default set of make-variables (CC, CFLAGS, BLD_CFLAGS, etc.), which are set up in the compiler.mk and [simics]/config/project/config.mk files. The compiler.mk may be edited by the user. Additional options can be specified in config-user.mk.

The makefile with the actual build rules for all modules is [simics]/config/project/module.mk. This file is included at the end of each module Makefile. To override rules or variables in this module.mk, add a module-user.mk file in the project, similar to the config-user.mk file described above.

The default set of variables assumes that you will be using GCC. If you want to use a different compiler, you need to change the CC variable in compiler.mk. The flags for the compiler are set up in [simics]/config/project/config.mk.

On Windows host, the Microsoft Visual C++ compiler is supported for compiling C++ modules. To use it, you have to run all project setup and build commands from a Visual Studio command prompt, which can be started from the Start menu.

The exact names of the menu items varies with the version of Microsoft Visual C++ you have installed. For Visual C++ 2008 Express Edition, it defaults to Start menu → Microsoft Visual C++ 2008 Express Edition → Visual Studio Tools → Visual Studio 2008 Command Prompt.

Once you have started the Visual Studio command prompt, set both of the CC and CXX environment variables to cl. This should allow you to run GNU make as usual.

If your compiler is not supported by config.mk, please report to Simics Support.

In order to make the build environment in Simics recognize a module as a build target, there must be a makefile called Makefile in its source directory.

A module makefile must set up a number of make variables and then include the generic makefile for Simics modules. The following is an example of a module's Makefile, for a module written in C:

MODULE_CLASSES=FAS366U

SRC_FILES=esp.c

MODULE_CFLAGS=-DFAS

SIMICS_API = 5

include $(MODULE_MAKEFILE)

A complete list of the available variables to set is provided below:

- DMLC_FLAGS

- (optional) Module-specific

parameters for the DML dependency generator and compiler

- (optional) space-

or colon-separated list of module names (optionally followed by a

subdirectory: modulename/some/path). The source

code directory of this module (or the corresponding subdirectory)

will automatically be added to make's VPATH. The

directories will also be added to the include search path for DML

and C modules (using the -I options to the compilers).

The current project as well as any installed Simics packages will be

searched for the module source code. Note that only the first matching

source code directory found will be used, allowing the project to

override the version of an installed package.

- (optional)

Additional object files to link into the module. The module build

will be dependent on these files, so additional rules can be

provided in the module's Makefile to build these

files before linking them.

- (optional)

Directories to add to VPATH when building.

- IFACE_FILES

- Header files to

compile for an interface module. If IFACE_FILES

contains file-name.h, in a Simics module named

module-name, then the Python module

simmod.module_name.file_name will be

created, containing the Python bindings for all interfaces and

types defined in file-name.h. The filenames in

IFACE_FILES must not include any directory

components; if any files are to be found in another directory,

then that directory must be included in VPATH so the file

is found correctly; e.g., by setting the

EXTRA_MODULE_VPATH variable.

See also section Restrictions in

Defining New Interface Types

in Model Builder User's Guide for restrictions and usage

of Python bindings.

- MODULE_CFLAGS

- (optional)

Parameters for the C and C++ preprocessor and compiler

- MODULE_CLASSES

- Space-separated list of configuration classes that this module

registers. This information is used by SIM_get_class()

to determine that this module should be automatically loaded when

one of the listed classes is requested.

- MODULE_COMPONENTS

- Space-separated list of components that this module

registers.

- MODULE_LDFLAGS

- (optional)

Module-specific flags for the C and C++ linker. Any particular

library linking (such as -lpthread, -lm, or -L to give paths to

search for libraries) can be included here. If the module has

dependencies on LD_MODULE_PATH, it is possible to

specify -rpath so that the module will contain the

paths that were valid at compilation time.

- PYTHON_FILES

- Space-separated list of

Python source files to include. These Python files will be

copied and potentially compiled (see COMPILE_PYC) and placed

in a Python package specific to the module. If

a module my-module includes the file file.py

in PYTHON_FILES, then the resulting Python module

will be available as simmod.my_module.file in Simics. Two

filenames get special treatment if included in

PYTHON_FILES:

- simics_start.py is automatically imported while

Simics is launched.

- module_load.py is imported by Simics when the

Simics module is loaded.

The names checkpoint_update.py and init.py

are reserved for future use, and not allowed in the list of files.

- MODULE_USER_VERSION

- (optional)

User supplied free-text string describing the module version. The

version string is available in Simics even without loading the module,

through the list-modules command or the

SIM_get_all_modules API function.

- SIMICS_API

- (optional)

Simics API to use when compiling the module. See the Simics

Migration Guide for a description on how to compile old

modules with a new Simics version. Valid API settings are listed

in [simics]/[host]/include/api-versions.mk.

- SRC_FILES

- Source files to

compile in the module. C source file names must end in

.c; C++ source file names must end in

.cc, .cpp, .cxx

or .C (the last not allowed on Windows).

DML file names must have a .dml

suffix. Any .py files should be listed

in the PYTHON_FILES variable.

- SRC_IMAGES

- (optional)

Images to copy directly in the images subdirectory in

$(TARGET_DIR)

- SYSTEMC

- If set to 'yes', provides

compiler and linker flags that allow building the SystemC Library adapter

and SystemC devices. See the SystemC Library Programming Guide

for more information.

- SYSTEMC_CORE_CFLAGS

- (optional) Parameters for the C and C++ preprocessor and

compiler when using user-specified SystemC source.

- SYSTEMC_CORE_LDFLAGS

- (optional) SystemC core specific flags for the C and C++

linker. Any particular library linking can be included here.

- SYSTEMC_MODULE_CFLAGS

- (optional) Parameters for the C and C++ preprocessor and

compiler when compiling SystemC modules.

- THREAD_SAFE

- If set to

yes, declare that the module is thread-safe.

- USE_CC_API

- It can be set

to a specific version to select which version of C++ Device API

to use. Current supported versions are '1' and '2'. See the

C++ Device API Programming Guide for more information.

- SUPPRESS_DEVICE_INFO

- If set to

'yes', suppress output of the .xml device info file

by the DML compiler.

- COMPILERS

- An optional

list of compatible compilers, in order of preference. The allowed list

element values are gcc and cl, for MinGW and Visual

Studio respectively. On platforms other than Windows, cl is

ignored.

- COMPILE_PYC

- If this is set

to 1, then the files listed in PYTHON_FILES

are compiled, not copied.

The user can also add new rules to the makefile, after the inclusion of the generic $(MODULE_MAKEFILE). This is usually not needed.

The following variables can be used in the module's makefile (i.e., [project]/modules/<module>/Makefile). They should be considered read-only, i.e., they should not be changed.

SIMICS_PROJECT is the full path to the project directory.TARGET_DIR is the directory in which compiled modules are placed ([project]/[host-type]/lib).SRC_BASE is the full path to the project modules directory ([project]/modules).HOST_TYPE is the Simics host architecture, i.e., what OS/hardware Simics has been compiled for, such as win64 or linux64.LATEST_API_VERSION is the API version you get if 'latest' is specified in the module's makefile.TARGET is the name of the module being compiled. Note that SRC_BASE and TARGET gives you access to the module source code directory, so that you can refer to source code files with absolute paths when necessary, as in $(SRC_BASE)/$(TARGET)/foo.c.

If a Makefile contains C/C++ files in the SRC_FILES section then for these C/C++ modules the writer has to provide an init_local function that registers classes/interfaces/attributes via the corresponding C API functions. The init_local is required if and only if SRC_FILES contains C/C++ sources. This is identified by suffix, .h files do not count as a C files. Refer to the following section on how to define init_local for C++ devices x.

There are a number of C/C++ pre-processor defines that are set depending on the host that the module is being compiled on. They are usually not needed, but useful in some special cases. There are also defines specifying the host architecture and host operating system. All these defines are set in the Simics include file global.h.

It is possible to set a user defined version string in loadable modules. This is done by setting the MODULE_USER_VERSION variable in the module's Makefile. The version string will be printed by the list-modules and list-failed-modules commands.

When Simics starts, it will read the supported architecture and word size for all modules on the Simics module path. Only modules that match the architecture and word size of the running Simics binary will be available for loading into Simics. While scanning the modules, Simics will also check what classes the module will register when it is loaded. This way modules can be loaded automatically when the classes that they define are used in a configuration.

If a module cannot be loaded into the current Simics, it will be added to the list of failed modules. This list can be displayed with list-failed-modules, that takes an optional parameter -v for more verbose output.

simics> list-failed-modules

Current module version number: 1050 Lowest version number supported: 1050

MODULE DUP VERSION USR_VERS LINK

---------------------------------------------

8042 1040

image 1040

spitfire-mmu.so X

The columns after the module name (or file name in the case of a link error) indicate different kinds of errors. An X in the DUP column means that this module could not be loaded because this module has the same name as another module found in the Simics module search path, and that this one was overridden. An X in the VERSION column means that the module was created for another, non-compatible, version of Simics. LINK means that this module cannot be loaded into Simics because of unresolved symbols. Use list-failed-modules -v to see the actual error message from the run-time module loader.

Modules compiled for other architectures or word lengths will not be listed

If a module requires linking against libraries that are not expected to be found on end user's systems, then these libraries can be included in a Simics package. They should be placed in host/sys/lib. For testing and development, this can also be in a Simics project, by adding the project as a package path.

On Windows, Simics will add this directory to the DLL search path for every package.

On Linux, one must also make sure that the Simics module is linked in such a way that the library is found when the module is loaded at run time, by adding this to the module Makefile:

MODULE_LDFLAGS += -Wl,-rpath,'$$ORIGIN/../sys/lib'

On all platforms, Simics will detect and warn if the same library exists in multiple packages. To avoid such problems, one can for example include a version number in the library filename.

Note that the Simics module resolution logic does not apply to these extra libraries.

If an extra library is dependent on the Simics API, it must link with the Simics library in order to make symbol lookup work correctly. This can be done by building the library as part of the Simics module that uses it, and adding these linker flags:

-L$(SIMICS_BASE)/$(HOST_TYPE)/bin -lsimics-common

The Emacs (http://www.gnu.org/software/emacs/) extensible editor is the first choice for many programmers, and Simics Model Builder includes a customized mode for editing DML source files. The DML mode is an extension of the standard Emacs editing mode for the C programming language.

Simics includes the Emacs file dml-mode.el to add support for the DML major mode. This file can be found in the [simics]/scripts directory. To use it, add the following lines to your Emacs configuration file (usually [home]/.emacs or [home]/.xemacs/init.el):

(setq load-path (cons "[simics]/scripts" load-path))

(autoload 'dml-mode "dml-mode" "DML mode" t)

(add-to-list 'auto-mode-alist '("\\.dml\\'" . dml-mode))

(you need to replace the text [simics] in the above with the full path to your Simics Base installation which includes Model Builder). After restarting Emacs, the DML mode should be automatically enabled when you open a file whose name ends in ".dml".

For more information, run the command M-x describe-mode in an Emacs buffer using the DML mode, or read the "Editing Programs" section of the Emacs documentation.

This chapter provides an overview of the workflow used when modeling devices using DML. It starts from an overview of the set up of the build environment, and moves on to actual modeling and testing. After reading this chapter you should have an understanding of the workflow used when developing device models in Simics and be ready for the details provided in the following chapters.

This chapter requires the *Model Builder * product and installation of the *QSP-x86 Package* package which provide all source code and scripts.

This section describes how to set up a functional build environment that will be used to write new devices in DML and create new configurations throughout the remaining sections of the overview. The Simics build environment depends on a working GCC compiler toolchain on Linux and Windows. On Windows, the Microsoft Visual C++ compiler can be used as well, but only for C++ modules. See chapter 3 for details.

DML provides distinct advantages for ease of model creation and performance when compared to alternative modeling languages. The DML compiler (DMLC) translates a device model description written in DML into C source code that will be compiled and loaded as a Simics module. The output of dmlc is a set of C source and header files that can be compiled in the same way as a hand-written C module would be. Refer to the DML 1.4 Reference Manual for details related to the DML language and compiler usage not covered in this introduction.

For those who prefer to create device models in C and Python, details can be found in chapter [x](writing-module-with-c.md) of this document.

The Model Builder product is installed together with Simics Base if a decryption key for it is provided. In the rest of this document the file system path of the Simics Base package directory of your Simics installation will be referred to as [simics], where also Model Builder features may exist, your "home" directory as [home], and your Simics project directory as [project]. The project is where you have all your modules and scripts to set up your system in Simics.

Shell commands are indicated by lines starting with a $ sign; you can use your favorite shell (in Windows, the builtin command prompt should suffice). Most shell commands should be issued from the project directory; this is indicated by lines starting with project$.

On the Windows platform, you need the MinGW tools installed. See the Simics Installation Guide for more information. How to set up a working Simics build environment is described in detail in chapter 3.

Any text editor can be used to write DML code, but we recommend Emacs. The Emacs DML mode is described in section 3.6.

If you install Simics Model Builder with Simics Base package, there will be example source code in [simics]/src/devices/ for many different classes of devices which can be used as the bases for writing new models in Simics. Some of the available examples are listed below:

-

DS12887

A Dallas Semiconductor DS12887 Real Time Clock. It is used in several X86 systems.

-

AM79C960

An AM79C960 Ethernet adapter. It is used in several X86 systems.

-

DEC21140A-dml

A PCI Fast Ethernet LAN controller.

-

sample-device-dml

A very simple DML device including the most common DML object types.

-

sample-pcie-device

A simple PCIe device using the DML PCIe support in Simics.

-

sample-i2c-device

A very simple device using the i2c_device interface.

This section describes how to write a simple memory mapped device and how to load it into Simics and test it. The example device code in this section is based on the sample-device-dml device which can be found in [simics]/src/devices/sample-device-dml/.

The following DML code models a memory-mapped device with a single 32-bit (4-byte) register at offset 0. Upon a read access this device will return the value 42 as the result of the operation, simultaneously printing a Simics console log message with the text "read from counter".

To compile this example device, you first need to set up a Simics project, using the project-setup script (see chapter 3 for details). Type this to setup a project in an empty directory [project]:

The project will contain project-local versions of most simics scripts. It is important to always change directory to your project directory and run the local versions of the scripts, to avoid tampering with your base installation of Simics.

Make sure you have a working MinGW installation, before you try to set up the project. See chapter 3 or the Installation Guide for details.

Pass the --device=device_name flag to the project-setup script to create Makefiles and DML skeleton files for your new device. For example:

You will now have a directory [project] containing (among other things) a GNU Makefile and a subdirectory named modules, which is where your modules are located.

A Simics module is a self contained library of code which can be loaded into Simics. Each module consists of one or more Simics classes each of which implements the some functionality useful in a simulation. This document will use the term device, when referring to a class which is a model of a piece of hardware. Your new device is called simple_device and is located in [project]/modules/simple_device/simple_device.dml. This file is only a skeleton. It implements a single register at offset 0.

The name of the main DML source file should be the module name plus the extension `.dml`. The build system does not require that the DML file nor the module have the same name as the device it is recommended to limit confusion. Additionally a module can contain more than one device though this considered advanced usage.

Now, go to the [project] directory and run GNU make. By default, this builds all your modules.

The program GNU `make` is named `gmake` on some systems. On Windows you can run the script `[project]\bin\make.bat` as a shortcut.

The newly created device model also includes a simple test using the Simics test framework. The test framework provides tools and libraries to make it easy to check that your modules behave as expected. The test framework looks for tests in several directories in your project: test, modules, and targets. We recommend that you place tests for a particular module in a subdirectory of that module's source. For example in [project]/modules/foo/test if your module is named foo. This way the test is kept close to the code it is testing.

You run tests in the project with the [project]/bin/test-runner tool or by using make. The tool can also be used to list all test suites it finds in the project. For complete documentation see the Simics Reference Manual.

When project-setup creates a new DML device, it automatically creates a test suite in the source directory for your module, with an empty test file for your device. You can run the test suite now:

project$ make test

.

Ran 2 tests in 1 suites in 0.680668 seconds.

All tests completed successfully.

We want our device to have a single register, which always reads as 42. To write a test for this behavior open [project]/modules/simple_device/test/s-simple_device.py and change it to look like this:

import dev_util

import conf

import stest

# Create an instance of the device to test

dev = pre_conf_object('dev', 'simple_device')

SIM_add_configuration([dev], None)

dev = conf.dev

# Create a register wrapper for the register

r = dev_util.Register_LE(dev.bank.regs, 0)

# Test that reading from the register returns 42...

stest.expect_equal(r.read(), 42)

# ...even if we write something else to it.

r.write(0x4711)

stest.expect_equal(r.read(), 42)

We can now run our test to check if the device behaves as expected:

project$ make test

f

[project]/logs/tests/linux64/modules/simple_device/test/test.log:1: *** failed () ***

Ran 2 tests in 1 suites in 0.872507 seconds.

Failures: 1 Timeouts: 0

You can look at the log file to get more information about the failure, but the reason is simple: the needed functionality is not implemented yet. The next section will describe how to change the device to pass the test.

Now implement the functionality needed to pass the test. Open the generated skeleton file in your favorite text editor, and modify its contents to look like as follows:

dml 1.4;

device simple_device;

param desc = "sample DML device";

param documentation = "This is a very simple device.";

bank regs {

register counter size 4 @ 0x0000 is (read) {

method read() -> (uint64) {

log info: "read from counter";

return 42;

}

}

}

The read template provides a method, read, which is called when a memory

transaction performs a read at the address of the register. Registers can also

instantiate a corresponding template write, which provides the write

method, which is called for write transactions. Instantiating either of these

templates will override the default behaviour of a register which is to acquire

its read value by reading its fields, or writing its value by writing to its

fields. It's worth looking at the DML 1.4 Reference Manual to find the details

on how defining semantics for fields and registers works.

Rebuild the module and rerun the test. You can do this in a single step since make test automatically builds all modules in the project:

project$ make test

=== Building module "simple_device" ===

DML-DEP simple_device.dmldep

DMLC simple_device-dml.c

DEP simple_device-dml.d

CC simple_device-dml.o

CCLD simple_device.so

.

Ran 2 tests in 1 suites in 0.638387 seconds.

All tests completed successfully.

You can read more about writing functional tests for your models in chapter 17.

Congratulations you have now created your first Simics module. You can find the module binary in the [project]/host/lib directory.

In order to interact with our device from the Simics prompt, we need to create a simple machine with our device (refer to section 29 for more detailed information about Simics configuration scripts). For now, just create the file [project]/targets/vacuum/my-vacuum.simics with the following contents:

run-script "%script%/vacuum.simics"

@SIM_create_object("simple_device", "dev1")

phys_mem.add-map dev1.bank.regs 0x1000 0x100

The script above creates an almost empty machine with our sample device mapped at 0x1000 in the phys_mem memory space. Please note the dev:port syntax, which is the preferred way of mapping a bank in memory.

We can now start the newly created machine configuration and interact with our device:

project$ ./simics targets/vacuum/my-vacuum.simics

simics> phys_mem.read 0x1000 -l

This returns value of 42 and causes the log message "read from counter" to be printed.

DML has direct support for writing log messages to the Simics logging facility, through the log statement. The most important logging concepts are the type and the verbosity level of the message. The most common message types are info and error. The verbosity level is a number between 1 and 4, where 1 is used for important messages that should always be displayed, and 4 is used for detailed debugging messages that should only be printed when verbose logging has been requested. Error messages are always printed regardless of verbosity level setting. By default, Simics only displays messages of level 1 on the console.

In the previous example, no level was provided, which will make it default to 1. To set the level of a message, add it after the type string, but before the colon, as in:

log info, 2: "This is a level 2 message.";

To change what messages are displayed, use the log-level command.

simics> log-level 4

This will cause all log messages with log-level 1-4 to be displayed. Now make a memory access to the device, as before:

simics> phys_mem.read 0x1000 -l

This time (apart from the "read from counter" message), you should see an info message saying something like

"Read from register regs.counter -> 0x2a". This is logged by the built-in code that handles register read accesses,

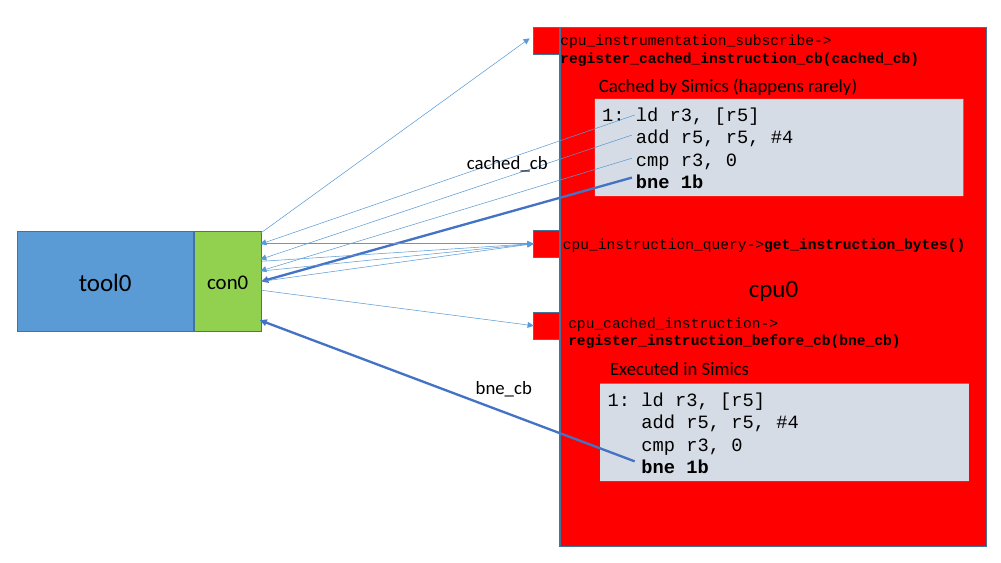

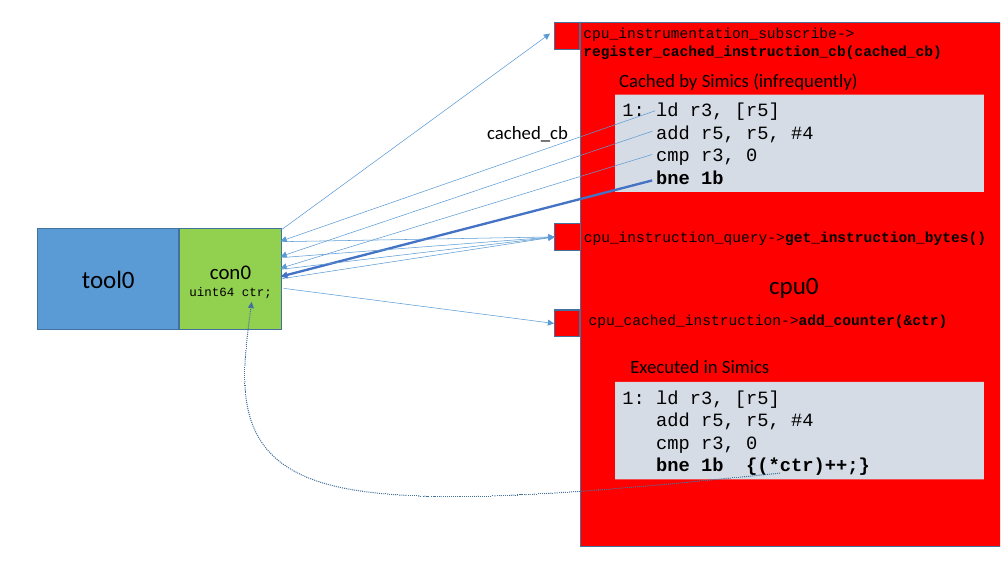

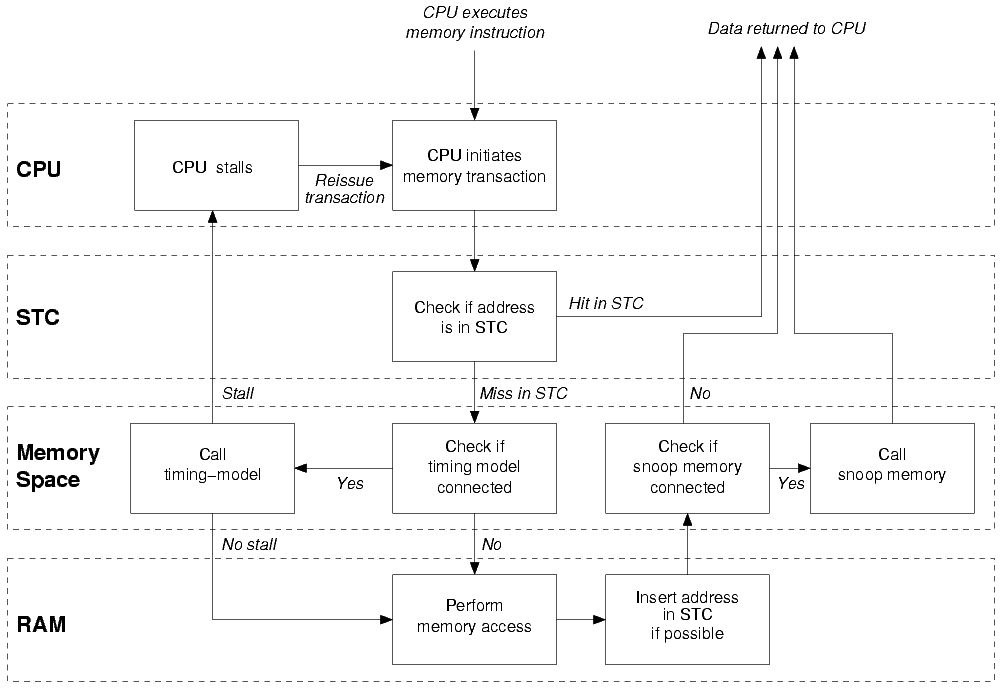

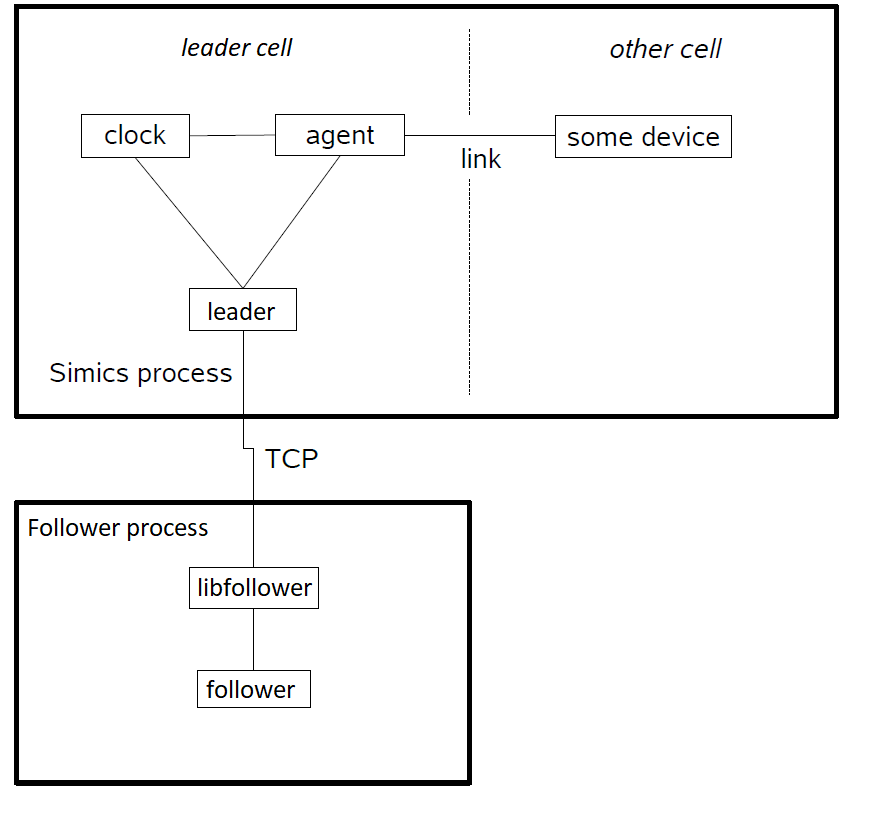

and such messages can be very useful when debugging a device model.